Intention

This website is designed to provide a step-by-step guide to how the ERA dataset can be analysed using the R functions that come as part of the ERAg package as well as advanced machine learning methods from the tidymodels meta-package framework. All analyses are demonstrated using packages within the freely available R statistical environment (R Development Core Team 2018). The website is divided into three parts:

1) Introduction to a) Evidence for Resilient Agriculture, b) Machine Learning and c) the relevance of analysing agroforestry data from ERA,

2) An initial exploratory data analysis to familiarise ourselves with the data,

3) A comprehensive step-by-step guide on how machine learning analysis using tidymodels can help to get better insights into how climate and soil factors influence the performance of agroforestry in Africa.

What is Evidence for Resilient Agriculture?

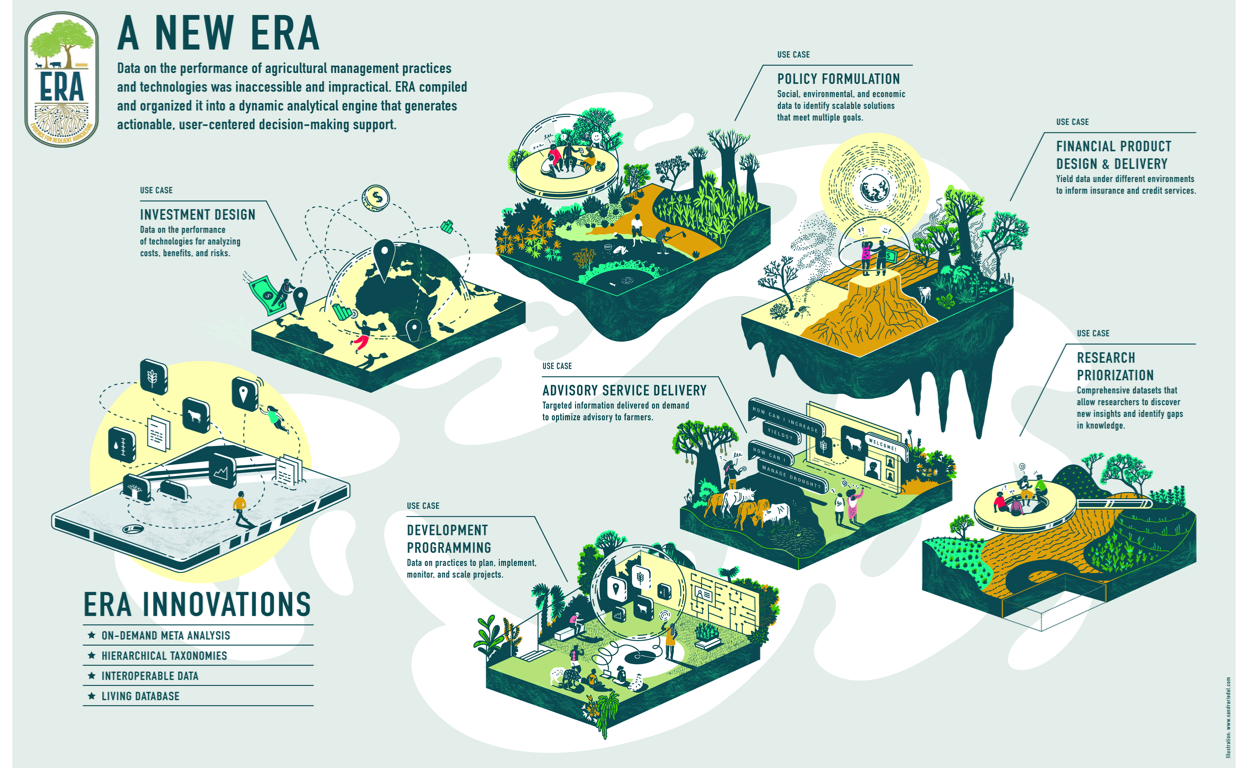

Evidence for Resilient Agriculture (ERA) is a meta-database designed to explore questions on the performance of agricultural practices, technologies and outcomes. ERA enables users to ask and answer questions about the effects of shifting from one practice or technology to another on indicators of productivity, resilience and climate change mitigation outcomes. It was specifically created to uncover what the data reveal about what works where and for whom. ERA makes it possible to perform dynamic, user-defined analyses for use cases such as Policy Formulation, Investment Design, Financial Product Design & Delivery, Research Prioritisation, Advisory Service Delivery and Development Programming. This makes it possible to analyse a great variety of agricultural practices, outcomes and products from a user-centred approach, depending on the questions asked by development organisations, academic researchers, or policy- and decision-makers.

Figure 1: Evidence for Resilient Agriculture (ERA) is a dynamic analytical engine that generates actionable, user-centered decision-making support based on various ERA Use Cases. This allows users to fine-tune their ERA-based analysis based on cases such as: Policy Formulation, Investment Design, Financial Product Design & Delivery, Research Prioritisation, Advisory Service Delivery and Development Programming.

The search - where does the data come from?

ERA was developed in general accordance with PRISMA guidelines.

See The Scientific Basis of Climate-Smart Agriculture: A Systematic Review Protocol, the Climate-Smart Agriculture Compendium 2019 Reference Guide and the vignette “ERA-Search-Protocols-Draft” in the ERAg package for a detailed explanation of the search protocol.

To view the ERA Search Protocols run this line in R:

# Run this code and open the vignette "ERA-Search-Protocols-Draft".

browseVignettes("ERAg")

The ERA database

Currently ERA contains about 75,000 observations from about 1,400 agricultural studies that have taken place in Africa. Data have been compiled on more than 250 agricultural products. Management practices, outcomes and products are nested within respective hierarchies, allowing ERA to aggregate and disaggregate information. These data are linked to detailed covariates such as climate, soil and socio-economics based on geographical coordinates reported in the studies and seasons reported or inferred from remote sensing.

The analysis potential

ERA supports analysing the database in many ways. The fundamental analysis follows standard meta-analysis procedures.

Response Ratios - the chosen effect size outcome in ERA

Specifically, ERA calculates response ratios (RR) and effect size statistics. The response ratio is calculated as the log of the ratio between the mean effect of the treatment (e.g. an improved practice) and the mean effect of the control practice. These response ratios can then be combined to generate an ‘effect size’ for the relationship, which provides an overall estimate of the magnitude and variability of the relationship. When calculating effect sizes, results from different studies are weighted to reduce bias from any given study (e.g., studies that have hundreds of observations are weighted higher than studies with very few observations). ERA weights the results of studies based on their level of precision. Because historical agricultural studies rarely report variance at the level necessary, ERA also weights results positively based on the number of replications in a given study and negatively based on the number of observations.
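To make the calculation concrete, here is a minimal sketch in base R on a made-up data frame. MeanT and MeanC mirror the ERA column names used later in this guide, while Study, RepT, RepC and the weighting formula itself are assumptions chosen only to match the description above (more replications give more weight, more observations per study give less). The exact weights used by ERAg should be checked in the package documentation.

```r
# Hypothetical observations; Study, RepT and RepC are illustrative names only
obs <- data.frame(
  Study = c("A", "A", "A", "B"),
  MeanT = c(2.4, 3.0, 2.7, 1.8),   # mean outcome under the treatment
  MeanC = c(2.0, 2.5, 3.0, 1.2),   # mean outcome under the control
  RepT  = c(4, 4, 4, 3),           # replications, treatment
  RepC  = c(4, 4, 4, 3)            # replications, control
)

# Log response ratio: positive values mean the treatment outperformed the control
obs$RR <- log(obs$MeanT / obs$MeanC)

# Number of observations contributed by each study
obs$n_obs <- ave(seq_along(obs$Study), obs$Study, FUN = length)

# Assumed weight: grows with replication, shrinks with observations per study
obs$weight <- (obs$RepT * obs$RepC / (obs$RepT + obs$RepC)) / obs$n_obs

# Weighted mean effect size, back-transformed to a percentage change
effect_size <- weighted.mean(obs$RR, obs$weight)
pct_change  <- (exp(effect_size) - 1) * 100
```

Working on the log scale keeps increases and decreases symmetric around zero, which is why the weighted mean is taken before back-transforming to a percentage.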

Structure of ERA - higher level concepts and hierarchical mapping

Users inherently need information at different levels of aggregation. Policy-makers, for example, often talk about management practices and outcomes such as agroforestry and productivity, respectively. Extension agents, by contrast, refer to management practices in greater detail, describing, for example, the difference between intercropping

In addition to spatial and temporal co-variables, such as biophysical factors and geo-locations (longitude and latitude), there are three high level concepts that are the foundation of ERA’s experiment classification system. These are practices, outcomes and products, also called experimental units (EUs). Practices here is shorthand for management practices and technologies, which describe agronomic, agroforestry, and livestock interventions, for example, crop rotations, livestock dietary supplements or the like. Outcomes, as they sound, relate to the dependent variables in experiments (e.g., yield, benefit-cost ratios, soil carbon etc.). Products refers to the species or commodity that the outcome is measured on, for example maize, milk, or meat.

Each is organized hierarchically, where concepts are nested below and above related concepts. This organization allows the user to aggregate or disaggregate data using these fields to explore different questions, from narrow (e.g., how does Gliricidia-based alley cropping change maize crop yields?) to broad (e.g., considering all products, which practices, on average, improve productivity, resilience and mitigation outcomes?). It also helps the user deliver information at a level relevant for specific audiences. For example, policy-makers refer to agroforestry broadly while farmers are typically more interested in nuanced (disaggregated) results for species and practices. ERA’s practice, outcome, and product hierarchies are unique but have recently been mapped to other ontologies including AGROVOC.

As explained, ERA is structured hierarchically: practices, outcomes and products each have several levels of aggregation. For example, practices have Theme as the most aggregated level, followed by Practice and then Sub-Practice. Outcomes are aggregated as Pillar, followed by Sub-Pillar, Indicator and finally Sub-Indicator. Products are aggregated by Type and Sub-Type.
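As a small illustration of what aggregating and disaggregating means in practice, the sketch below summarises a made-up data frame at two levels of the practice hierarchy. The column names PrName and SubPrName follow those reported by ERAg::ERAConcepts$Prac.Levels; the practice labels and response ratios are invented for the example and are not taken from ERA.

```r
# Toy observations; values and labels are made up for illustration
toy <- data.frame(
  PrName    = c("Agroforestry", "Agroforestry", "Agroforestry", "Crop Rotation"),
  SubPrName = c("Alley Cropping", "Alley Cropping", "Parkland", "Crop Rotation"),
  RR        = c(0.30, 0.10, -0.05, 0.20)
)

# Disaggregated view: one mean response ratio per sub-practice
by_subpractice <- aggregate(RR ~ PrName + SubPrName, data = toy, FUN = mean)

# Aggregated view: one mean response ratio per practice
by_practice <- aggregate(RR ~ PrName, data = toy, FUN = mean)
```

The same pattern would apply to the outcome and product hierarchies by swapping in the corresponding columns.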

Hierarchy and aggregation levels for ERA Observations

ERAg::ERAConcepts$Agg.Levels

| | Choice | Choice.Code | Agg | Label |
|---|---|---|---|---|
| 1: | Observation | O | Index | No. Locations |
| 2: | Study | S | Code | No. Studies |
| 3: | Location | L | Site.Key | No. Observations |

Hierarchy and aggregation levels for ERA Practices

ERAg::ERAConcepts$Prac.Levels

| | Choice | Choice.Code | Prac | Base |
|---|---|---|---|---|
| 1: | Subpractice | S | SubPrName | SubPrName.Base |
| 2: | Practice | P | PrName | PrName.Base |

Hierarchy and aggregation levels for ERA Outcomes

ERAg::ERAConcepts$Out.Levels

| | Choice | Choice.Code | Out |
|---|---|---|---|
| 1: | Subindicator | SI | Out.SubInd |
| 2: | Indicator | I | Out.Ind |
| 3: | Subpillar | SP | Out.SubPillar |
| 4: | Pillar | P | Out.Pillar |

Hierarchy and aggregation levels for ERA Products

ERAg::ERAConcepts$Prod.Levels

| | Choice | Choice.Code | Prod |
|---|---|---|---|
| 1: | Product | P | Product.Simple |
| 2: | Subtype | S | Product.Subtype |
| 3: | Type | T | Product.Type |

How did ERA evolve - looking back

ERA has a long history and started as the Climate-Smart Agriculture Compendium in 2012. Since then, the project has gone through many iterations and improvements to clarify the underlying practice, outcome and product descriptions and hierarchies, build on external databases, prototype data products with users, and develop online capacity. The CSA Compendium (and subsequently ERA) has been principally funded by the CGIAR’s Research Program on Climate Change, Agriculture and Food Security (CCAFS) Flagship on Practices and Technologies. It has received supplementary funding from the European Union, International Fund for Agricultural Development (IFAD), Food and Agriculture Organization of the United Nations, United States Department of Agriculture–Foreign Agricultural Service (USDA–FAS), CCAFS Flagship on Low-emission Development and the Center for International Forestry Research’s (CIFOR) Evidence-Based Forestry. The Web portal was specifically funded by the EU–IFAD project: Building Livelihoods and Resilience to Climate Change in East and West Africa: Agricultural Research for Development (AR4D) for large-scale implementation of Climate-Smart Agriculture (#2000002575).

Why agroforestry?

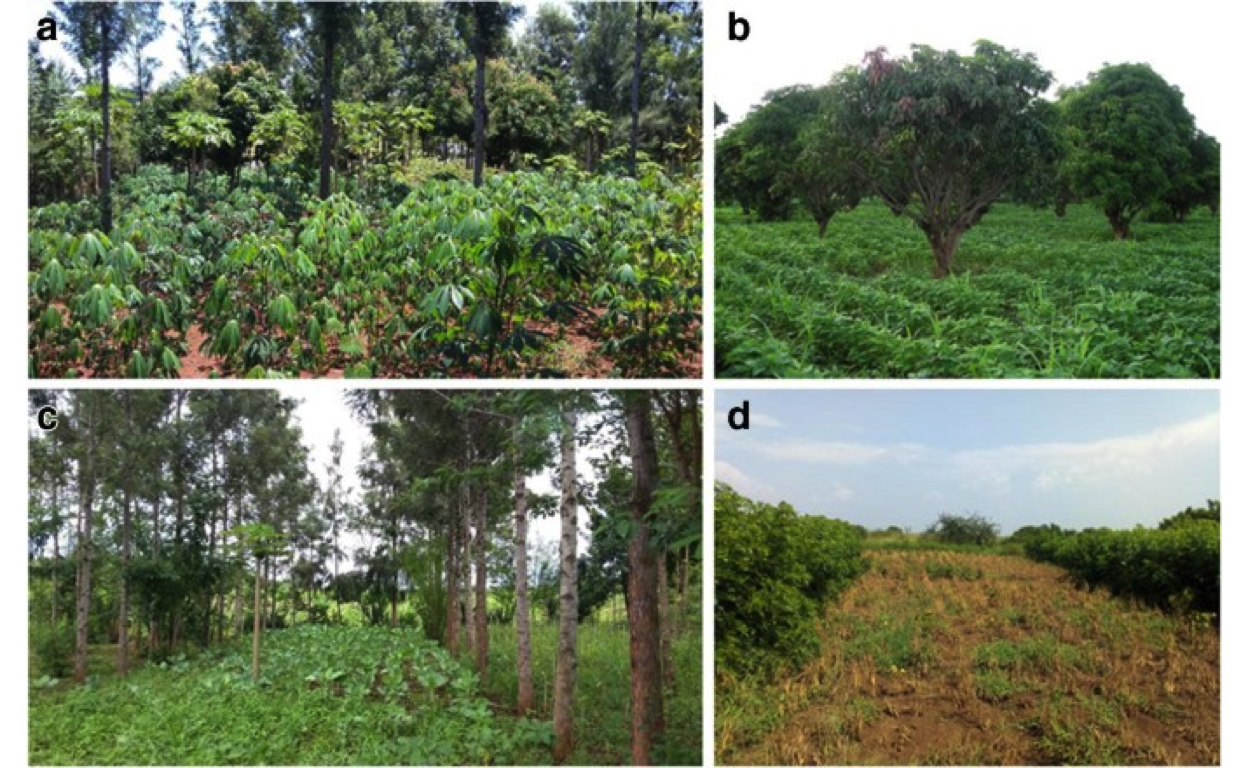

Agroforestry is considered one of the most sustainable intensification practices and is now widely promoted in sub-Saharan Africa (SSA), as it provides low-input, resource-conserving farming approaches that are socially relevant and relate well to livelihoods and ecosystem functions. Agroforestry can help to maintain food supplies in many landscapes in SSA while at the same time increasing their climate resilience. The practice involves the deliberate growing of woody perennials in association with food crops and pastures. Agroforestry is viewed as a sustainable alternative to monoculture systems because of its ability to provide multiple ecosystem services. In some areas, agroforestry is preferred over monoculture systems because it can combine provisioning ecosystem services with environmental benefits. For example, agroforestry can raise carbon stocks in agricultural systems, maintain or improve soil fertility, regulate soil moisture content, control erosion, enhance pollination, and supply food (e.g., fruits and nuts), fuel wood, fodder, medicines, and other products (Kuyah et al. 2019). Ecosystem services and the productivity of agroforests are affected by tree-crop-environment interactions.

These interactions can occur aboveground, for example through interception of radiant energy and rainfall by foliage and moderation of temperatures by canopies, or belowground, e.g., through competition or complementarity in resource use (nutrients, water, space). Primarily, tree-crop-environment interactions influence biomass production, nutrient uptake and availability, storage and availability of water in the soil, water uptake by trees and crops, loss by evapotranspiration, and crop yields. Despite the great number of studies investigating the role of agroforestry practices in ecosystem service provision, evidence is still lacking concerning the overall effects of agroforestry and how biophysical conditions (co-variables) affect crop yield (as well as soil fertility, erosion control, water regulation etc.). This makes it difficult to assess the degree to which different biophysical conditions and agroforestry practices can be optimized for agroecological systems, and to anticipate their respective consequences for crop yield. Because agroforestry has the potential to contribute to both climate change mitigation and adaptation by sequestering carbon, reducing greenhouse gas emissions, enhancing resilience, and reducing threats while facilitating biodiversity migration to more favourable conditions in highly fragmented agricultural landscapes, it is a promising practice to investigate.

library(cowplot) # provides ggdraw() and draw_image(); draw_image() also needs the magick package

ggdraw() +

draw_image(here::here("IMAGES", "Agroforestry_win_win.png"))

Figure 2: Figure from Kuyah et al. (2019) - Agroforestry practices common in Africa. a Homegarden (a mosaic landscape with cassava, pawpaw, Mangifera indica L. and Grevillea robusta A.Cunn. ex R.Br. in Uganda). b Dispersed intercropping (M. indica in maize-bean intercrop in Malawi). c Intercropping with annual crops between widely spaced rows of trees (collard intercropped with G. robusta). d Alley cropping (climbing beans planted between hedges of Gliricidia sepium (Jacq.) Kunth ex Walp. in Rwanda)

What is machine learning - and why is it needed to reveal patterns in ERA?

What is machine learning

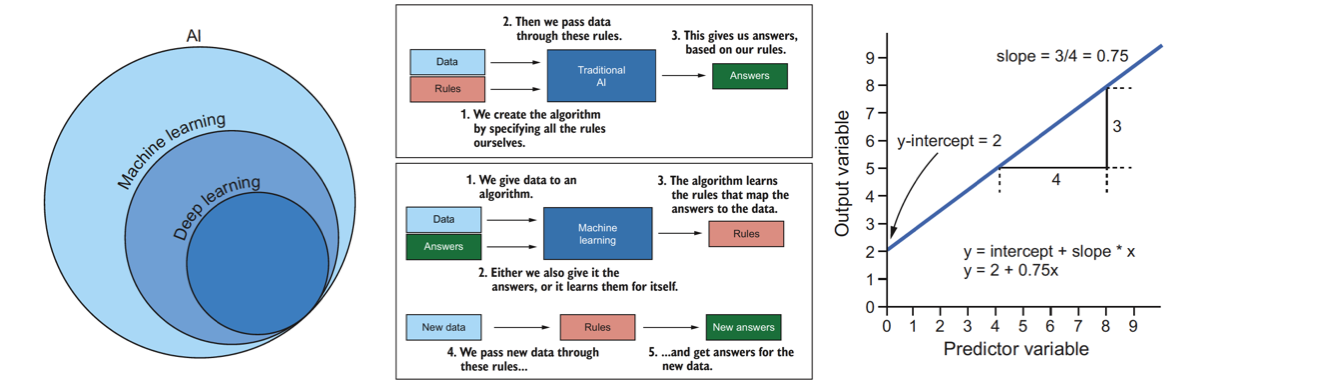

Machine learning (ML) is a powerful tool in the arsenal of modelling, especially when we are dealing with large amounts of data with complex relationships and interdependencies. Simply put, machine learning allows us to feed the potentially immense amounts of ERA data to a computer algorithm and have the algorithm analyse and reveal patterns in our data in an interpretable way based only on the input data. On top of that, we can use the algorithms, whether regression or classification, to make predictions for future scenarios and combinations of input data in order to answer complex questions, such as: How does a certain biophysical co-variable (temperature or precipitation) affect agroforestry outcomes?

Machine learning isn’t solely the domain of large tech companies or computer scientists. Anyone with basic programming skills can implement machine learning in their work. If you’re a scientist, machine learning can give you extraordinary insights into the phenomena you’re studying. In this guide it is not expected that you are great at math. Although the techniques you’re about to learn are based in math, I’m a firm believer that there are no hard concepts in machine learning. All of the processes we’ll explore together will be explained graphically and intuitively. Machine learning, sometimes referred to as statistical learning, is a sub-field of artificial intelligence (AI) whereby algorithms “learn” patterns in data to perform specific tasks. Although algorithms may sound complicated, they aren’t. In fact, the idea behind an algorithm is not complicated at all. An algorithm is simply a step-by-step process that we use to achieve something that has a beginning and an end. Chefs have a different word for algorithms—they call them “recipes.” At each stage in a recipe, you perform some kind of process, like beating an egg, and then you follow the next instruction in the recipe, such as mixing the ingredients.

Arthur Samuel, a scientist at IBM, first used the term machine learning in 1959. He used it to describe a form of AI that involved training an algorithm to learn to play the game of checkers. The word learning is what’s important here, as this is what distinguishes machine learning approaches from traditional AI. Traditional AI is programmatic. In other words, you give the computer a set of rules so that when it encounters new data, it knows precisely which output to give. In contrast, in machine learning approaches we feed the computer the data and not the rules, and we allow the computer itself to learn the rules. The advantage of this approach is that the machine can “learn” patterns we didn’t even know existed in the data, and the more data we provide, the better it gets at learning those patterns.

ggdraw() +

draw_image(here::here("IMAGES", "AI_ML_DeepLearning.png"), width = 0.3) +

draw_image(here::here("IMAGES", "What_is_ML.png"), width = 0.5, x = 0.2) +

draw_image(here::here("IMAGES", "Fitting_a_line_intro_to_ML.png"), width = 1, x = 0.25)

Figure 3: Introduction to Machine Learning

The difference between a model and an algorithm

In practice, we call a set of rules that a machine learning algorithm learns a model. Once the model has been learned, we can give it new observations, and it will output its predictions for the new data. We refer to these as models because they represent real-world phenomena in a simplistic enough way that we and the computer can interpret and understand it. Just as a model of the Eiffel Tower may be a good representation of the real thing but isn’t exactly the same, so statistical models are attempted representations of real-world phenomena but won’t match them perfectly.

The process by which the model is learned is referred to as the algorithm. As we discovered earlier, an algorithm is just a sequence of operations that work together to solve a problem. So how does this work in practice? Let’s take a simple example. Say we have two continuous variables, and we would like to train an algorithm that can predict one (the outcome or dependent variable) given the other (the predictor or independent variable). The relationship between these variables can be described by a straight line that can be defined using only two parameters: its slope and where it crosses the y-axis (the y-intercept). An algorithm to learn this relationship could look something like the line-fitting example in Figure 3. We start by fitting a line with no slope through the mean of all the data. We calculate the distance each data point is from the line, square it, and sum these squared values. This sum of squares is a measure of how closely the line fits the data. Next, we rotate the line a little in a clockwise direction and measure the sum of squares for this line. If the sum of squares is bigger than it was before, we’ve made the fit worse, so we rotate the slope in the other direction and try again. If the sum of squares gets smaller, then we’ve made the fit better. We continue with this process, rotating the slope a little less each time we get closer, until the improvement on our previous iteration is smaller than some preset value we’ve chosen. The algorithm has iteratively learned the model (the slope and y-intercept) needed to predict future values of the output variable, given only the predictor variable. This example is slightly crude but hopefully illustrates how such an algorithm could work.
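The rotating-line procedure described above can be sketched in a few lines of base R. This is only an illustration of the idea, not how linear models are fitted in practice: the toy data, the function names (sum_of_squares, fit_line), the starting step size and the choice to halve the step whenever a rotation makes the fit worse are all assumptions made for this example.

```r
# Toy data: a noisy straight-line relationship (made-up values)
set.seed(42)
x <- runif(50, 0, 10)
y <- 2 + 0.5 * x + rnorm(50, sd = 0.5)

# Sum of squared vertical distances between the points and a line that
# passes through the mean of the data with the given slope
sum_of_squares <- function(slope, x, y) {
  intercept <- mean(y) - slope * mean(x)
  sum((y - (intercept + slope * x))^2)
}

fit_line <- function(x, y, step = 0.5, tolerance = 1e-10, max_iter = 10000) {
  slope <- 0                            # start with a flat line through the mean
  ss <- sum_of_squares(slope, x, y)
  for (i in seq_len(max_iter)) {
    candidate <- slope + step           # rotate the line a little
    ss_new <- sum_of_squares(candidate, x, y)
    if (ss_new < ss) {                  # better fit: keep the rotation
      improvement <- ss - ss_new
      slope <- candidate
      ss <- ss_new
      if (improvement < tolerance) break
    } else {                            # worse fit: turn around and rotate less
      step <- -step / 2
    }
  }
  list(slope = slope,
       intercept = mean(y) - slope * mean(x),
       sum_of_squares = ss)
}

fit <- fit_line(x, y)
fit$slope
coef(lm(y ~ x))   # ordinary least squares, for comparison
```

The learned slope and intercept are the model; fit_line() is the algorithm that learned them. On data like these the result should land very close to what lm() returns, since both minimise the same sum of squares.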

While certain algorithms tend to perform better than others with certain types of data, no single algorithm will always outperform all others on all problems. This concept is called the no free lunch theorem. In other words, you don’t get something for nothing; you need to put some effort into working out the best algorithm for your particular problem. Data scientists typically choose a few algorithms they know tend to work well for the type of data and problem they are working on, and see which algorithm generates the best-performing model. You’ll see how we do this later in this guide. We can, however, narrow down our initial choice by dividing machine learning algorithms into categories, based on the function they perform and how they perform it.

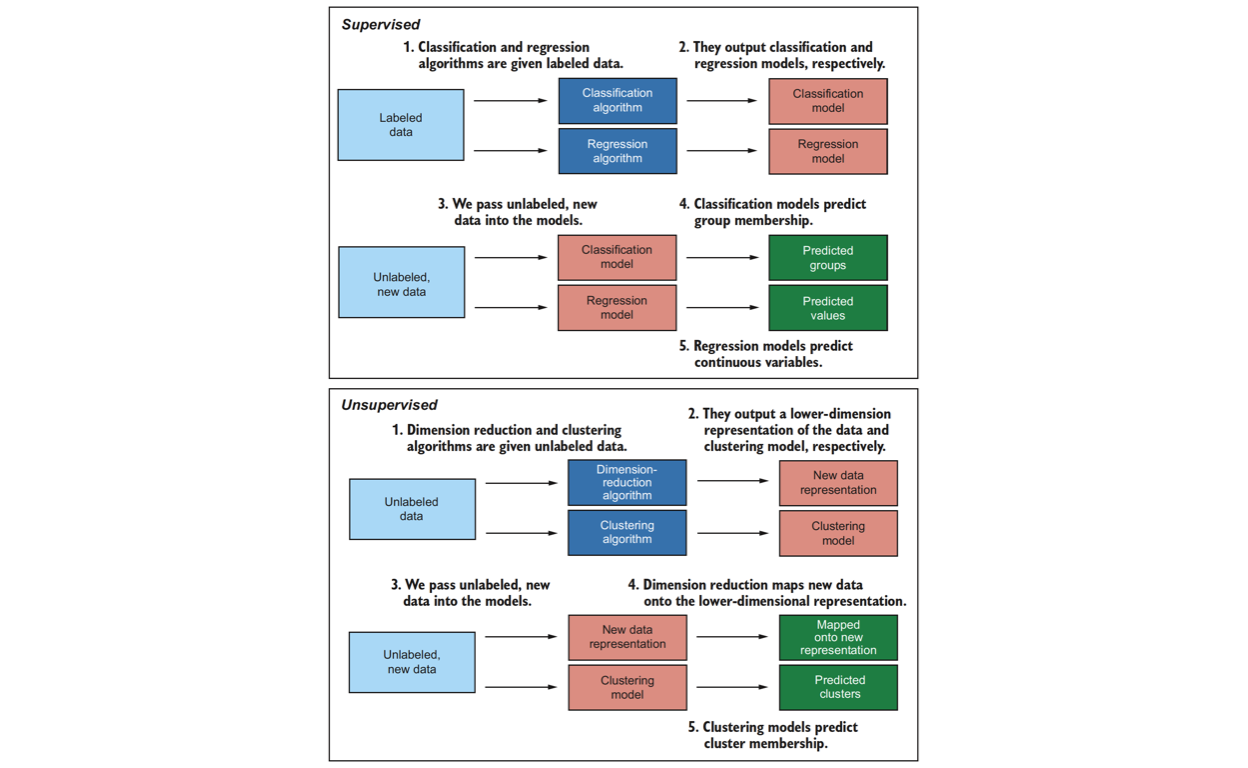

Classes of machine learning algorithms

All machine learning algorithms can be categorized by their learning type and the task they perform. There are three learning types:

- Supervised

- Unsupervised

- Semi-supervised

The type depends on how the algorithms learn. Do they require us to hold their hand through the learning process? Or do they learn the answers for themselves? Supervised and unsupervised algorithms can be further split into two classes each:

- Supervised

  - Classification

  - Regression

- Unsupervised

  - Dimension reduction

  - Clustering

The class depends on what the algorithms learn to do. So we categorize algorithms by how they learn and what they learn to do. But why do we care about this? Well, there are a lot of machine learning algorithms available to us. How do we know which one to pick? What kind of data do they require to function properly? Knowing which categories different algorithms belong to makes our job of selecting the most appropriate ones much simpler.
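To make the distinction tangible, here is a minimal base R sketch with one supervised task (regression) and one unsupervised task (clustering). It uses R's built-in iris dataset purely as a stand-in, since it ships with every R installation; nothing here is ERA data, and the choice of lm() and kmeans() is just for illustration.

```r
# Supervised regression: the outcome (Petal.Width) is handed to the algorithm
reg_model <- lm(Petal.Width ~ Petal.Length, data = iris)
predict(reg_model, newdata = data.frame(Petal.Length = 4))

# Unsupervised clustering: no outcome is supplied, only the measurements
set.seed(1)
clusters <- kmeans(iris[, 1:4], centers = 3)

# Compare the clusters the algorithm found with the species labels it never saw
table(clusters$cluster, iris$Species)
```

In the first call we hold the algorithm's hand by telling it what to predict; in the second it has to find structure on its own.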

ggdraw() +

draw_image(here::here("IMAGES", "Supervised_and_unsupervised_ML.png"))

Figure 4: Supervised vs unsupervised Machine Learning

Why machine learning is needed for analysing ERA data

Evidence for Resilient Agriculture (ERA) is the most comprehensive (meta)-database of agro-environmental data in Africa to date. Its unique aspect of generalisability through effect sizes and geo-referenced data observations opens the doors for state-of-the-art analysis across spatio-temporal scales. This makes ERA particularly suitable for pinpointing which agricultural technologies work well where, and for understanding how certain agricultural practices, outcomes and products relate to one another. In addition, the latest version of ERA includes detailed, spatially explicit information on a large number of biophysical factors (e.g. soil and climate), which allows research into not only where a certain practice performs well but potentially also why. But in order to carry out the analysis required to answer these questions, we need powerful algorithms capable of analysing complex patterns across and between dozens of features (also called variables). This is where machine learning comes in, and it has enormous potential to do so.

What is tidymodels?

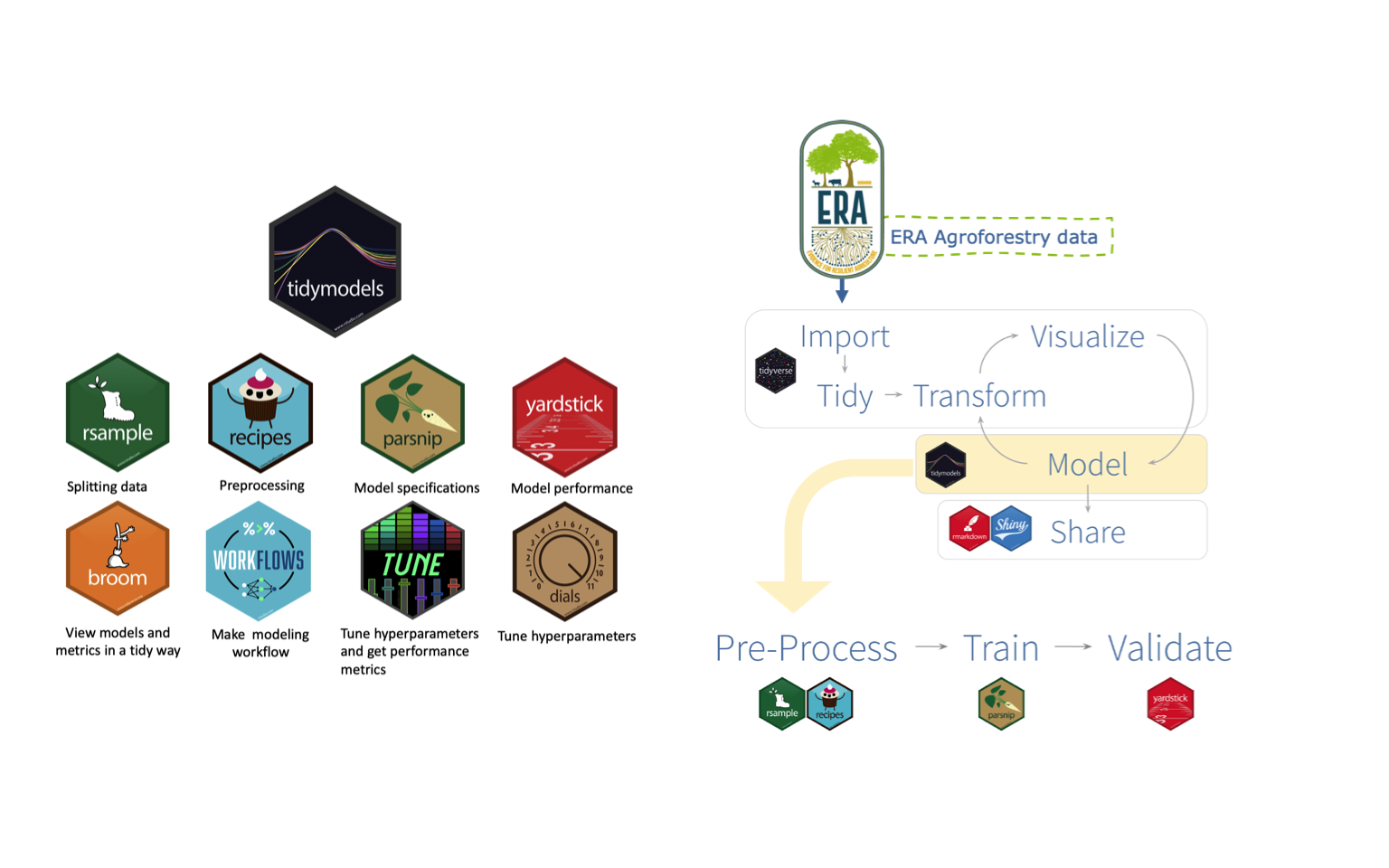

Many machine learning R packages are well known, with some of the most common being mlr (now mlr3) and caret. See these excellent blogs (mlr3 vs. caret, tidymodels vs. mlr3 and caret vs. tidymodels) for more information and general comparisons between mlr3, caret and tidymodels. Additionally, what scikit-learn is for Python, tidymodels could be for R. The tidymodels framework is a collection of packages for modelling and machine learning using tidyverse

ggdraw() +

draw_image(here::here("IMAGES", "tidymodels_packages_description.png"), width = 0.5) +

draw_image(here::here("IMAGES", "tidymodels_for_ERA.png"), width = 0.4, x = 0.5)

Figure 5: Tidymodels meta-package framework

Intention of this document

The intention of this report is to provide users and readers with an example-based, step-by-step guide on how ERA data can potentially be analysed using machine learning approaches. The idea is that anyone with basic familiarity with R and ERA should be able to perform similar analysis steps on demand, using this document as a guideline for any ERA-related analysis of their own.

Aim, objective and research questions

Aim

The aim of this document is to provide a step-by-step guide and vignette-like

Objectives

We are going to achieve this ambitious goal by following through a number of objectives. First we are going to perform a comprehensive exploratory data analysis (EDA)

Research Question(s)

What biophysical factors influence the performance of agroforestry systems and can these factors be used to spatially map the suitability of these systems? Where do different agroforestry systems perform better and where do they perform worse?

Sub-question 1: How do biophysical factors relate to the performance of agroforestry systems?

Sub-question 2: How can biophysical factors help to understand agroforestry suitability in the face of climate change and resilience?

Sub-question 3: What trade-offs and synergies exist between biophysical factors, management and socio-economic factors, when it comes to agroforestry systems suitability?

Hypotheses

- Like any agroecosystem

- Agroforestry systems are complex and diverse but can be classified into broad distinct classes as done in the ERA database based on agroforestry practices and sub-practices.

Methodology

All analysis, machine learning, documentation and text editing are done using packages within the freely available R statistical environment (R Development Core Team 2018) with R version 4.1.1 GUI 1.77 High Sierra through RStudio version 1.4.1717. An extensive effort has been made to make all functions, (meta)-data manipulation and analysis outcomes accessible and interpretable to the users and readers of this document. The methods and steps taken in this document are roughly divided into five areas.

a preliminary Systematic Mapping

A subset of the ERA agroforestry data will be made so that we only work with agroforestry data with crop yield and/or biomass yield as the outcome. We use this subset to calculate effect sizes for each individual observation using the MeanC and MeanT columns in the ERA data. With these a response ratio (RR)

an exploratory data analysis (EDA) designed to get familiar with the data and to identify and deal with abnormalities, relationship patterns in the data and other important aspects of the outcome feature (RR) and the biophysical co-variables (predictor features). In the EDA we are going to make use of several R packages created specifically for initial data visualisation, exploratory data analysis and the important aspect of data cleaning and dealing with outliers. Outliers will be excluded from the analysis based on the “extreme outlier removal method,” where response ratios above or below 3 ∗ IQR (interquartile range
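As a rough sketch of this kind of filter, the base R snippet below drops values lying more than 3 ∗ IQR outside the first and third quartiles. That reading of the rule (often called the outer fences) is an assumption on our part, and the response ratios are made up for the example.

```r
# Made-up response ratios with one extreme value at each end
rr <- c(-4.0, -0.3, -0.1, 0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 5.0)

# First and third quartiles and the interquartile range
q   <- unname(quantile(rr, probs = c(0.25, 0.75)))
iqr <- q[2] - q[1]

# Assumed fences: 3 * IQR below the first and above the third quartile
lower <- q[1] - 3 * iqr
upper <- q[2] + 3 * iqr

# Keep only the observations inside the fences
rr_clean <- rr[rr >= lower & rr <= upper]
```

With a multiplier of 3 rather than the more familiar 1.5, only the most extreme response ratios are removed, so most of the data are retained.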

The tidymodels

- Model recipes - defining key pre-processing steps on data

- Setting model specifications

- Defining model workflows

- Model (hyper)-parameter tuning

- Selecting best performing models - best (hyper)-parameters

- Finalising model workflow with best performing model (hyper)-parameter settings

- Perform last model fitting using all data (training + testing)

- Model validation/evaluation and performance, e.g. variable importance

- Creating final Ensemble Stacked Model - with a selection of different high-performing models

- Lastly we are going to briefly explore the potential of using species distribution modelling (SDM) to spatially project where on the African continent agroforestry practices perform relatively better compared to alternative agricultural practices. To do this a set of environmental parameters will be used as the biophysical predictors. Here we are going to use the R packages: sdm and BiodiversityR.
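The tidymodels steps listed above, from recipe to final fit, can be sketched roughly as follows. This is a minimal illustration under stated assumptions: the data are simulated (the column names temperature, precipitation and RR are placeholders, not ERA fields), a single decision tree with the rpart engine stands in for the models used later, and the variable importance, stacked ensemble and SDM steps are left out.

```r
library(tidymodels)

set.seed(123)
# Simulated stand-in for ERA data: a response ratio and two biophysical predictors
toy <- tibble(
  temperature   = runif(200, 15, 30),
  precipitation = runif(200, 300, 1500),
  RR            = 0.02 * temperature - 0.0003 * precipitation + rnorm(200, sd = 0.2)
)

split <- initial_split(toy, prop = 0.8)        # training/testing split
folds <- vfold_cv(training(split), v = 5)      # resamples used for tuning

# Recipe: pre-processing steps applied to the predictors
rec <- recipe(RR ~ ., data = training(split)) %>%
  step_normalize(all_numeric_predictors())

# Model specification with two hyper-parameters marked for tuning
spec <- decision_tree(cost_complexity = tune(), tree_depth = tune()) %>%
  set_engine("rpart") %>%
  set_mode("regression")

# Workflow: bundle the recipe and the model specification
wf <- workflow() %>%
  add_recipe(rec) %>%
  add_model(spec)

# Tune over a small grid and pick the best settings by RMSE
tuned <- tune_grid(wf, resamples = folds, grid = 5)
best  <- select_best(tuned, metric = "rmse")

# Finalise the workflow, fit on the training set, evaluate on the testing set
final_wf  <- finalize_workflow(wf, best)
final_fit <- last_fit(final_wf, split)
collect_metrics(final_fit)
```

Keeping the recipe and model in one workflow means the pre-processing is re-estimated inside every resample, which helps avoid information from the assessment data leaking into tuning.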