Here we are going to explore Machine Learning with Tidymodels on the ERA agroforestry data

Loading necessary R packages and ERA data

Loading general R packages

This part of the document is where we actually get to the nitty-gritty of the ERA agroforestry data and therefore it requires us to load a number of R packages for both general Explorortive Data Analysis and Machine Learning.

Show code

# Using the pacman functions to load required packages

if(!require("pacman", character.only = TRUE)){

install.packages("pacman",dependencies = T)

}

# ------------------------------------------------------------------------------------------

# General packages

# ------------------------------------------------------------------------------------------

required.packages <- c("tidyverse", "here", "see", "ggplot2", "hablar", "kableExtra", "RColorBrewer",

"cowplot", "ggpubr", "ggbeeswarm", "viridis", "GGally", "DataExplorer", "corrr",

"ggridges", "Boruta",

# ------------------------------------------------------------------------------------------

# Parallel computing packages

# ------------------------------------------------------------------------------------------

"parallelMap", "parallelly", "parallel",

# ------------------------------------------------------------------------------------------

# Packages specifically for tidymodels machine learning

# ------------------------------------------------------------------------------------------

"tidymodels", "treesnip","stacks", "workflowsets",

"finetune", "vip", "modeldata", "finetune",

"visdat", "spatialsample", "kknn",

"kernlab", "ranger", "xgboost", "tidyposterior"

)

p_load(char=required.packages, install = T,character.only = T)

Loading the ERAg package

The ERAg package includes all the ERA data we need for our ED steps.

Show code

# devtools::install_github("peetmate/ERAg",

# auth_token = "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",

# build_vignettes = T)

library(ERAg)

Preliminary data cleaning and data wrangling

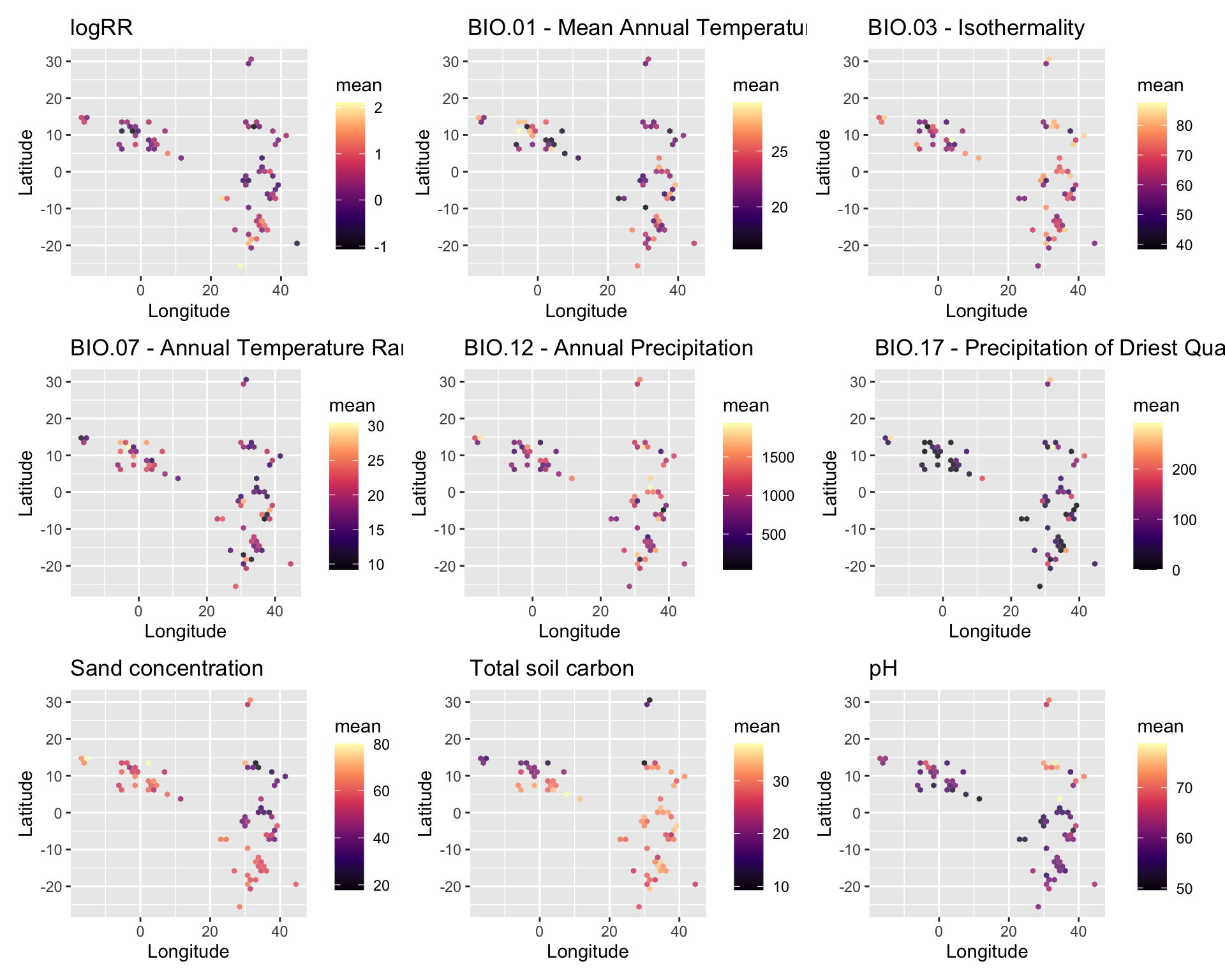

The real power of ERA is that it is geo-referenced by latitude and longitude of where the agricultural research/study was performed. This make it exceptionally relevant for getting insights into useful relationships between any spatially explicit data and ERA practices, outcomes and (crop) products. Any data that can be spatially referenced such as weather, soil, marked access etc. can be investigated in relation to ERA data. In our case we are going to make use of two datasets, a digital soil for the continent of Africa called iSDA and a climate dataset, called BioClim adapted from WorldClim.org to have more biologically meaningful variables. The digital soil map on 30 m resolution is from the Innovative Solutions for Decision Agriculture (iSDA) data from Hengl, T., Miller, M.A.E., Križan, J. et al. (2021). Please see the websites isda-africa.com, worldagroforestry.org/isda and zenodo.org/isdasoil for more information and direct data access to this soil data. Please see the paper of Noce, S., Caporaso, L. & Santini, M. (2020) for more information on WorldClim and BioClim variables.

Merging ERA and biophysical pretictor features

Now that we are familiar with the biophysical co-variables, we are going to merging our ERA agroforestry data with the iSDA and the BioClim data. We can use the coordinate information we have from our latitude and longitude columns in our ERA agroforestry data to merge by.

Note: here that we are proceeding with the agroforestry dataset called “ERA.AF.PR.TRESH” that we created in the previous section - because this dataset is the subset of ERA.Compiled, in which we have only agroforestry data, we have filtered out all non-annual crops and included a thresholds with a minimum of 25 observations per agroforestry practice. The ERA.AF.PR.TRESH data is not the output of the ERAg::PrepareERA function, thus we have not removed data with negative outcomes, that would potentially cause issues in the machine learning analysis.

# Defining new variable of agroforestry data

# To avoid common problems of data tables we are going to use

# the data.table::copy() function to add our ERA.AF.PR.TRESH data and define a new variable

era.agrofor <- data.table::copy(ERA.AF.PR.TRESH)

dim(era.agrofor)

#[1] 6361 217

BioClim climate co-variables

Show code

# Getting the BioClim co-variables from the ERAg package and converting the dataset to a dataframe

ERA.BioClim <- as.data.table(ERAg::ERA_BioClim)

# Changing the name of the column that contains the coordinates from "V1" to "Site.Key", so that we can use it to merge later

names(ERA.BioClim)[names(ERA.BioClim) == "V1"] <- "Site.Key"

# ------------------------------------------------------------------------------------------------------------------------

# merging with the agroforestry.bioclim data using the common "Site.Key" coordinates and creating a new dataset

era.agrofor.bioclim <- cbind(era.agrofor, ERA.BioClim[match(era.agrofor[, Site.Key], ERA.BioClim[, Site.Key])])

dim(era.agrofor.bioclim)

# [1] 6361 332

# rmarkdown::paged_table(era.agrofor.bioclim)

ERA Physical co-variables

Show code

# Getting some of the older the physical (ERA_Physical) co-variables from the ERAg package.

# Note that we load in this ERA data because some of this information is not found in the iSDA data (e.g. altitude and slope)

#converting the dataset to a dataframe

ERA.Phys <- as.data.table(ERAg::ERA_Physical)

# selecting features

ERA.Phys <- ERA.Phys %>%

dplyr::select(Site.Key, Altitude.mean, Altitude.sd, Altitude.max, Altitude.min, Slope.med, Slope.mean, Slope.sd, Slope.max,

Slope.min, Aspect.med, Aspect.mean, Aspect.sd)

# ------------------------------------------------------------------------------------------------------------------------

# merging with the era.agrofor.bioclim data using the common "Site.Key" coordinates and creating a new dataset

era.agrofor.bioclim.phys <- cbind(era.agrofor.bioclim, ERA.Phys[match(era.agrofor.bioclim[, Site.Key], ERA.Phys[, Site.Key])])

dim(era.agrofor.bioclim.phys)

# [1] 6361 345

iSDA soil co-variables In a similar fasion we can load the iSDA soil co-variables from the ERAg package.

Show code

# iSDA

iSDAsoils <- ERAg::ERA_ISDA

# We want to retain the soil depth information from iSDA, so we create fields for upper and lower measurement depths.

iSDAsoils[grepl("0..200cm",Variable),Depth.Upper:=0

][grepl("0..200cm",Variable),Depth.Lower:=200

][,Variable:=gsub("_m_30m_0..200cm","",Variable)]

iSDAsoils[grepl("0..20cm",Variable),Depth.Upper:=0

][grepl("0..20cm",Variable),Depth.Lower:=20

][,Variable:=gsub("_m_30m_0..20cm","",Variable)]

iSDAsoils[grepl("20..50cm",Variable),Depth.Upper:=20

][grepl("20..50cm",Variable),Depth.Lower:=50

][,Variable:=gsub("_m_30m_20..50cm","",Variable)]

iSDAsoils[,Weight:=Depth.Lower-Depth.Upper]

iSDAsoils <- unique(iSDAsoils[,list(Median=weighted.mean(Median,Weight),Mean=weighted.mean(Mean,Weight),SD=weighted.mean(SD,Weight),Mode=Mode[Weight==max(Weight)]),by=list(Variable,Site.Key)])

iSDAsoils <- dcast(iSDAsoils,Site.Key~Variable,value.var = c("Median","Mean","SD","Mode"))

ERA.iSDA <- as.data.table(iSDAsoils)

# ------------------------------------------------------------------------------------------------------------------------

# merging with the era.agrofor.bioclim.phys data using the common "Site.Key" coordinates and creating a new dataset

era.agrofor.bioclim.phys.isda <- cbind(era.agrofor.bioclim.phys, ERA.iSDA[match(era.agrofor.bioclim.phys[, Site.Key], ERA.iSDA[, Site.Key])])

dim(era.agrofor.bioclim.phys.isda)

# [1] 6361 425

# ([1] 6361 418)

We see that the number of features have increased dramatically. We now have 418 co-variables, also called independent variables in modelling, and features in typical machine learning language. From now on the term “feature” will be used to describe the columns including the co-variables.

Show code

Removing duplicated columns

Show code

era.agrofor.bioclim.phys.isda.no.dubl <- era.agrofor.bioclim.phys.isda %>%

subset(., select = which(!duplicated(names(.))))

Data Cleaning

Deriving value from any machine learning-based approach crucially depends on the quality of the underlying data. There is a lot of data wrangling and pre-processing to do before this messy data is useful and ready for machine learning analysis. We are first going to perform some preliminary data cleaning as our first pre-processing step. Even though many of the important data cleaning steps are integrated into the tidymodels framework when we are going to construct our machine learning model workflows it is still important to perform the crucial data cleaning step manually prior to advancing into the more complex machine learning in order to get a better understanding of the nature of the data.

We will iterate between the data cleaning and the exploitative data analysis of the agroforestry data.

Renaming features from BioClim and iSDA Note that all variables are mean values. Keep individual units in mind!

# New ERA dataframe with all biophysical co-variables

agrofor.biophys <- data.table::copy(era.agrofor.bioclim.phys.isda.no.dubl)

dim(era.agrofor.bioclim.phys.isda.no.dubl)

# [1] 6361 415

class(agrofor.biophys)

# [1] "data.table" "data.frame"

dim(agrofor.biophys)

# [1] 6361 415

agrofor.biophys.rename <- data.table::copy(agrofor.biophys) %>%

# Renaming BioClim features

rename(Bio01_MT_Annu = wc2.1_30s_bio_1.Mean) %>%

rename(Bio02_MDR = wc2.1_30s_bio_2.Mean) %>%

rename(Bio03_Iso = wc2.1_30s_bio_3.Mean) %>%

rename(Bio04_TS = wc2.1_30s_bio_4.Mean) %>%

rename(Bio05_TWM = wc2.1_30s_bio_5.Mean) %>%

rename(Bio06_MinTCM = wc2.1_30s_bio_6.Mean) %>%

rename(Bio07_TAR = wc2.1_30s_bio_7.Mean) %>%

rename(Bio08_MT_WetQ = wc2.1_30s_bio_8.Mean) %>%

rename(Bio09_MT_DryQ = wc2.1_30s_bio_9.Mean) %>%

rename(Bio10_MT_WarQ = wc2.1_30s_bio_10.Mean) %>%

rename(Bio11_MT_ColQ = wc2.1_30s_bio_11.Mean) %>%

rename(Bio12_Pecip_Annu = wc2.1_30s_bio_12.Mean) %>%

rename(Bio13_Precip_WetM = wc2.1_30s_bio_13.Mean) %>%

rename(Bio14_Precip_DryM = wc2.1_30s_bio_14.Mean) %>%

rename(Bio15_Precip_S = wc2.1_30s_bio_15.Mean) %>%

rename(Bio16_Precip_WetQ = wc2.1_30s_bio_16.Mean) %>%

rename(Bio17_Precip_DryQ = wc2.1_30s_bio_17.Mean) %>%

rename(Bio18_Precip_WarQ = wc2.1_30s_bio_18.Mean) %>%

rename(Bio19_Precip_ColQ = wc2.1_30s_bio_19.Mean) %>%

# Renaming iSDA soil variables

rename(iSDA_Depth_to_bedrock = Mean_bdr) %>%

rename(iSDA_SAND_conc = Mean_sand_tot_psa) %>%

rename(iSDA_SILT_conc = Mean_silt_tot_psa) %>%

rename(iSDA_CLAY_conc = Mean_clay_tot_psa) %>%

rename(iSDA_FE_Bulk_dens = Mean_db_od) %>%

rename(iSDA_log_C_tot = Mean_log.c_tot) %>%

rename(iSDA_log_Ca = Mean_log.ca_mehlich3) %>%

rename(iSDA_log_eCEC = Mean_log.ecec.f) %>%

rename(iSDA_log_Fe = Mean_log.fe_mehlich3) %>%

rename(iSDA_log_K = Mean_log.k_mehlich3) %>%

rename(iSDA_log_Mg = Mean_log.mg_mehlich3) %>%

rename(iSDA_log_N = Mean_log.n_tot_ncs) %>%

rename(iSDA_log_SOC = Mean_log.oc) %>%

rename(iSDA_log_P = Mean_log.p_mehlich3) %>%

rename(iSDA_log_S = Mean_log.s_mehlich3) %>%

rename(iSDA_pH = Mean_ph_h2o) %>%

# Renaming iSDA soil texture values based on the guide according to iSDA

rowwise() %>%

mutate(Texture_class_20cm_descrip =

case_when(

as.numeric(Mode_texture.class) == 1 ~ "Clay",

as.numeric(Mode_texture.class) == 2 ~ "Silty_clay",

as.numeric(Mode_texture.class) == 3 ~ "Sandy_clay",

as.numeric(Mode_texture.class) == 4 ~ "Loam",

as.numeric(Mode_texture.class) == 5 ~ "Silty_clay_loam",

as.numeric(Mode_texture.class) == 6 ~ "Sandy_clay_loam",

as.numeric(Mode_texture.class) == 7 ~ "Loam",

as.numeric(Mode_texture.class) == 8 ~ "Silt_loam",

as.numeric(Mode_texture.class) == 9 ~ "Sandy_loam",

as.numeric(Mode_texture.class) == 10 ~ "Silt",

as.numeric(Mode_texture.class) == 11 ~ "Loam_sand",

as.numeric(Mode_texture.class) == 12 ~ "Sand",

as.numeric(Mode_texture.class) == 255 ~ "NODATA")) %>%

# Renaming the ASTER variables, slope and elevation

rename(ASTER_Slope = Slope.mean) %>%

rename(ASTER_Altitude = Altitude.mean) %>%

# Renaming agroecological zone 16 simple

mutate(AEZ16s = AEZ16simple)

# Error: C stack usage 7969312 is too close to the limit

Fixing empty cells in the Tree column

Show code

agrofor.biophys.rename <- agrofor.biophys.rename %>%

#dplyr::filter_all(all_vars(!is.infinite(.))) %>%

dplyr::mutate(across(Tree, ~ ifelse(. == "", NA, as.character(.)))) %>%

mutate_if(is.character, factor) %>%

dplyr::mutate(across(Tree, ~ ifelse(. == "NA", "Unknown_tree", as.character(.)))) %>%

dplyr::mutate(across(AEZ16s, ~ ifelse(. == "NA", "Unknown_AEZ16", as.character(.)))) %>%

replace_na(list(Tree = "Unknown_Tree", AEZ16s = "Unknown_AEZ16s"))

# Error: C stack usage 7969312 is too close to the limit

There are currently several site types we will change this so we only have three site types. A Site.Type called “Farm,” another called “Station” and a third one called “Other,” that will collect site types that are neither Farm nor Station. We will also remove the site type “Greenhouse,” as it does not make sense in an agroforestry setting. It remains unknown where the ERA agroforestry observations with “Greenhouse” as site type come from..

Renaming the column Site.Types

Show code

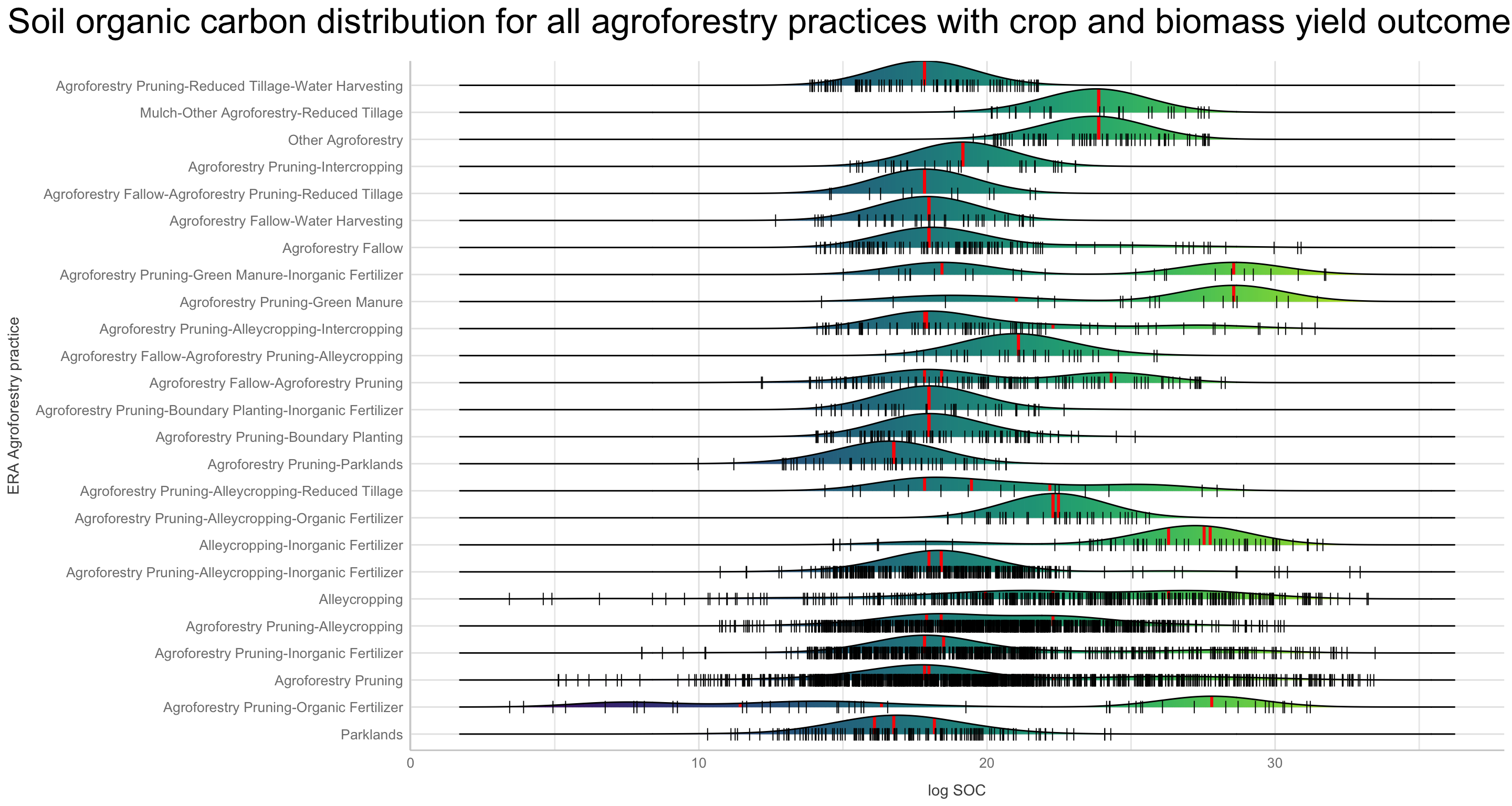

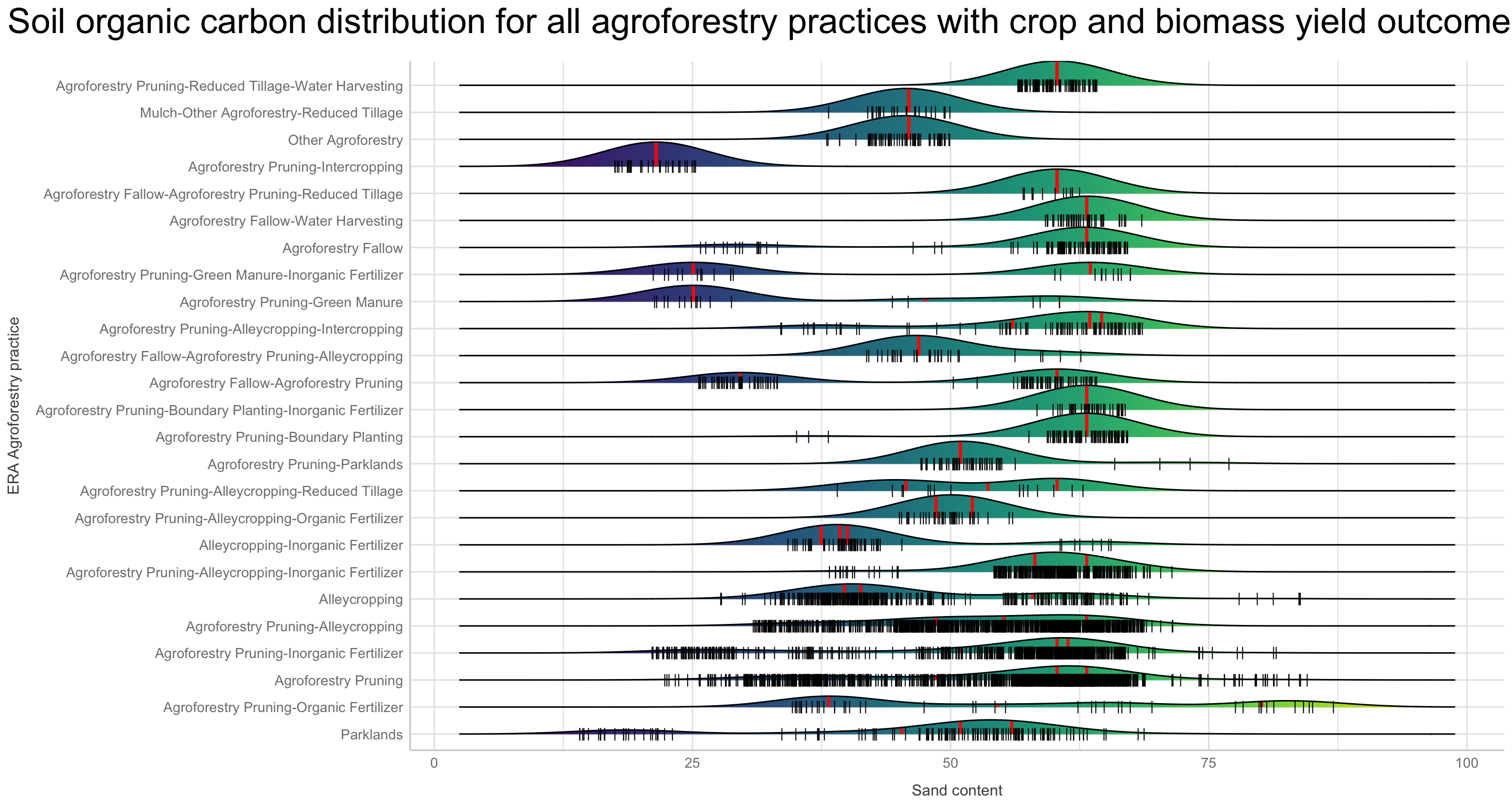

Selecting ERA Agroforestry outcomes; Crop and Biomass Yield

For this machine learning analysis we will only focus on agroforestry data that have crop and biomass yield as outcomes. This is because it is not meaningful to compare response ratios across the diverse set of ERA outcomes as the basis for calculating MeanC and MeanT might vary tremendously. Hence due to that fact we are subsetting on Out.SubInd for crop and biomass yield.

agrofor.biophys.outcome.yield <- agrofor.biophys.rename %>%

dplyr::filter(Out.SubInd == "Crop Yield" | Out.SubInd == "Biomass Yield")

Selecting features for modelling and calculating outcome variable, response ratios

we are going to select the features for our modelling and machine learning exercise. We are selecting MeanC and MeanT as we will convert these to one outcome feature.

Selecting feature columns for modelling

agrofor.biophys.selected.features <- data.table::copy(agrofor.biophys.outcome.yield) %>%

dplyr::select(MeanC, # Outcome variable(s)

MeanT,

ID, # ID variable (will not be included as predictor)

AEZ16s, # ID variable (will not be included as predictor)

PrName, # ID variable (will not be included as predictor)

PrName.Code, # ID variable (will not be included as predictor)

SubPrName, # ID variable (will not be included as predictor)

Product, # ID variable (will not be included as predictor)

Out.SubInd, # ID variable (will not be included as predictor)

Out.SubInd.Code, # ID variable (will not be included as predictor)

Country, # ID variable (will not be included as predictor)

Latitude, # ID variable (will not be included as predictor, but as a basis for the spatial/cluster cross-validation)

Longitude, # ID variable (will not be included as predictor, but as a basis for the spatial/cluster cross-validation)

Site.Type, # Site_Type will be included as predictor, to see how much it explains the outcome

Tree, # Tree will be included as predictor, to see how much it explains the outcome

Bio01_MT_Annu,

Bio02_MDR,

Bio03_Iso,

Bio04_TS,

Bio05_TWM,

Bio06_MinTCM,

Bio07_TAR,

Bio08_MT_WetQ,

Bio09_MT_DryQ,

Bio10_MT_WarQ,

Bio11_MT_ColQ,

Bio12_Pecip_Annu,

Bio13_Precip_WetM,

Bio14_Precip_DryM,

Bio15_Precip_S,

Bio16_Precip_WetQ,

Bio17_Precip_DryQ,

iSDA_Depth_to_bedrock,

iSDA_SAND_conc,

iSDA_CLAY_conc,

iSDA_SILT_conc,

iSDA_FE_Bulk_dens,

iSDA_log_C_tot,

iSDA_log_Ca,

iSDA_log_eCEC,

iSDA_log_Fe,

iSDA_log_K,

iSDA_log_Mg,

iSDA_log_N,

iSDA_log_SOC,

iSDA_log_P,

iSDA_log_S,

iSDA_pH,

ASTER_Altitude,

ASTER_Slope

) %>%

relocate(ID, PrName, Out.SubInd, Product, AEZ16s, Country, Site.Type, MeanC, MeanT, Tree)

dim(agrofor.biophys.selected.features)

# [1] 4578 48

class(agrofor.biophys.selected.features)

# [1] "rowwise_df" "tbl_df" "tbl" "data.frame"

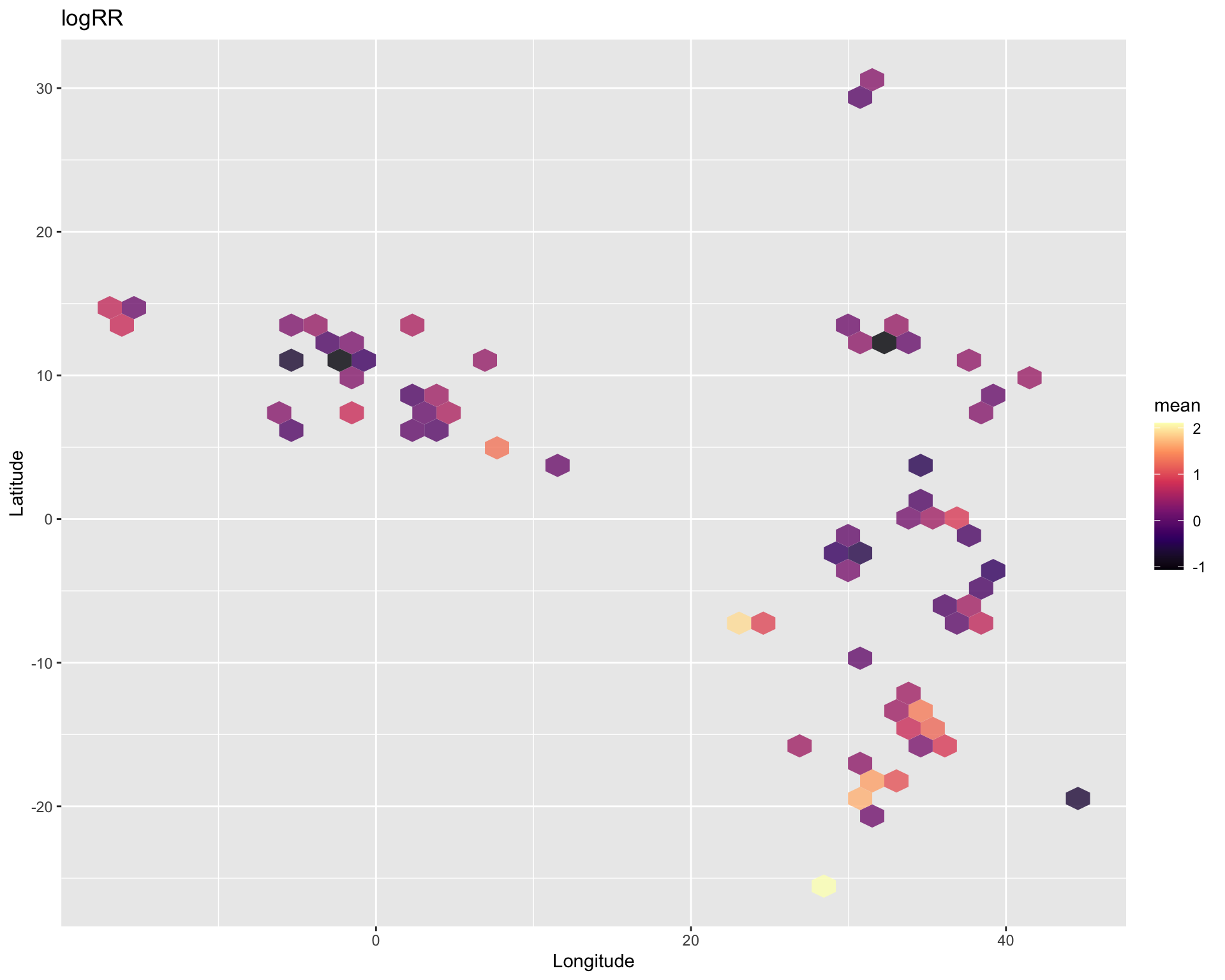

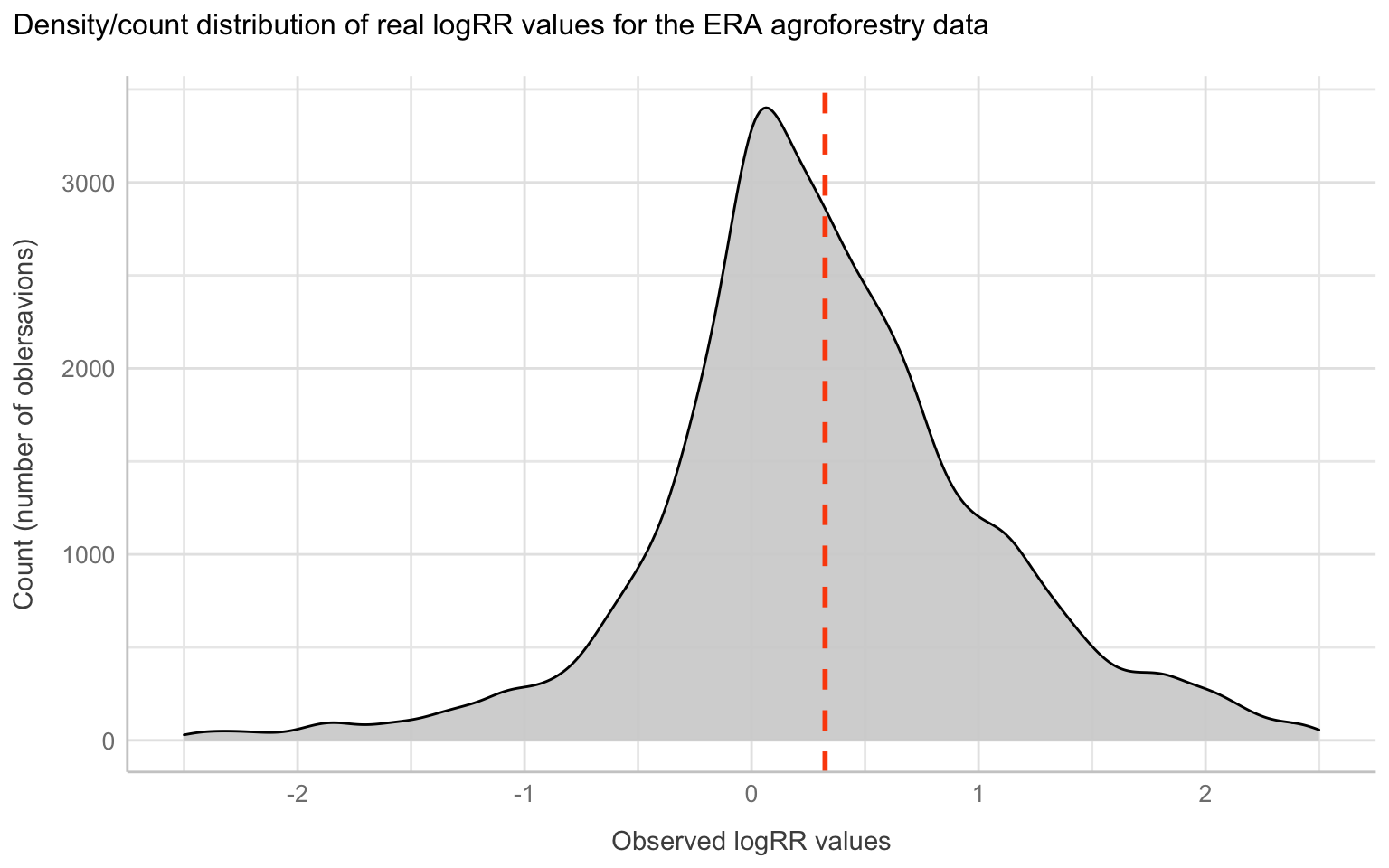

Calculating response ratios for each observation

We are going to compute a simple response ratio as RR = (MeanT/MeanC) and a log-transformed response ratio as logRR = (log(MeanT/MeanC)). The later will be used as our outcome variable.

agrofor.biophys.modelling.data <- data.table::copy(agrofor.biophys.selected.features) %>%

#subset(., select = which(!duplicated(names(.)))) %>% # First, removing duplicated columns

dplyr::mutate(RR = (MeanT/MeanC)) %>%

dplyr::mutate(logRR = (log(MeanT/MeanC)))

dim(agrofor.biophys.modelling.data)

# [1] 4578 52

Lets have a look at our modelling data with selected features and our outcome, calculated response ratios

Show code

agrofor.biophys.modelling.data <- readRDS(file = here::here("TidyMod_OUTPUT","agrofor.biophys.modelling.data.RDS"))

rmarkdown::paged_table(agrofor.biophys.modelling.data)

ID <dbl> | PrName <fct> | Out.SubInd <fct> | Product <fct> | AEZ16s <fct> | Country <fct> | Site.Type <fct> | MeanC <dbl> | |

|---|---|---|---|---|---|---|---|---|

| 1660 | Parklands | Biomass Yield | Pearl Millet | Warm.Semiarid | Niger | Farm | 3.450000e+00 | |

| 1660 | Parklands | Biomass Yield | Pearl Millet | Warm.Semiarid | Niger | Farm | 4.550000e+00 | |

| 1675 | Agroforestry Pruning-Organic Fertilizer | Biomass Yield | Pearl Millet | Warm.Semiarid | Niger | Farm | 2.204370e+00 | |

| 1675 | Agroforestry Pruning-Organic Fertilizer | Biomass Yield | Pearl Millet | Warm.Semiarid | Niger | Farm | 2.204370e+00 | |

| 1675 | Agroforestry Pruning-Organic Fertilizer | Biomass Yield | Pearl Millet | Warm.Semiarid | Niger | Farm | 2.204370e+00 | |

| 1675 | Agroforestry Pruning | Biomass Yield | Pearl Millet | Warm.Semiarid | Niger | Farm | 4.140900e+00 | |

| 1675 | Agroforestry Pruning | Biomass Yield | Pearl Millet | Warm.Semiarid | Niger | Farm | 5.458400e+00 | |

| 1675 | Agroforestry Pruning | Biomass Yield | Pearl Millet | Warm.Semiarid | Niger | Farm | 5.458400e+00 | |

| 1675 | Agroforestry Pruning-Organic Fertilizer | Crop Yield | Pearl Millet | Warm.Semiarid | Niger | Farm | 5.770000e-01 | |

| 1675 | Agroforestry Pruning-Organic Fertilizer | Crop Yield | Pearl Millet | Warm.Semiarid | Niger | Farm | 5.770000e-01 |

Show code

agrofor.biophys.modelling.data %>% group_by()

# A tibble: 4,578 × 52

ID PrName Out.SubInd Product AEZ16s Country Site.Type MeanC

<dbl> <fct> <fct> <fct> <fct> <fct> <fct> <dbl>

1 1660 Parklands Biomass Yi… Pearl … Warm.… Niger Farm 3.45

2 1660 Parklands Biomass Yi… Pearl … Warm.… Niger Farm 4.55

3 1675 Agrofores… Biomass Yi… Pearl … Warm.… Niger Farm 2.20

4 1675 Agrofores… Biomass Yi… Pearl … Warm.… Niger Farm 2.20

5 1675 Agrofores… Biomass Yi… Pearl … Warm.… Niger Farm 2.20

6 1675 Agrofores… Biomass Yi… Pearl … Warm.… Niger Farm 4.14

7 1675 Agrofores… Biomass Yi… Pearl … Warm.… Niger Farm 5.46

8 1675 Agrofores… Biomass Yi… Pearl … Warm.… Niger Farm 5.46

9 1675 Agrofores… Crop Yield Pearl … Warm.… Niger Farm 0.577

10 1675 Agrofores… Crop Yield Pearl … Warm.… Niger Farm 0.577

# … with 4,568 more rows, and 44 more variables: MeanT <dbl>,

# Tree <fct>, PrName.Code <fct>, SubPrName <fct>,

# Out.SubInd.Code <fct>, Latitude <dbl>, Longitude <dbl>,

# Bio01_MT_Annu <dbl>, Bio02_MDR <dbl>, Bio03_Iso <dbl>,

# Bio04_TS <dbl>, Bio05_TWM <dbl>, Bio06_MinTCM <dbl>,

# Bio07_TAR <dbl>, Bio08_MT_WetQ <dbl>, Bio09_MT_DryQ <dbl>,

# Bio10_MT_WarQ <dbl>, Bio11_MT_ColQ <dbl>, …Looks pretty good, however we have many empty cells for the Tree column that needs to be fixed. We have a data.frame (rowwise_df, tbl_df, tbl, data.frame) with 4578 observations and 52 features. Four of these features are our MeanC and MeanT that we will use to calculate the response ratio (RR and logRR). The RR and/or logRR will be our outcome feature, also called response variable. Hence, we have 52 - (4 + 11) = 37 predictors of which 35 are numeric and two (Tree and Site.Type are categorical/factors).

Creating data with only numeric predictors

A separate dataset with only numeric predictors and our outcome (RR and logRR) can be helpful when performing some important exploitative data analysis.

agrofor.biophys.modelling.data.numeric <- data.table::copy(agrofor.biophys.modelling.data) %>%

dplyr::select(RR,

logRR,

Bio01_MT_Annu,

Bio02_MDR,

Bio03_Iso,

Bio04_TS,

Bio05_TWM,

Bio06_MinTCM,

Bio07_TAR,

Bio08_MT_WetQ,

Bio09_MT_DryQ,

Bio10_MT_WarQ,

Bio11_MT_ColQ,

Bio12_Pecip_Annu,

Bio13_Precip_WetM,

Bio14_Precip_DryM,

Bio15_Precip_S,

Bio16_Precip_WetQ,

Bio17_Precip_DryQ,

iSDA_Depth_to_bedrock,

iSDA_SAND_conc,

iSDA_CLAY_conc,

iSDA_SILT_conc,

iSDA_FE_Bulk_dens,

iSDA_log_C_tot,

iSDA_log_Ca,

iSDA_log_eCEC,

iSDA_log_Fe,

iSDA_log_K,

iSDA_log_Mg,

iSDA_log_N,

iSDA_log_SOC,

iSDA_log_P,

iSDA_log_S,

iSDA_pH,

ASTER_Altitude,

ASTER_Slope

)

Explorotive Data Analysis

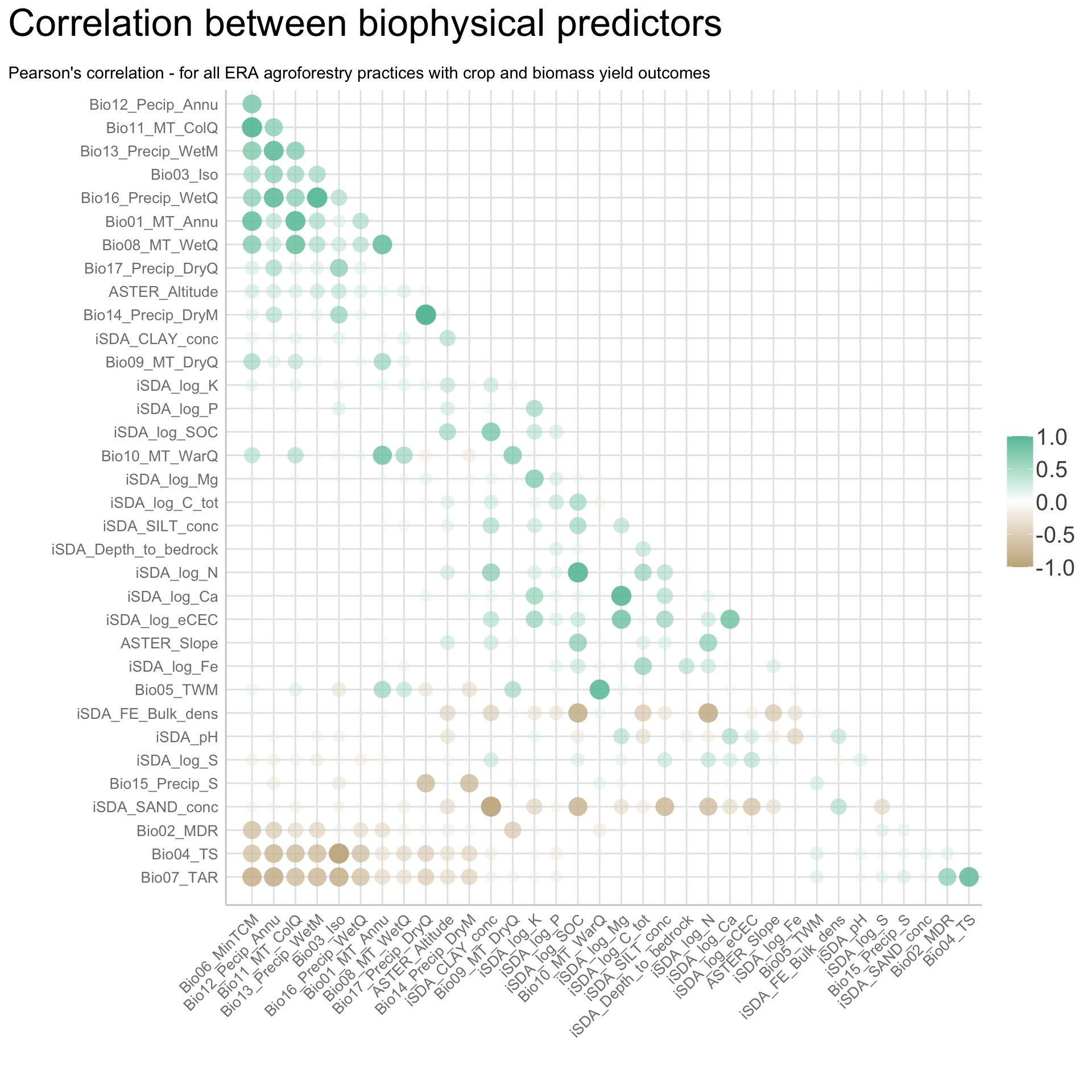

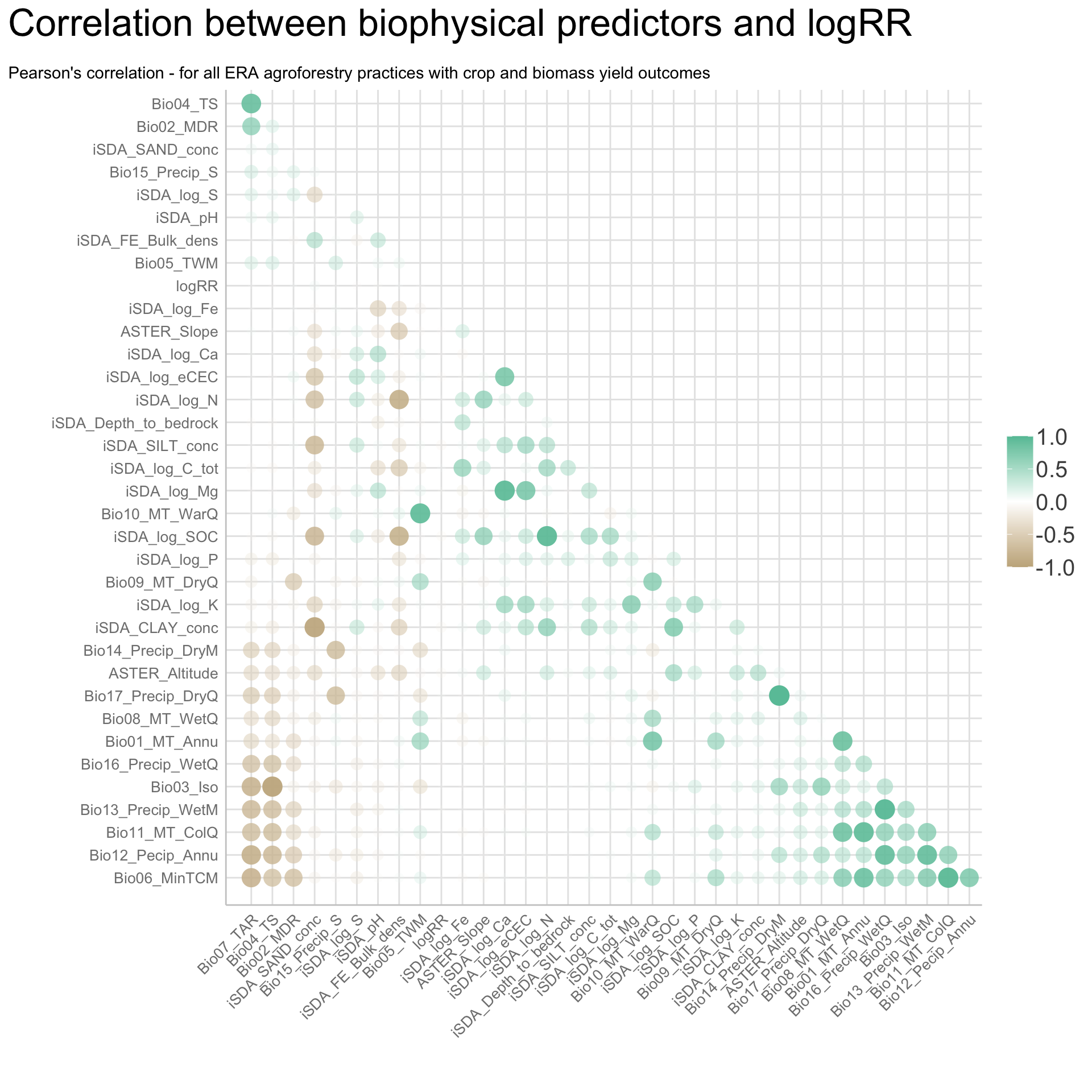

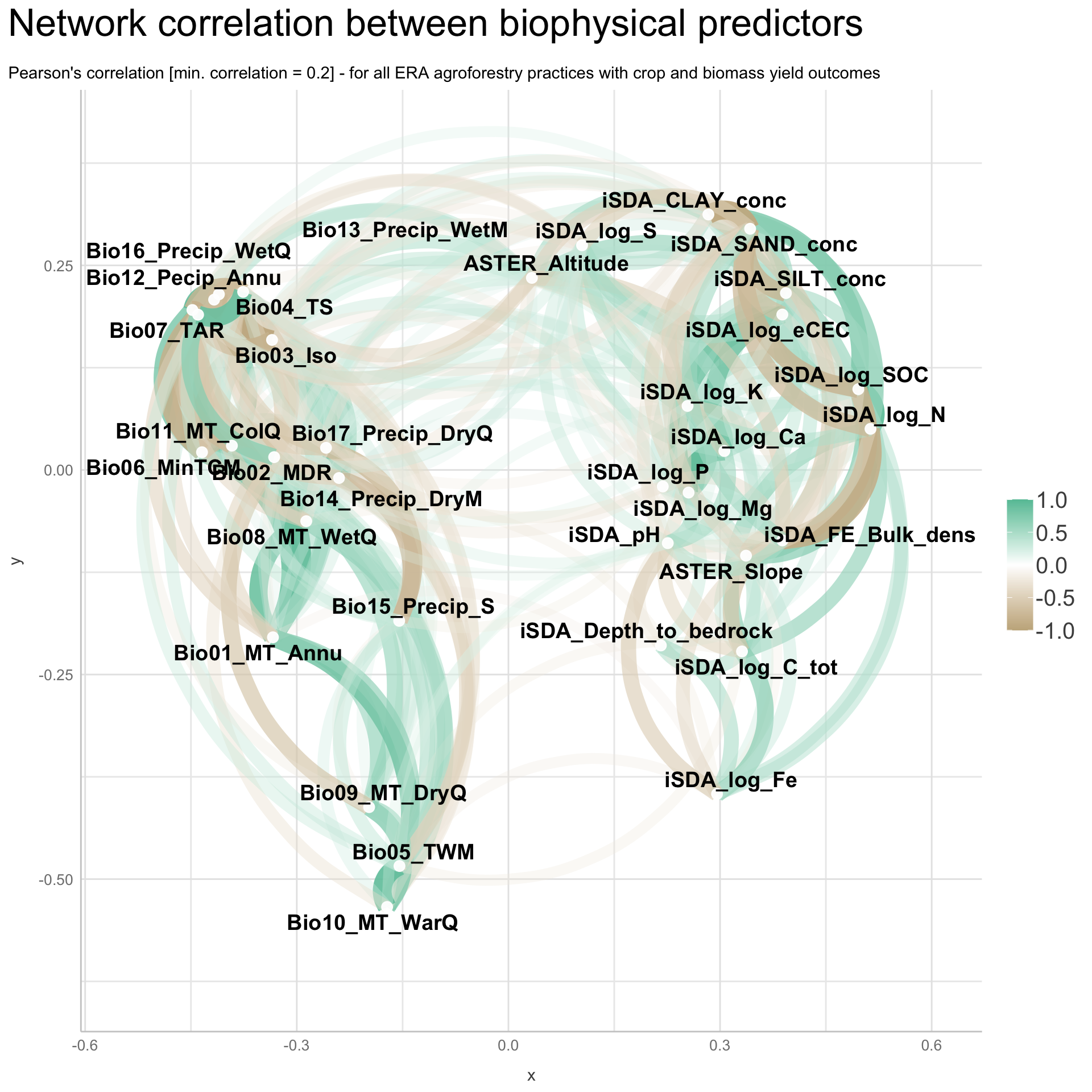

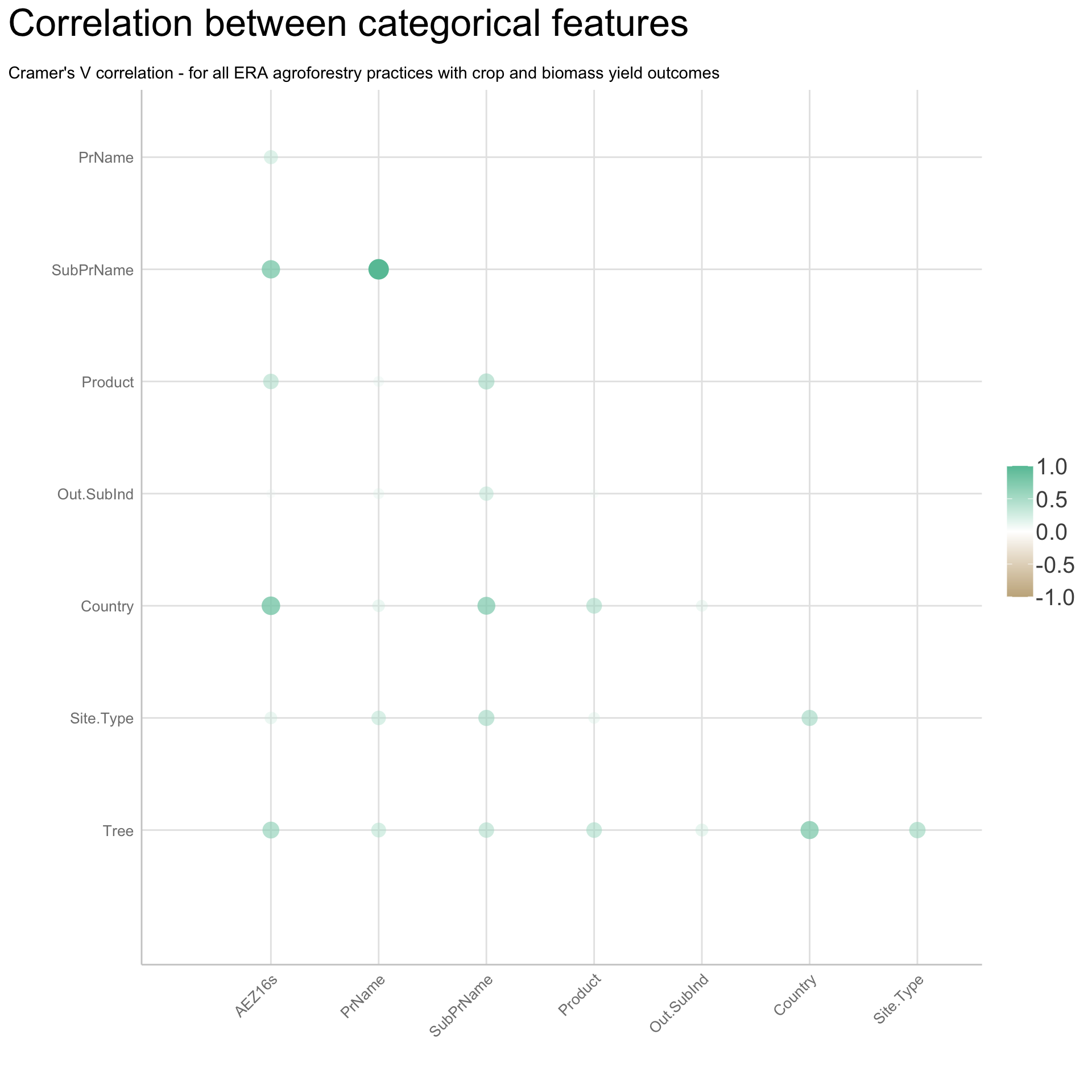

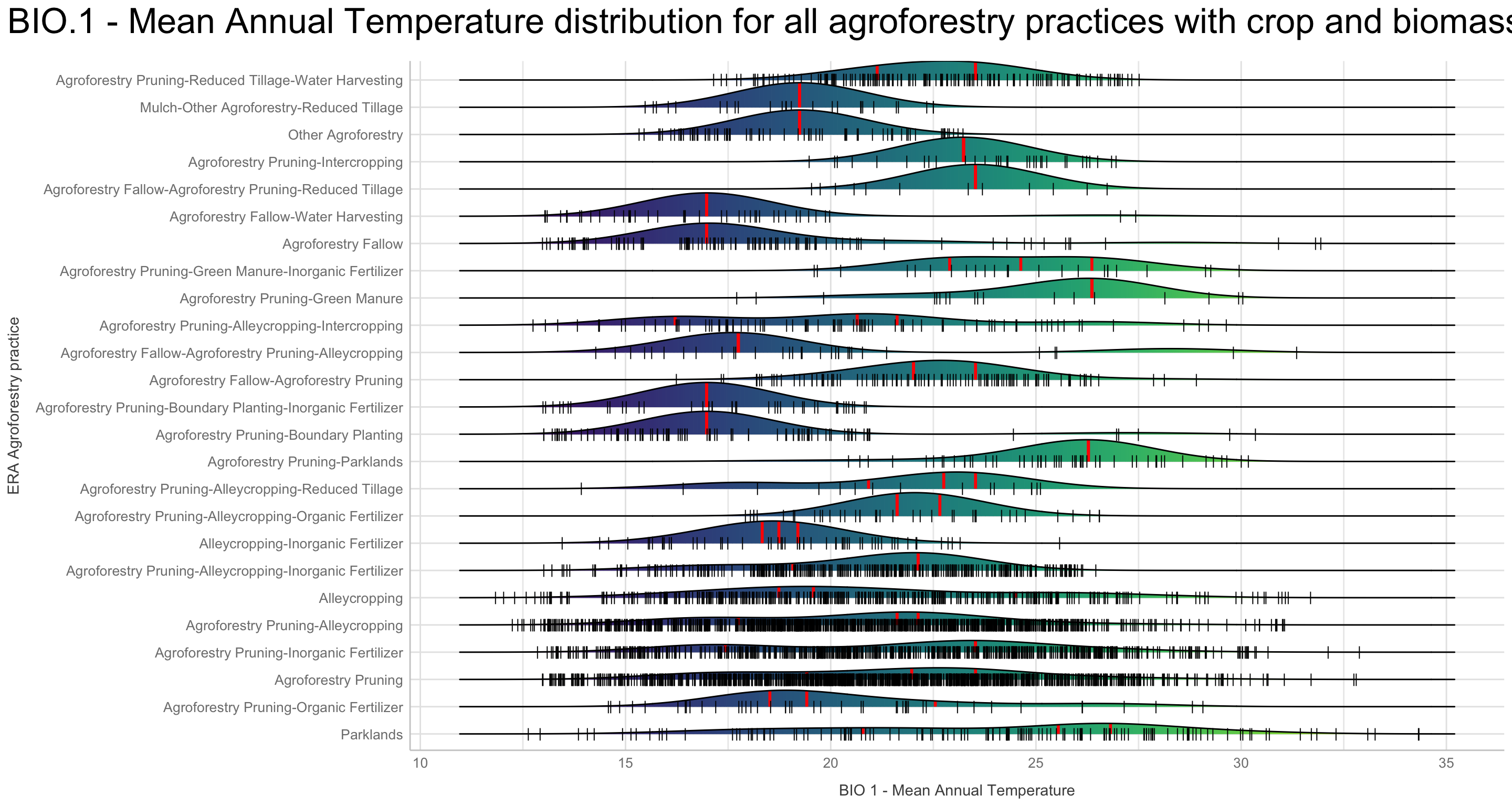

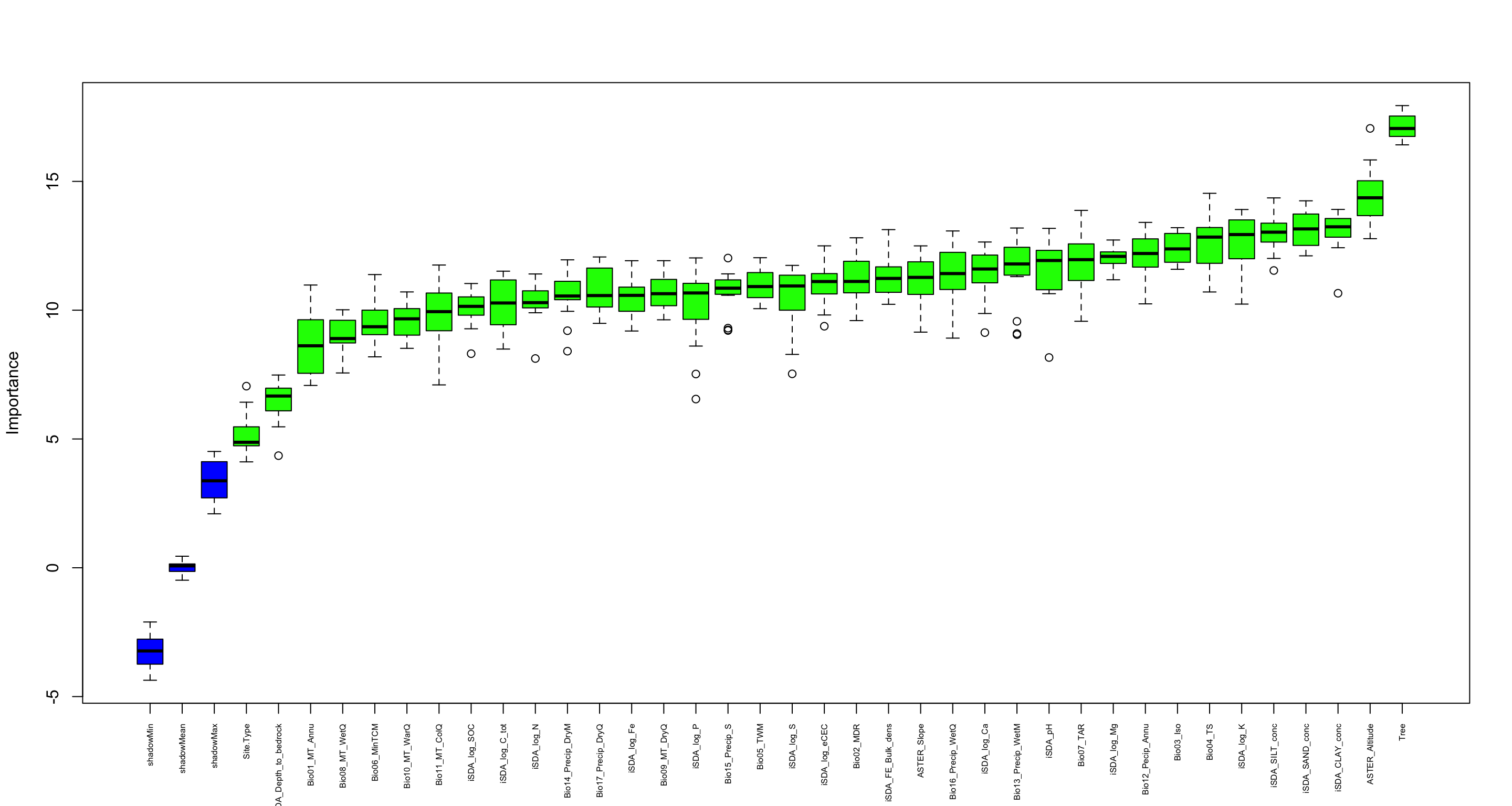

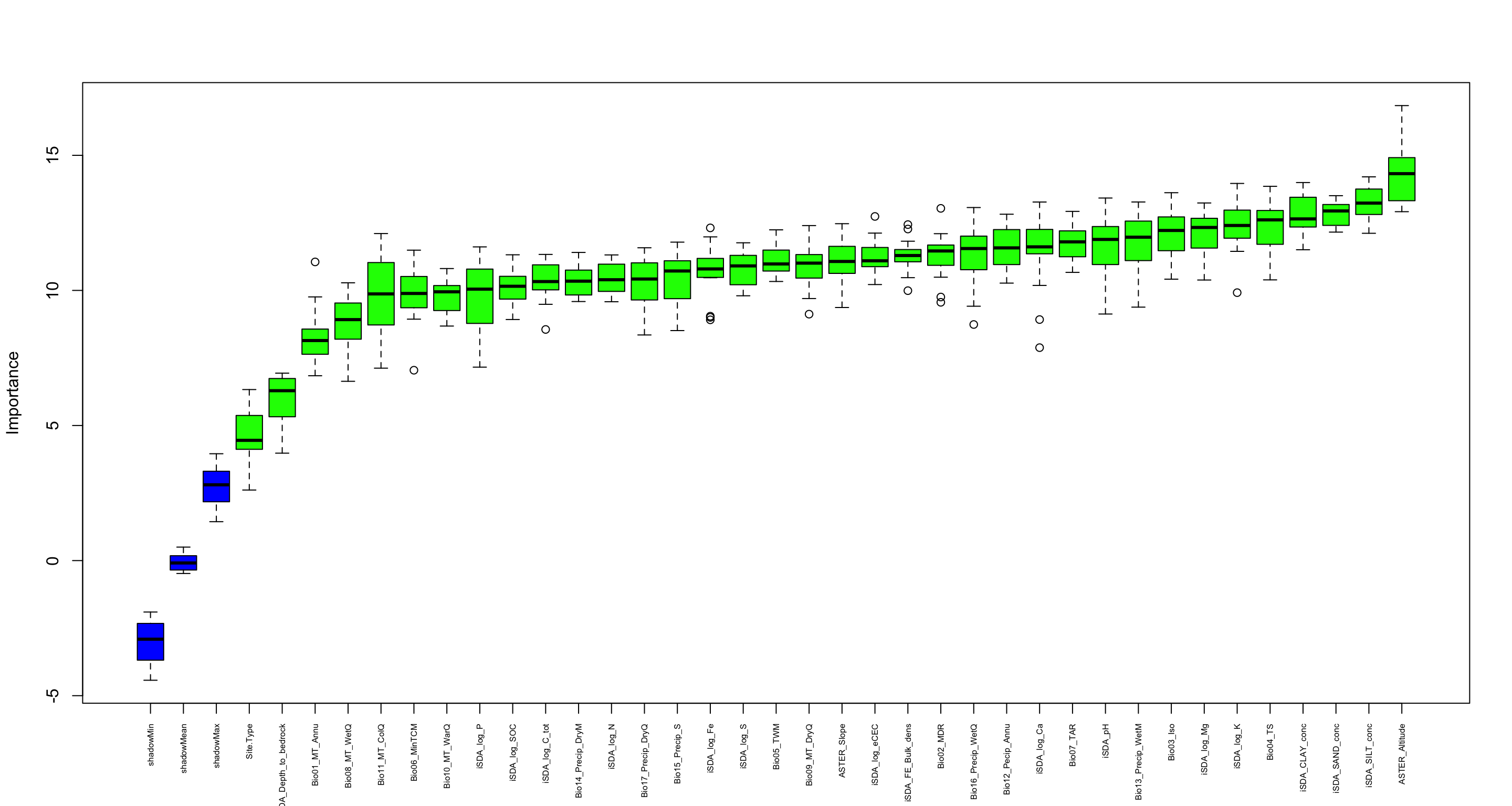

We are going to perform some exploratory data analysis (EDA) of the ERA agroforestry data. EDA is a critical pre-modelling step in order to make initial investigations so as to discover patterns, to spot anomalies, test hypothesis and to check assumptions. We are going to do this on the agroforestry data with the help of visualisations and summary statistics. Performing exploratory data analysis prior to conducting any machine learning is always a good idea, as it will help to get an understanding of the data in terms of relationships and how different predictor features are distributed, how they relate to the outcome (logRR) and how they relate to each other. Explorative data analysis is often an iterative process consisting of posing a question to the data, review the data, and develop further questions to investigate it before beginning the modelling and machine learning development. Hence, we need to conduct a proper EDA of our agroforestry data as it is fundamental to our later machine learning-based modelling analysis. We will first visually explore our agroforestry data using the DataExplorer package to generate histograms, density plots and scatter plots of the biophysical predictors. We will examine the results critically with our later machine learning approach in mind. We will continue the EDA by looking at the correlations between our biophysical predictors - and between our predictors and the outcome (logRR) to see if we can make any initial pattern recognition manually. Overall we will use the outcome of our EDA to identify required pre-processing steps needed for our machine learning models (e.g. excluding highly correlated predictors or normalizing some features for better model performance).

We will go through these nine steps in the EDA:

1) Missing data exploration

2) Density distributions of features i) of continuous predictors (all biophysical) i) of outcome (RR and logRR)

3) Histograms of features

- of continuous predictors (all biophysical)

- of outcome (RR and logRR)

4) Scatter plots

- of continuous predictors (all biophysical)

- of outcome (RR and logRR)

5) Quantile-quantile plots

- of continuous predictors (all biophysical)

- of outcome (RR and logRR)

6) Discritization of target feature, logRR

- Boxplots of predictors vs. discritized logRR**

7) PCA and variable importance

- of continuous predictors (all biophysical)

8) Correlation of features

- of numeric predictors (all biophysical)

- of outcome (RR and logRR)

9) Multiple pairwise t-test using discritized logRR values

- of continuous predictors (all biophysical)

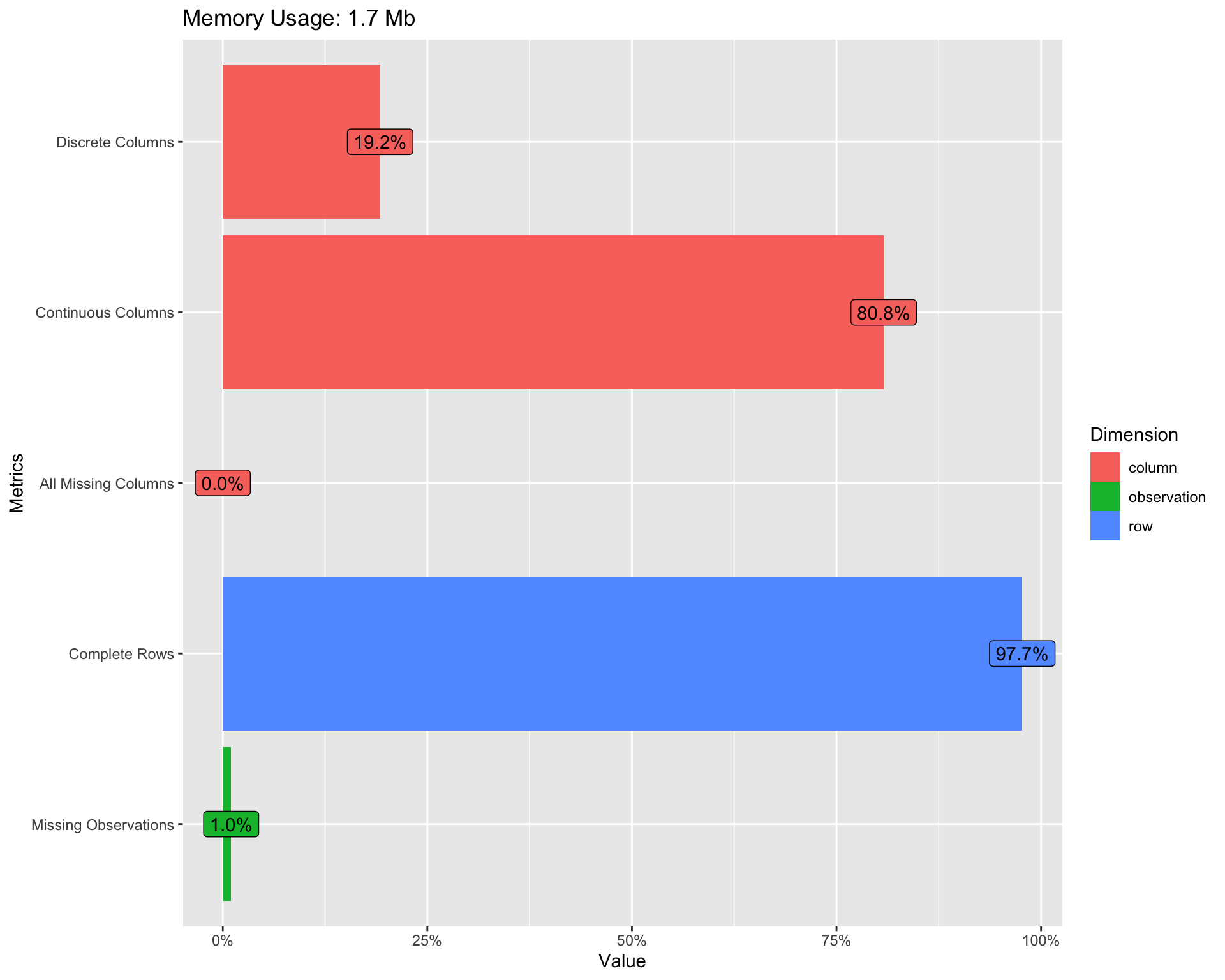

Lets first familiarise ourself with the agroforestry data using the functions introduce() and plot_intro() from DataExplorer package.

Show code

DataExplorer::introduce(agrofor.biophys.modelling.data) %>% glimpse()

Rows: 1

Columns: 9

Rowwise:

$ rows <int> 4578

$ columns <int> 52

$ discrete_columns <int> 10

$ continuous_columns <int> 42

$ all_missing_columns <int> 0

$ total_missing_values <int> 2436

$ complete_rows <int> 4473

$ total_observations <int> 238056

$ memory_usage <dbl> 1783016Show code

DataExplorer::plot_intro(agrofor.biophys.modelling.data)

(#fig:Re-introduction to the agroforestry data plot)Re-introducing the agroforestry data

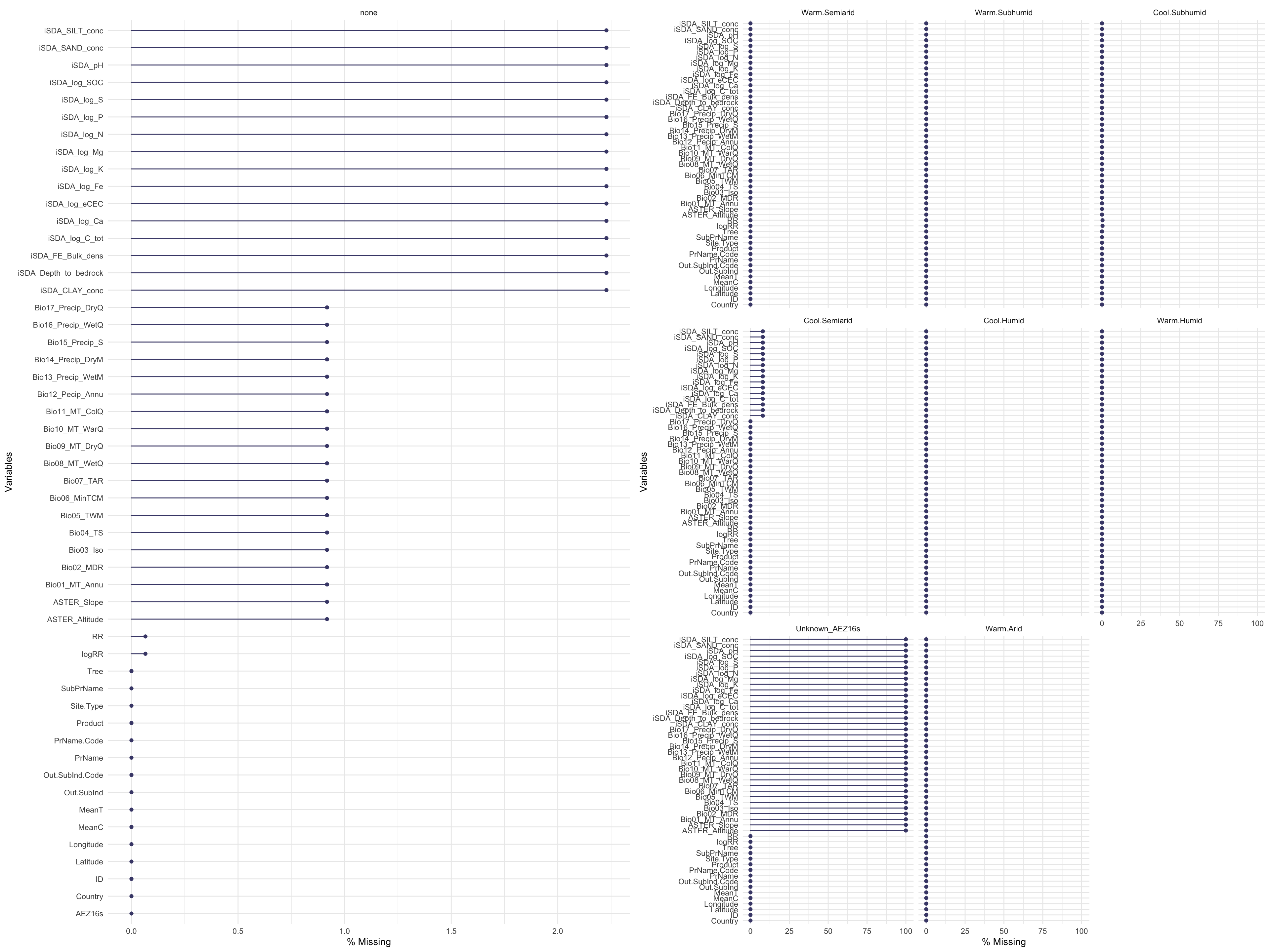

Missing data exploration

As part of the EDA of data cleaning we would need to deal with missing data in our agroforestry data. To get a better understanding of our missing data, such as “where it is” and how it manifest it self. We are going to make use of the naniar package, that is excellent for familiarixing our selfs with the missingness of the data.

First, lets view the number and percentage of missing values in each combination of grouped levels of practices (PrName) and outcomes (Out.SubInd).

Show code

agrofor.biophys.modelling.data.missing <- agrofor.biophys.modelling.data %>%

dplyr::group_by(PrName, Out.SubInd, AEZ16s) %>%

naniar::miss_var_summary() %>%

arrange(desc(n_miss))

Show code

rmarkdown::paged_table(agrofor.biophys.modelling.data.missing)

PrName <fct> | Out.SubInd <fct> | AEZ16s <fct> | |

|---|---|---|---|

| Agroforestry Pruning-Reduced Tillage-Water Harvesting | Crop Yield | Cool.Semiarid | |

| Agroforestry Pruning-Reduced Tillage-Water Harvesting | Crop Yield | Cool.Semiarid | |

| Agroforestry Pruning-Reduced Tillage-Water Harvesting | Crop Yield | Cool.Semiarid | |

| Agroforestry Pruning-Reduced Tillage-Water Harvesting | Crop Yield | Cool.Semiarid | |

| Agroforestry Pruning-Reduced Tillage-Water Harvesting | Crop Yield | Cool.Semiarid | |

| Agroforestry Pruning-Reduced Tillage-Water Harvesting | Crop Yield | Cool.Semiarid | |

| Agroforestry Pruning-Reduced Tillage-Water Harvesting | Crop Yield | Cool.Semiarid | |

| Agroforestry Pruning-Reduced Tillage-Water Harvesting | Crop Yield | Cool.Semiarid | |

| Agroforestry Pruning-Reduced Tillage-Water Harvesting | Crop Yield | Cool.Semiarid | |

| Agroforestry Pruning-Reduced Tillage-Water Harvesting | Crop Yield | Cool.Semiarid |

Now we can visually assess where there is missing data. Lets also make one where we facet based on agroecological zones as it is interesting to see in which agroecological zones we miss data.

agrofor.biophys.gg_miss_var.all <- naniar::gg_miss_var(agrofor.biophys.modelling.data,

show_pct = TRUE,

facet = "none")

agrofor.biophys.gg_miss_var.AEZ <- naniar::gg_miss_var(agrofor.biophys.modelling.data,

show_pct = TRUE,

facet = AEZ16s)

# Warning: It is deprecated to specify `guide = FALSE` to remove a guide. Please use `guide = "none"` instead.

Show code

agrofor.biophys.gg_miss_var.combined <- cowplot::plot_grid(agrofor.biophys.gg_miss_var.all,

agrofor.biophys.gg_miss_var.AEZ)

agrofor.biophys.gg_miss_var.combined

(#fig:Visually assess where we have missing data)Visually assess missing data

Show code

# Warning: It is deprecated to specify `guide = FALSE` to remove a guide. Please use `guide = "none"` instead.

We see that most of data is quite complete however there are some spatially explicit patters for iSDA and WorldClim (BioClim) data. The variable/feature “Tree (species)” is the feature where most data is missing, yet it is only around 20 % of this data we do not have in our agroforestry data. In the faceted plot (by: agroecological zone), we see that there is a great deal of overlap between where we have missing quantitative data, from either iSDA or WorldClim and the missing qualitative information on agroecological zone - again highlighting that some areas/ERA observations are from areas outside the boundary of the co-variables data have been sourced.

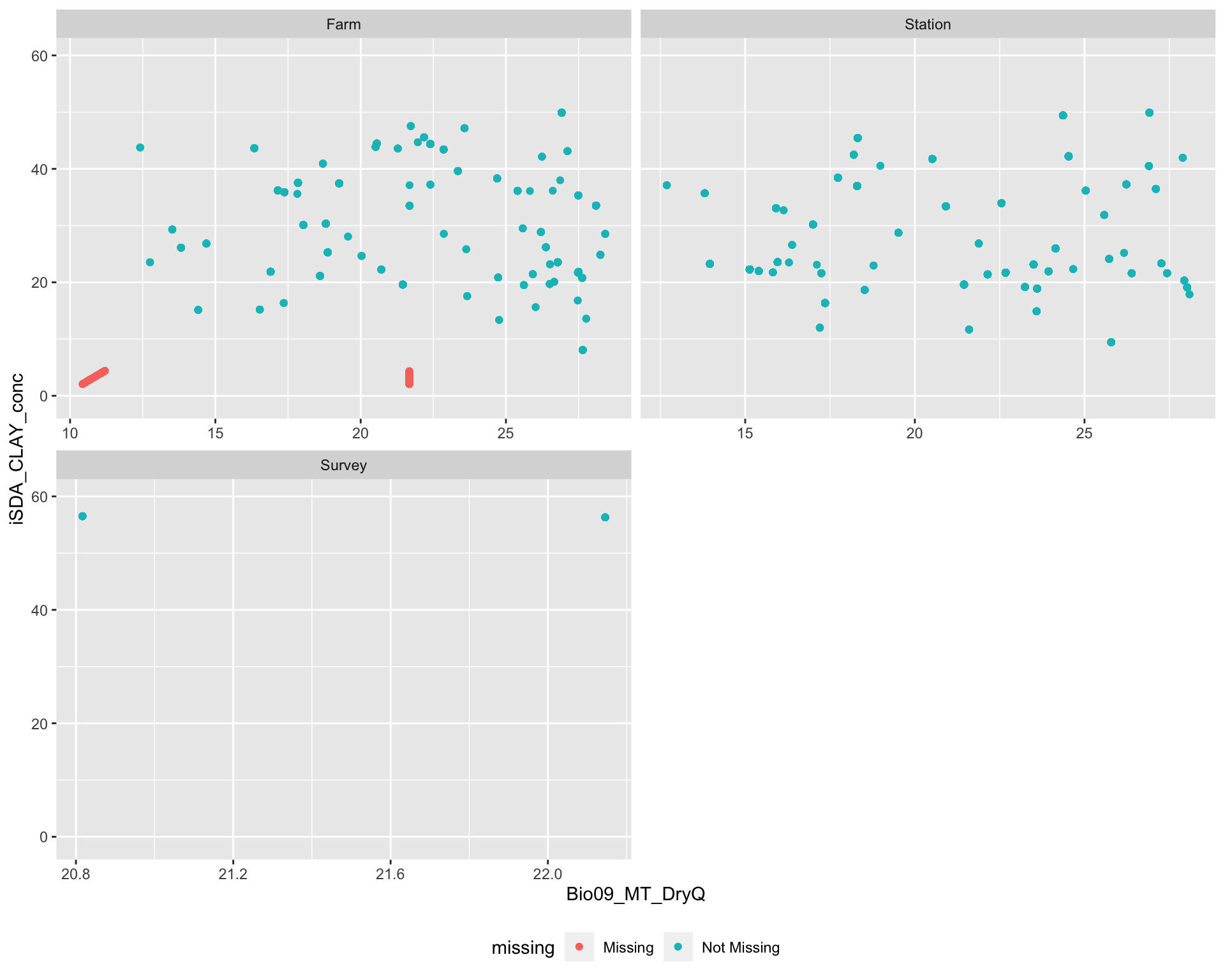

Lets look accros the site types (farm, research station and survey) whether we see any differences in missingness of data. To do this we are using the geom_miss_point() function from the naniar package. We are going to plot a random iSDA feature agains a random BioClim feature - as we now know that if there are missing data from one iSDA (or BioClim) feature it is missing in all the features deriving from the respected data source.

agrofor.biophys.selected.features <- readRDS(file = here::here("TidyMod_OUTPUT","agrofor.biophys.selected.features.RDS"))

miss.check.site <-

ggplot(data = agrofor.biophys.selected.features,

aes(x = Bio09_MT_DryQ,

y = iSDA_CLAY_conc)) +

naniar::geom_miss_point() +

facet_wrap(~Site.Type, ncol = 2, scales = "free_x") +

coord_cartesian(ylim = c(-1,60)) +

theme(legend.position = "bottom")

miss.check.country <-

ggplot(data = agrofor.biophys.selected.features,

aes(x = Bio09_MT_DryQ,

y = iSDA_CLAY_conc)) +

naniar::geom_miss_point() +

facet_wrap(~Country, ncol = 2, scales = "free_x") +

coord_cartesian(ylim = c(-1,60)) +

theme(legend.position = "bottom")

Show code

miss.check.site

(#fig:Plotting differences in missing data distribution accross site types)Missing data accross site type

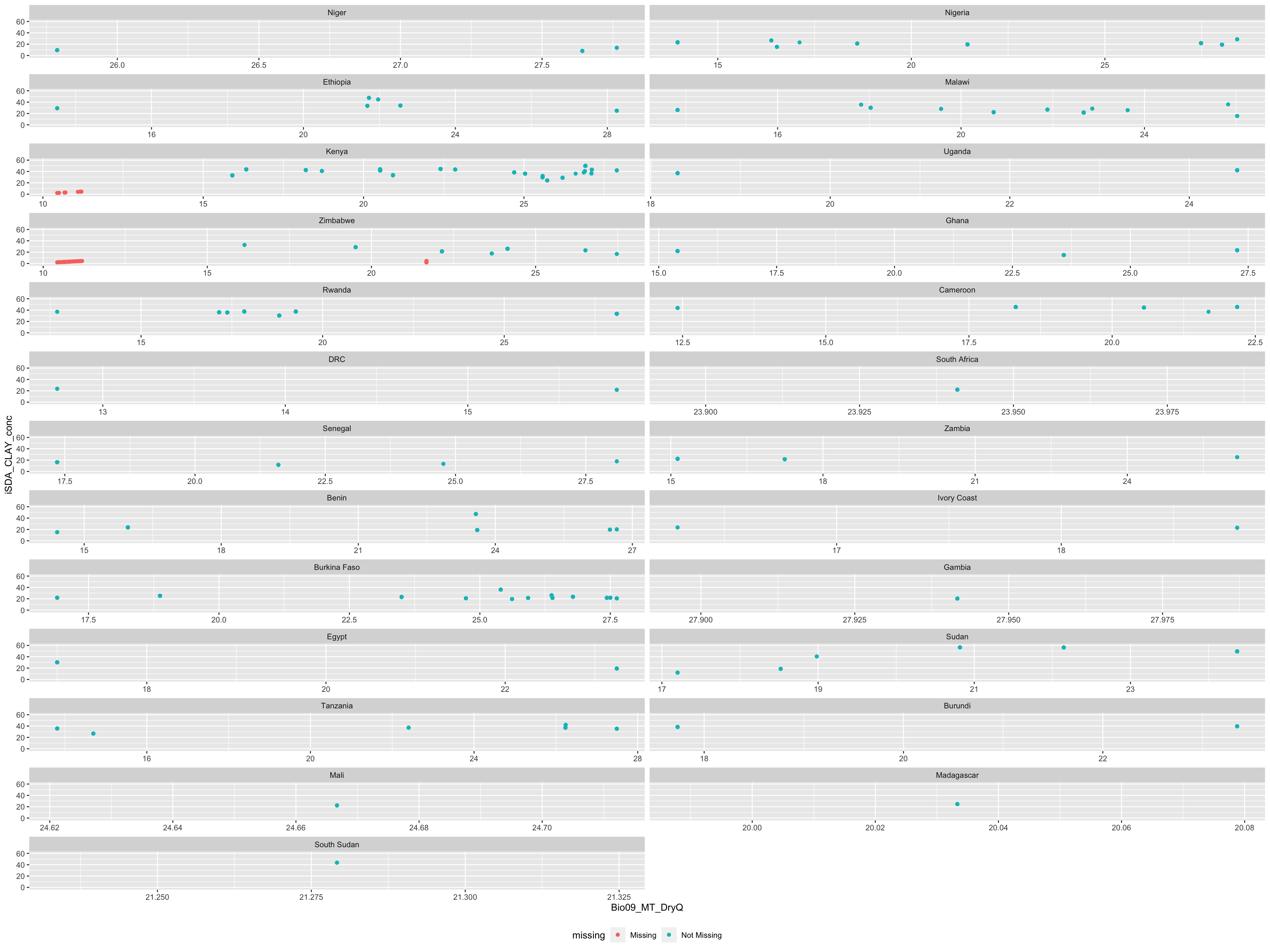

..and what about Countries? Do we see differences in the distribution of missing data across Countries?

Show code

miss.check.country

(#fig:Plotting differences in missing data distribution accross contries)Missing data accross Countries

We see that missing data is present only from research sites on farms. We can use the bind_shadow() and nabular() functions from naniar to keep better track of the missing values in our agroforestry data. We also see that Kenya and Zimbabwe are the two major sources of missing data. This is strange as they are not located outside the countinent.

If we use the nabular table format it helps you manage where missing values are in the dataset and makes it easy to do visualisations where when we split by missingness. Lets look at the missing data overlap between the two co-variables “Bio09_MT_DryQ” and “iSDA_CLAY_conc,” and then visualise a density plot.

Show code

agrofor.biophys.modelling.data.nabular <- naniar::nabular(agrofor.biophys.selected.features)

rmarkdown::paged_table(agrofor.biophys.modelling.data.nabular)

ID <dbl> | PrName <fct> | Out.SubInd <fct> | Product <fct> | AEZ16s <fct> | Country <fct> | Site.Type <fct> | MeanC <dbl> | |

|---|---|---|---|---|---|---|---|---|

| 1660 | Parklands | Biomass Yield | Pearl Millet | Warm.Semiarid | Niger | Farm | 3.450000e+00 | |

| 1660 | Parklands | Biomass Yield | Pearl Millet | Warm.Semiarid | Niger | Farm | 4.550000e+00 | |

| 1675 | Agroforestry Pruning-Organic Fertilizer | Biomass Yield | Pearl Millet | Warm.Semiarid | Niger | Farm | 2.204370e+00 | |

| 1675 | Agroforestry Pruning-Organic Fertilizer | Biomass Yield | Pearl Millet | Warm.Semiarid | Niger | Farm | 2.204370e+00 | |

| 1675 | Agroforestry Pruning-Organic Fertilizer | Biomass Yield | Pearl Millet | Warm.Semiarid | Niger | Farm | 2.204370e+00 | |

| 1675 | Agroforestry Pruning | Biomass Yield | Pearl Millet | Warm.Semiarid | Niger | Farm | 4.140900e+00 | |

| 1675 | Agroforestry Pruning | Biomass Yield | Pearl Millet | Warm.Semiarid | Niger | Farm | 5.458400e+00 | |

| 1675 | Agroforestry Pruning | Biomass Yield | Pearl Millet | Warm.Semiarid | Niger | Farm | 5.458400e+00 | |

| 1675 | Agroforestry Pruning-Organic Fertilizer | Crop Yield | Pearl Millet | Warm.Semiarid | Niger | Farm | 5.770000e-01 | |

| 1675 | Agroforestry Pruning-Organic Fertilizer | Crop Yield | Pearl Millet | Warm.Semiarid | Niger | Farm | 5.770000e-01 |

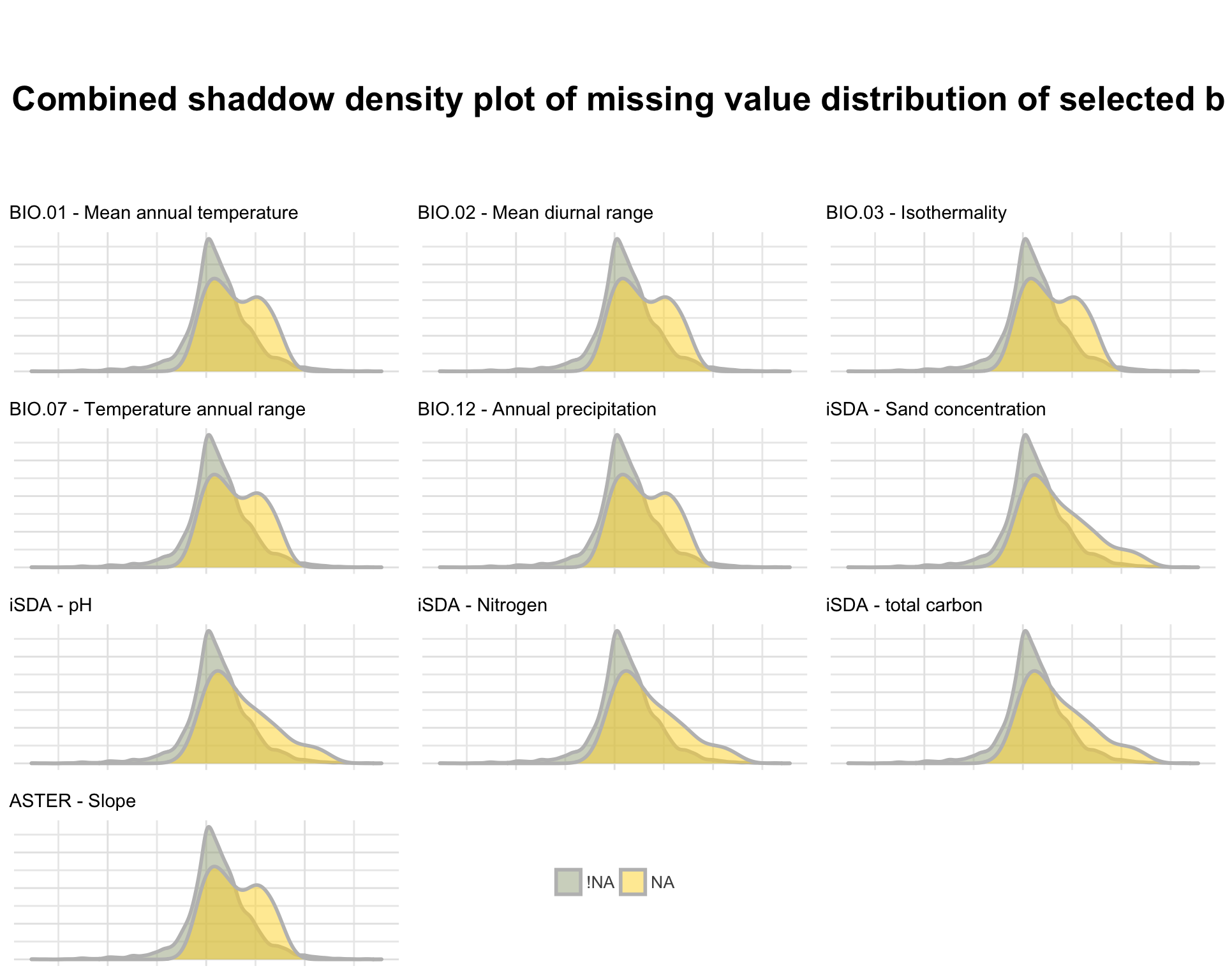

Using the naniar function called bind_shadow() we can generate a visual showing the areas of our agroforestry data where we have missing data. Lets make such a shaddow plot:

Show code

agrofor.biophys.modelling.data.numeric <- readRDS(file = here::here("TidyMod_OUTPUT", "agrofor.biophys.modelling.data.numeric.RDS"))

agrofor.biophys.modelling.data.numeric.shadow <- naniar::bind_shadow(agrofor.biophys.modelling.data.numeric)

# Bio01_MT_Annu

agrofor.biophys.shadow.bind.plot.Bio01 <- agrofor.biophys.modelling.data.numeric.shadow %>%

ggplot(aes(x = logRR,

fill = Bio01_MT_Annu_NA)) +

geom_density(col = "gray", alpha = 0.5, size = 1) +

scale_fill_manual(aesthetics = "fill", values = c("#A2AC8A", "#FED82F")) +

theme_lucid(legend.title.size = 0) +

labs(subtitle = "BIO.01 - Mean annual temperature")

# Bio02_MDR

agrofor.biophys.shadow.bind.plot.Bio02 <- agrofor.biophys.modelling.data.numeric.shadow %>%

ggplot(aes(x = logRR,

fill = Bio02_MDR_NA)) +

geom_density(col = "gray", alpha = 0.5, size = 1) +

scale_fill_manual(aesthetics = "fill", values = c("#A2AC8A", "#FED82F")) +

theme_lucid(legend.title.size = 0) +

labs(subtitle = "BIO.02 - Mean diurnal range")

# Bio03_Iso

agrofor.biophys.shadow.bind.plot.Bio03 <- agrofor.biophys.modelling.data.numeric.shadow %>%

ggplot(aes(x = logRR,

fill = Bio03_Iso_NA)) +

geom_density(col = "gray", alpha = 0.5, size = 1) +

scale_fill_manual(aesthetics = "fill", values = c("#A2AC8A", "#FED82F")) +

theme_lucid(legend.title.size = 0) +

labs(subtitle = "BIO.03 - Isothermality")

# Bio07_TAR

agrofor.biophys.shadow.bind.plot.Bio07 <- agrofor.biophys.modelling.data.numeric.shadow %>%

ggplot(aes(x = logRR,

fill = Bio07_TAR_NA)) +

geom_density(col = "gray", alpha = 0.5, size = 1) +

scale_fill_manual(aesthetics = "fill", values = c("#A2AC8A", "#FED82F")) +

theme_lucid(legend.title.size = 0) +

labs(subtitle = "BIO.07 - Temperature annual range")

# Bio12_Pecip_Annu

agrofor.biophys.shadow.bind.plot.Bio12 <- agrofor.biophys.modelling.data.numeric.shadow %>%

ggplot(aes(x = logRR,

fill = Bio12_Pecip_Annu_NA)) +

geom_density(col = "gray", alpha = 0.5, size = 1) +

scale_fill_manual(aesthetics = "fill", values = c("#A2AC8A", "#FED82F")) +

theme_lucid(legend.title.size = 0) +

labs(subtitle = "BIO.12 - Annual precipitation")

# iSDA_SAND_conc

agrofor.biophys.shadow.bind.plot.sand <- agrofor.biophys.modelling.data.numeric.shadow %>%

ggplot(aes(x = logRR,

fill = iSDA_SAND_conc_NA)) +

geom_density(col = "gray", alpha = 0.5, size = 1) +

scale_fill_manual(aesthetics = "fill", values = c("#A2AC8A", "#FED82F")) +

theme_lucid(legend.title.size = 0) +

labs(subtitle = "iSDA - Sand concentration")

# iSDA_log_C_tot

agrofor.biophys.shadow.bind.plot.totcarbon <- agrofor.biophys.modelling.data.numeric.shadow %>%

ggplot(aes(x = logRR,

fill = iSDA_log_C_tot_NA)) +

geom_density(col = "gray", alpha = 0.5, size = 1) +

scale_fill_manual(aesthetics = "fill", values = c("#A2AC8A", "#FED82F")) +

theme_lucid(legend.title.size = 0) +

labs(subtitle = "iSDA - total carbon")

# iSDA_pH

agrofor.biophys.shadow.bind.plot.pH <- agrofor.biophys.modelling.data.numeric.shadow %>%

ggplot(aes(x = logRR,

fill = iSDA_pH_NA)) +

geom_density(col = "gray", alpha = 0.5, size = 1) +

scale_fill_manual(aesthetics = "fill", values = c("#A2AC8A", "#FED82F")) +

theme_lucid(legend.title.size = 0) +

labs(subtitle = "iSDA - pH")

# iSDA_log_N

agrofor.biophys.shadow.bind.plot.N <- agrofor.biophys.modelling.data.numeric.shadow %>%

ggplot(aes(x = logRR,

fill = iSDA_log_N_NA)) +

geom_density(col = "gray", alpha = 0.5, size = 1) +

scale_fill_manual(aesthetics = "fill", values = c("#A2AC8A", "#FED82F")) +

theme_lucid(legend.title.size = 0) +

labs(subtitle = "iSDA - Nitrogen")

# ASTER_Slope

agrofor.biophys.shadow.bind.plot.slope <- agrofor.biophys.modelling.data.numeric.shadow %>%

ggplot(aes(x = logRR,

fill = ASTER_Slope_NA)) +

geom_density(col = "gray", alpha = 0.5, size = 1) +

scale_fill_manual(aesthetics = "fill", values = c("#A2AC8A", "#FED82F")) +

theme_lucid(legend.title.size = 0) +

labs(subtitle = "ASTER - Slope")

# Extract a legend theme from the yplot that is laid out horizontally

agrofor.biophys.shadow.legend <- get_legend(agrofor.biophys.shadow.bind.plot.Bio01 +

guides(color = guide_legend(nrow = 1)) +

theme_lucid(legend.position = "bottom", legend.title.size = 0))

# Cleaning the yplot for any style or theme

agrofor.biophys.shadow.bind.plot.Bio01 <- agrofor.biophys.shadow.bind.plot.Bio01 + clean_theme() + rremove("legend")

agrofor.biophys.shadow.bind.plot.Bio02 <- agrofor.biophys.shadow.bind.plot.Bio02 + clean_theme() + rremove("legend")

agrofor.biophys.shadow.bind.plot.Bio03 <- agrofor.biophys.shadow.bind.plot.Bio03 + clean_theme() + rremove("legend")

agrofor.biophys.shadow.bind.plot.Bio07 <- agrofor.biophys.shadow.bind.plot.Bio07 + clean_theme() + rremove("legend")

agrofor.biophys.shadow.bind.plot.Bio12 <- agrofor.biophys.shadow.bind.plot.Bio12 + clean_theme() + rremove("legend")

agrofor.biophys.shadow.bind.plot.sand <- agrofor.biophys.shadow.bind.plot.sand + clean_theme() + rremove("legend")

agrofor.biophys.shadow.bind.plot.pH <- agrofor.biophys.shadow.bind.plot.pH + clean_theme() + rremove("legend")

agrofor.biophys.shadow.bind.plot.N <- agrofor.biophys.shadow.bind.plot.N + clean_theme() + rremove("legend")

agrofor.biophys.shadow.bind.plot.totcarbon <- agrofor.biophys.shadow.bind.plot.totcarbon + clean_theme() + rremove("legend")

agrofor.biophys.shadow.bind.plot.slope <- agrofor.biophys.shadow.bind.plot.slope + clean_theme() + rremove("legend")

# Creating the title

# add margin on the left of the drawing canvas,

# so title is aligned with left edge of first plot

agrofor.biophys.shadow.title <- ggdraw() +

draw_label(

"Combined shaddow density plot of missing value distribution of selected biophysical predictors vs. logRR",

fontface = 'bold',

x = 0,

hjust = 0,

size = 20) +

theme(plot.margin = margin(0, 0, 0, 7))

# Warning: Removed 15 rows containing non-finite values (stat_density).

Lets combine these in a cowplot

Show code

# Arranging the plots and using cowplot::plot_grid() to plot the three plots together

combined.agrofor.biophys.shadow <- cowplot::plot_grid(

agrofor.biophys.shadow.title,

NULL,

NULL,

#########

agrofor.biophys.shadow.bind.plot.Bio01,

agrofor.biophys.shadow.bind.plot.Bio02,

agrofor.biophys.shadow.bind.plot.Bio03,

agrofor.biophys.shadow.bind.plot.Bio07,

agrofor.biophys.shadow.bind.plot.Bio12,

agrofor.biophys.shadow.bind.plot.sand,

agrofor.biophys.shadow.bind.plot.pH,

agrofor.biophys.shadow.bind.plot.N,

agrofor.biophys.shadow.bind.plot.totcarbon,

agrofor.biophys.shadow.bind.plot.slope,

#########

agrofor.biophys.shadow.legend,

#########

ncol = 3, nrow = 5, align = "hv",

rel_widths = c(1, 1, 1),

rel_heights = c(2, 2, 2, 2))

combined.agrofor.biophys.shadow

Figure 1: Shaddow plot of selected biophysical predictor features

Show code

# Warning: Removed 15 rows containing non-finite values (stat_density).

# Warning: Graphs cannot be vertically aligned unless the axis parameter is set. Placing graphs unaligned.

# Warning: Graphs cannot be horizontally aligned unless the axis parameter is set. Placing graphs unaligned.

We see again that there is a spatial relation between the missingness of our data, as the pattern is similar for the features extracted from the same data sources. Therefore there must be some areas where we have no biophysical information. This is perhaps because the biophysical co-variables are extracted from a fixed boundary of the African continent and islands such as Capo Verde is situated outside the continent.

Density distributions of features

Until this point we have identified and familiarised ourself with the missing data. We can now make a clean numeric dataset in which we remove all NA observations. This will be helpful for the further process of EDA. For a more aesthetic experience we are also making a custom colour range of 35 colours that we can use to plot our 35 predictors with.

expl.agfor.data.no.na <- agrofor.biophys.modelling.data.numeric %>%

rationalize() %>%

drop_na()

dim(agrofor.biophys.modelling.data.numeric) # <- before removing NAs

# [1] 4578 37

dim(expl.agfor.data.no.na) # <- after removing NAs

# [1] 4461 37

# Colour range with 35 levels

nb.cols.35 <- 35

era.af.colors.35 <- colorRampPalette(brewer.pal(8, "Set2"))(nb.cols.35)

Show code

$page_1

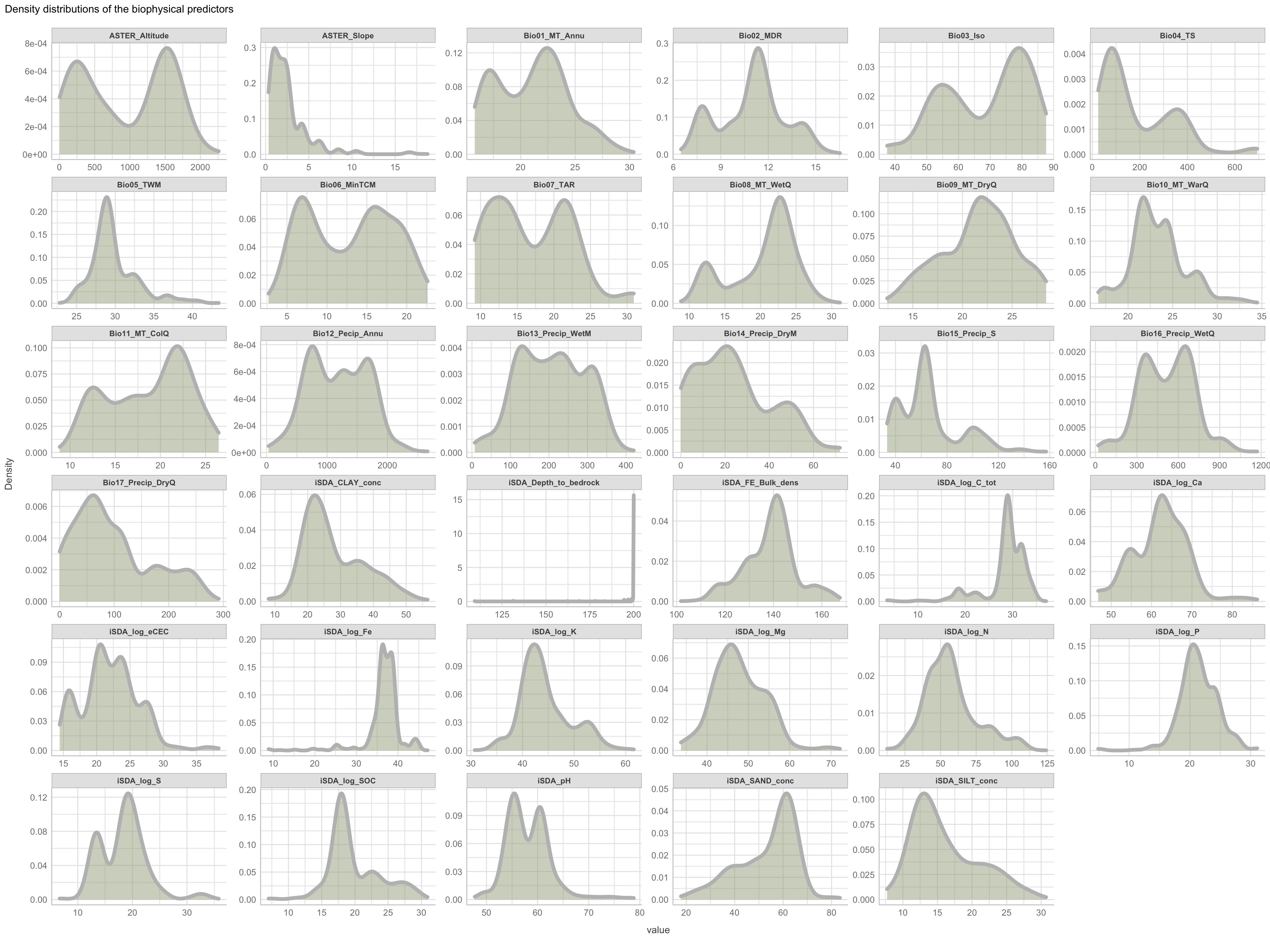

Figure 2: Density distributions of biophysical predictors

Not surprising we see a lot of variation in the density distribution for our biophysical predictors. Some of them shows a multimodal distribution patterns, e.g. altitude and Bio06 the minimum temperature of coldest month, Bio03 the Isothermality and iSDA pH shows a bimodal density distribution pattern, while others, such as Bio05 maximum temperature of warmest month, iSDA Iron concentrations and iSDA total carbon are skewed to the left, right and right, respectfully. Others, like Bio09 mean temperature of driest quarter and iSDA phosphorus concentrations seems to be having a good similarity of a normal distribution. This and other observations gives us a good understanding about the predictors. One take-away massage would be that we clearly need some sort of normalisation and centralisation of the predictors prior to our machine learning exercise in order to get good performance.

Show code

expl.agfor.dens.outcome <- expl.agfor.data.no.na %>%

dplyr::select(c("RR", "logRR")) %>%

plot_density(ggtheme = theme_lucid(),

geom_density_args = list("fill" = "#FED82F", "col" = "gray", "alpha" = 0.5, "size" = 2, "adjust" = 0.5),

ncol = 2, nrow = 1,

parallel = FALSE,

title = "Density distributions of the outcome logRR or RR"

)

Show code

$page_1

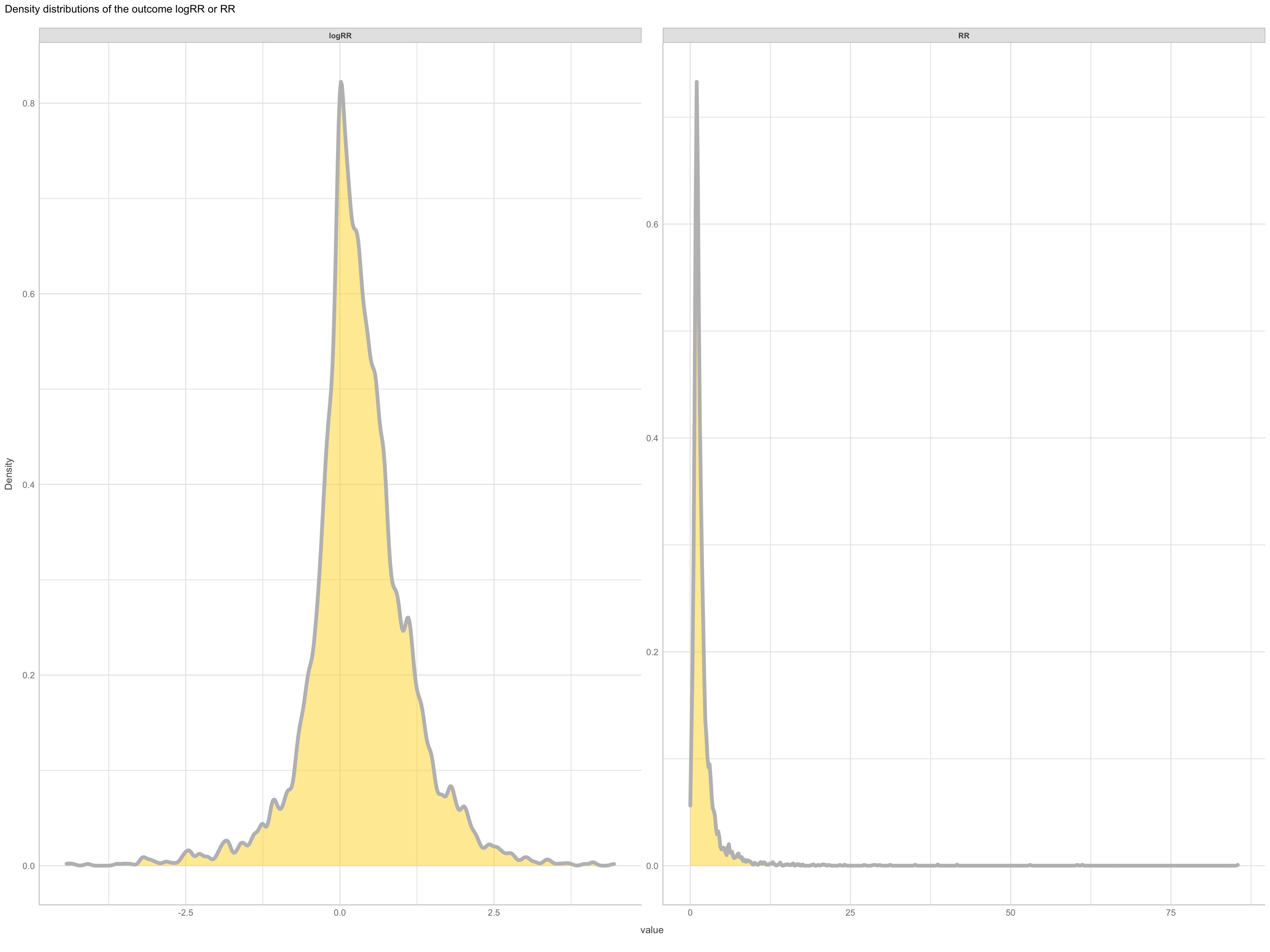

(#fig:logRR density distrubution)Density distributions logRR

It is not only the destribution of predictors that matter when doing machine learning modelling, and in order to get a good performance we also need to check for normality in our outcome feature as they will be difficult for the algorithm to predict if we have too abnormall patterns. So lets view the density distribution for our outcome feature(s) logRR and RR.

This clearly demonstrate that the log transformed RR is a much better outcome feature, compared to the raw RR. We might want to exclude extreme values from our logRR on both the positive and negative tail of the distribution. We might do this using the extreme outlier removal approach already used in the ERAAnalyze function, where response ratios above or below 3∗IQR (interquartile range) are removed.

is_outlier <- function(x) {

return(x < quantile(x, 0.25) - 3 * IQR(x) | x > quantile(x, 0.75) + 3 * IQR(x))

}

expl.agfor.data.no.na.logRR.outlier <- expl.agfor.data.no.na %>%

mutate(ERA_Agroforestry = 1) %>%

group_by(ERA_Agroforestry) %>%

mutate(logRR.outlier = ifelse(is_outlier(logRR), logRR, as.numeric(NA)))

# SAVING DATA

saveRDS(expl.agfor.data.no.na.logRR.outlier, here::here("TidyMod_OUTPUT", "expl.agfor.data.no.na.logRR.outlier.RDS"))

Show code

expl.agfor.data.no.na.logRR.outlier <- readRDS(here::here("TidyMod_OUTPUT", "expl.agfor.data.no.na.logRR.outlier.RDS"))

rmarkdown::paged_table(expl.agfor.data.no.na.logRR.outlier %>% count(logRR.outlier, sort = TRUE))

ERA_Agroforestry <dbl> | logRR.outlier <dbl> | n <int> | ||

|---|---|---|---|---|

| 1 | NA | 4418 | ||

| 1 | -2.507380 | 3 | ||

| 1 | -3.178054 | 2 | ||

| 1 | -4.433320 | 1 | ||

| 1 | -4.337291 | 1 | ||

| 1 | -4.092074 | 1 | ||

| 1 | -3.616559 | 1 | ||

| 1 | -3.504055 | 1 | ||

| 1 | -3.401197 | 1 | ||

| 1 | -3.222868 | 1 |

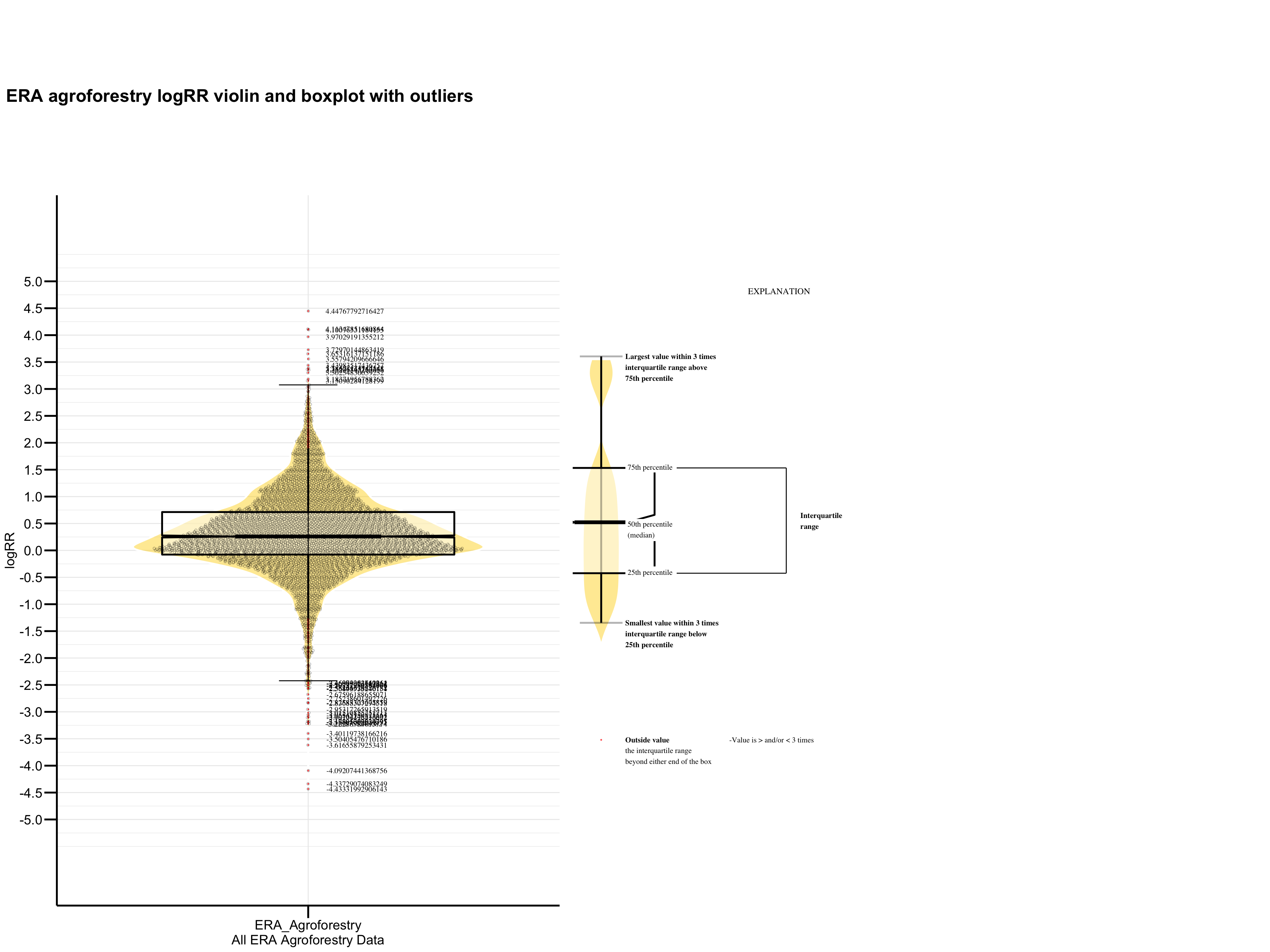

Based on the extreme outliers removal method we have 40 outliers with 28 of them being < 0 and 12 being > 0. Lets assess these outliers visually by making a nice violin and box plot of the outcome variable logRR using ggplot2 with the ggbeeswarm package. We will include the outliers indicated in red within the 3 * QR limit.

Show code

ggplot_box_legend <- function(family = "serif"){

# Create data to use in the boxplot legend:

set.seed(100)

sample_df <- data.frame(parameter = "test",

values = sample(500))

# Extend the top whisker a bit:

sample_df$values[1:100] <- 701:800

# Make sure there's only 1 lower outlier:

sample_df$values[1] <- -350

# Function to calculate important values:

ggplot2_boxplot <- function(x){

quartiles <- as.numeric(quantile(x,

probs = c(0.25, 0.5, 0.75)))

names(quartiles) <- c("25th percentile",

"50th percentile\n(median)",

"75th percentile")

IQR <- diff(quartiles[c(1,3)])

upper_whisker <- max(x[x < (quartiles[3] + 1.5 * IQR)])

lower_whisker <- min(x[x > (quartiles[1] - 1.5 * IQR)])

upper_dots <- x[x > (quartiles[3] + 1.5*IQR)]

lower_dots <- x[x < (quartiles[1] - 1.5*IQR)]

return(list("quartiles" = quartiles,

"25th percentile" = as.numeric(quartiles[1]),

"50th percentile\n(median)" = as.numeric(quartiles[2]),

"75th percentile" = as.numeric(quartiles[3]),

"IQR" = IQR,

"upper_whisker" = upper_whisker,

"lower_whisker" = lower_whisker,

"upper_dots" = upper_dots,

"lower_dots" = lower_dots))

}

# Get those values:

ggplot_output <- ggplot2_boxplot(sample_df$values)

# Lots of text in the legend, make it smaller and consistent font:

update_geom_defaults("text",

list(size = 3,

hjust = 0,

family = family))

# Labels don't inherit text:

update_geom_defaults("label",

list(size = 3,

hjust = 0,

family = family))

# Create the legend:

# The main elements of the plot (the boxplot, error bars, and count)

# are the easy part.

# The text describing each of those takes a lot of fiddling to

# get the location and style just right:

explain_plot <- ggplot() +

geom_violin(data = sample_df,

aes(x = parameter, y = values),

alpha = 0.5,

size = 4,

fill = "#FED82F",

colour = "white",

width = 0.3) +

geom_boxplot(data = sample_df,

aes(x = parameter, y = values),

fill = "white",

col = "black",

notch = TRUE,

outlier.size = 0.3,

lwd = 1,

alpha = 0.5,

outlier.colour = "red",

outlier.fill = "black",

show.legend = F,

outlier.shape = 10) +

stat_boxplot(data = sample_df,

aes(x = parameter, y = values),

alpha = 0.3,

size = 1,

geom = "errorbar",

colour = "black",

linetype = 1,

width = 0.3) + #whiskers+

geom_text(aes(x = 1, y = 950, label = ""), hjust = 0.5) +

geom_text(aes(x = 1.17, y = 950,

label = ""),

fontface = "bold", vjust = 0.4) +

theme_minimal(base_size = 5, base_family = family) +

geom_segment(aes(x = 2.3, xend = 2.3,

y = ggplot_output[["25th percentile"]],

yend = ggplot_output[["75th percentile"]])) +

geom_segment(aes(x = 1.2, xend = 2.3,

y = ggplot_output[["25th percentile"]],

yend = ggplot_output[["25th percentile"]])) +

geom_segment(aes(x = 1.2, xend = 2.3,

y = ggplot_output[["75th percentile"]],

yend = ggplot_output[["75th percentile"]])) +

geom_text(aes(x = 2.4, y = ggplot_output[["50th percentile\n(median)"]]),

label = "Interquartile\nrange", fontface = "bold",

vjust = 0.4) +

geom_text(aes(x = c(1.17,1.17),

y = c(ggplot_output[["upper_whisker"]],

ggplot_output[["lower_whisker"]]),

label = c("Largest value within 3 times\ninterquartile range above\n75th percentile",

"Smallest value within 3 times\ninterquartile range below\n25th percentile")),

fontface = "bold", vjust = 0.9) +

geom_text(aes(x = c(1.17),

y = ggplot_output[["lower_dots"]],

label = "Outside value"),

vjust = 0.5, fontface = "bold") +

geom_text(aes(x = c(1.9),

y = ggplot_output[["lower_dots"]],

label = "-Value is > and/or < 3 times"),

vjust = 0.5) +

geom_text(aes(x = 1.17,

y = ggplot_output[["lower_dots"]],

label = "the interquartile range\nbeyond either end of the box"),

vjust = 1.5) +

geom_label(aes(x = 1.17, y = ggplot_output[["quartiles"]],

label = names(ggplot_output[["quartiles"]])),

vjust = c(0.4,0.85,0.4),

fill = "white", label.size = 0) +

ylab("") + xlab("") +

theme(axis.text = element_blank(),

axis.ticks = element_blank(),

panel.grid = element_blank(),

aspect.ratio = 4/3,

plot.title = element_text(hjust = 0.5, size = 10)) +

coord_cartesian(xlim = c(1.4,3.1), ylim = c(-600, 900)) +

labs(title = "EXPLANATION")

return(explain_plot)

}

Creating logRR outliers plot

Show code

legend_plot <- ggplot_box_legend()

logRR.outlier.plot <-

ggplot(data = expl.agfor.data.no.na.logRR.outlier, aes(y = logRR, x = "ERA_Agroforestry")) +

scale_fill_viridis_d( option = "D") +

geom_violin(alpha = 0.5, position = position_dodge(width = 1), size = 1, fill = "#FED82F", colour = "white") +

geom_boxplot(notch = TRUE, outlier.size = 0.3, color = "black", lwd = 1, alpha = 0.5, outlier.colour = "red",

outlier.fill = "black", show.legend = F, outlier.shape = 10) +

scale_y_continuous(breaks =seq(-5, 5, 0.5), limit = c(-6, 6)) +

stat_boxplot(geom ='errorbar', width = 0.15, coef = 3) +

geom_text(aes(label = logRR.outlier), na.rm = TRUE, hjust = -0.3) +

# geom_point( shape = 21,size=2, position = position_jitterdodge(), color="black",alpha=1)+

ggbeeswarm::geom_quasirandom(shape = 21, size = 1, dodge.width = 0.5, color = "black", alpha = 0.3, show.legend = F) +

theme_minimal() +

ylab(c("logRR")) +

xlab("All ERA Agroforestry Data") +

theme(#panel.border = element_rect(colour = "black", fill=NA, size=2),

axis.line = element_line(colour = "black" , size = 1),

axis.ticks = element_line(size = 1, color = "black"),

axis.text = element_text(color = "black"),

axis.ticks.length = unit(0.5, "cm"),

legend.position = "none") +

font("xylab", size = 15) +

font("xy", size = 15) +

font("xy.text", size = 15) +

font("legend.text", size = 15) +

guides(fill = guide_legend(override.aes = list(alpha = 0.3, color = "black")))

logRR.outlier.plot.title <- ggdraw() +

draw_label(

"ERA agroforestry logRR violin and boxplot with outliers",

fontface = 'bold',

x = 0,

hjust = 0,

size = 20) +

theme(plot.margin = margin(0, 0, 0, 7))

Show code

Show code

Figure 3: logRR outliers plot

We see that there are some logRR observation we would have to remove in order for our machine learning models to perform well!

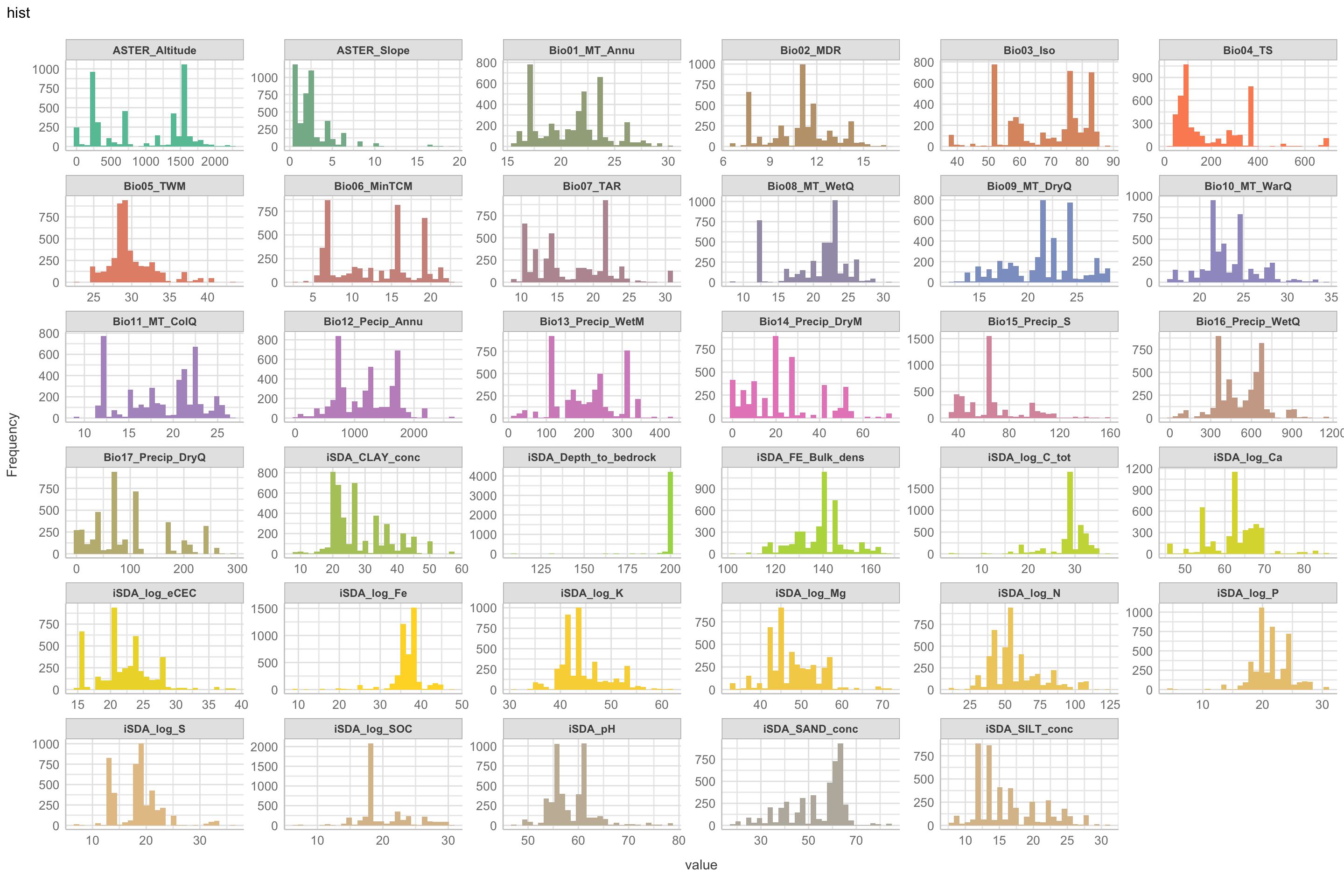

Histograms of biophysical predictor features

Lets now look at the histograms of our biophysical predictors. This is slightly similar to the density plots, but with the histograms we are able to see how the data is distributed in bins of 30 observations each. We are using the plot_histogram() from the DataExplorer package. The way we are going to plot the histogram requires a custom colour specification that has more than 1000 color objects specified.

Generating custom colour object with 1050 colours

Show code

# 1050 levels of 35 colours

explor.cols.group.35_1050 <- c("#66C2A5", "#66C2A5", "#66C2A5", "#66C2A5", "#66C2A5",

"#66C2A5", "#66C2A5", "#66C2A5", "#66C2A5", "#66C2A5",

"#66C2A5", "#66C2A5", "#66C2A5", "#66C2A5", "#66C2A5",

"#66C2A5", "#66C2A5", "#66C2A5", "#66C2A5", "#66C2A5",

"#66C2A5", "#66C2A5", "#66C2A5", "#66C2A5", "#66C2A5",

"#66C2A5", "#66C2A5", "#66C2A5", "#66C2A5", "#66C2A5",

"#84B797", "#84B797", "#84B797", "#84B797", "#84B797",

"#84B797", "#84B797", "#84B797", "#84B797", "#84B797",

"#84B797", "#84B797", "#84B797", "#84B797", "#84B797",

"#84B797", "#84B797", "#84B797", "#84B797", "#84B797",

"#84B797", "#84B797", "#84B797", "#84B797", "#84B797",

"#84B797", "#84B797", "#84B797", "#84B797", "#84B797",

"#A2AC8A", "#A2AC8A", "#A2AC8A", "#A2AC8A", "#A2AC8A",

"#A2AC8A", "#A2AC8A", "#A2AC8A", "#A2AC8A", "#A2AC8A",

"#A2AC8A", "#A2AC8A", "#A2AC8A", "#A2AC8A", "#A2AC8A",

"#A2AC8A", "#A2AC8A", "#A2AC8A", "#A2AC8A", "#A2AC8A",

"#A2AC8A", "#A2AC8A", "#A2AC8A", "#A2AC8A", "#A2AC8A",

"#A2AC8A", "#A2AC8A", "#A2AC8A", "#A2AC8A", "#A2AC8A",

"#C0A27C", "#C0A27C", "#C0A27C", "#C0A27C", "#C0A27C",

"#C0A27C", "#C0A27C", "#C0A27C", "#C0A27C", "#C0A27C",

"#C0A27C", "#C0A27C", "#C0A27C", "#C0A27C", "#C0A27C",

"#C0A27C", "#C0A27C", "#C0A27C", "#C0A27C", "#C0A27C",

"#C0A27C", "#C0A27C", "#C0A27C", "#C0A27C", "#C0A27C",

"#C0A27C", "#C0A27C", "#C0A27C", "#C0A27C", "#C0A27C",

"#DE976F", "#DE976F", "#DE976F", "#DE976F", "#DE976F",

"#DE976F", "#DE976F", "#DE976F", "#DE976F", "#DE976F",

"#DE976F", "#DE976F", "#DE976F", "#DE976F", "#DE976F",

"#DE976F", "#DE976F", "#DE976F", "#DE976F", "#DE976F",

"#DE976F", "#DE976F", "#DE976F", "#DE976F", "#DE976F",

"#DE976F", "#DE976F", "#DE976F", "#DE976F", "#DE976F",

"#FC8D62", "#FC8D62", "#FC8D62", "#FC8D62", "#FC8D62",

"#FC8D62", "#FC8D62", "#FC8D62", "#FC8D62", "#FC8D62",

"#FC8D62", "#FC8D62", "#FC8D62", "#FC8D62", "#FC8D62",

"#FC8D62", "#FC8D62", "#FC8D62", "#FC8D62", "#FC8D62",

"#FC8D62", "#FC8D62", "#FC8D62", "#FC8D62", "#FC8D62",

"#FC8D62", "#FC8D62", "#FC8D62", "#FC8D62", "#FC8D62",

"#E59077", "#E59077", "#E59077", "#E59077", "#E59077",

"#E59077", "#E59077", "#E59077", "#E59077", "#E59077",

"#E59077", "#E59077", "#E59077", "#E59077", "#E59077",

"#E59077", "#E59077", "#E59077", "#E59077", "#E59077",

"#E59077", "#E59077", "#E59077", "#E59077", "#E59077",

"#E59077", "#E59077", "#E59077", "#E59077", "#E59077",

"#CF948C", "#CF948C", "#CF948C", "#CF948C", "#CF948C",

"#CF948C", "#CF948C", "#CF948C", "#CF948C", "#CF948C",

"#CF948C", "#CF948C", "#CF948C", "#CF948C", "#CF948C",

"#CF948C", "#CF948C", "#CF948C", "#CF948C", "#CF948C",

"#CF948C", "#CF948C", "#CF948C", "#CF948C", "#CF948C",

"#CF948C", "#CF948C", "#CF948C", "#CF948C", "#CF948C",

"#B998A1", "#B998A1", "#B998A1", "#B998A1", "#B998A1",

"#B998A1", "#B998A1", "#B998A1", "#B998A1", "#B998A1",

"#B998A1", "#B998A1", "#B998A1", "#B998A1", "#B998A1",

"#B998A1", "#B998A1", "#B998A1", "#B998A1", "#B998A1",

"#B998A1", "#B998A1", "#B998A1", "#B998A1", "#B998A1",

"#B998A1", "#B998A1", "#B998A1", "#B998A1", "#B998A1",

"#A39CB5", "#A39CB5", "#A39CB5", "#A39CB5", "#A39CB5",

"#A39CB5", "#A39CB5", "#A39CB5", "#A39CB5", "#A39CB5",

"#A39CB5", "#A39CB5", "#A39CB5", "#A39CB5", "#A39CB5",

"#A39CB5", "#A39CB5", "#A39CB5", "#A39CB5", "#A39CB5",

"#A39CB5", "#A39CB5", "#A39CB5", "#A39CB5", "#A39CB5",

"#A39CB5", "#A39CB5", "#A39CB5", "#A39CB5", "#A39CB5",

"#8DA0CB", "#8DA0CB", "#8DA0CB", "#8DA0CB", "#8DA0CB",

"#8DA0CB", "#8DA0CB", "#8DA0CB", "#8DA0CB", "#8DA0CB",

"#8DA0CB", "#8DA0CB", "#8DA0CB", "#8DA0CB", "#8DA0CB",

"#8DA0CB", "#8DA0CB", "#8DA0CB", "#8DA0CB", "#8DA0CB",

"#8DA0CB", "#8DA0CB", "#8DA0CB", "#8DA0CB", "#8DA0CB",

"#8DA0CB", "#8DA0CB", "#8DA0CB", "#8DA0CB", "#8DA0CB",

"#9F9BC9", "#9F9BC9", "#9F9BC9", "#9F9BC9", "#9F9BC9",

"#9F9BC9", "#9F9BC9", "#9F9BC9", "#9F9BC9", "#9F9BC9",

"#9F9BC9", "#9F9BC9", "#9F9BC9", "#9F9BC9", "#9F9BC9",

"#9F9BC9", "#9F9BC9", "#9F9BC9", "#9F9BC9", "#9F9BC9",

"#9F9BC9", "#9F9BC9", "#9F9BC9", "#9F9BC9", "#9F9BC9",

"#9F9BC9", "#9F9BC9", "#9F9BC9", "#9F9BC9", "#9F9BC9",

"#B197C7", "#B197C7", "#B197C7", "#B197C7", "#B197C7",

"#B197C7", "#B197C7", "#B197C7", "#B197C7", "#B197C7",

"#B197C7", "#B197C7", "#B197C7", "#B197C7", "#B197C7",

"#B197C7", "#B197C7", "#B197C7", "#B197C7", "#B197C7",

"#B197C7", "#B197C7", "#B197C7", "#B197C7", "#B197C7",

"#B197C7", "#B197C7", "#B197C7", "#B197C7", "#B197C7",

"#C392C6", "#C392C6", "#C392C6", "#C392C6", "#C392C6",

"#C392C6", "#C392C6", "#C392C6", "#C392C6", "#C392C6",

"#C392C6", "#C392C6", "#C392C6", "#C392C6", "#C392C6",

"#C392C6", "#C392C6", "#C392C6", "#C392C6", "#C392C6",

"#C392C6", "#C392C6", "#C392C6", "#C392C6", "#C392C6",

"#C392C6", "#C392C6", "#C392C6", "#C392C6", "#C392C6",

"#D48EC4", "#D48EC4", "#D48EC4", "#D48EC4", "#D48EC4",

"#D48EC4", "#D48EC4", "#D48EC4", "#D48EC4", "#D48EC4",

"#D48EC4", "#D48EC4", "#D48EC4", "#D48EC4", "#D48EC4",

"#D48EC4", "#D48EC4", "#D48EC4", "#D48EC4", "#D48EC4",

"#D48EC4", "#D48EC4", "#D48EC4", "#D48EC4", "#D48EC4",

"#D48EC4", "#D48EC4", "#D48EC4", "#D48EC4", "#D48EC4",

"#E78AC3", "#E78AC3", "#E78AC3", "#E78AC3", "#E78AC3",

"#E78AC3", "#E78AC3", "#E78AC3", "#E78AC3", "#E78AC3",

"#E78AC3", "#E78AC3", "#E78AC3", "#E78AC3", "#E78AC3",

"#E78AC3", "#E78AC3", "#E78AC3", "#E78AC3", "#E78AC3",

"#E78AC3", "#E78AC3", "#E78AC3", "#E78AC3", "#E78AC3",

"#E78AC3", "#E78AC3", "#E78AC3", "#E78AC3", "#E78AC3",

"#DA99AC", "#DA99AC", "#DA99AC", "#DA99AC", "#DA99AC",

"#DA99AC", "#DA99AC", "#DA99AC", "#DA99AC", "#DA99AC",

"#DA99AC", "#DA99AC", "#DA99AC", "#DA99AC", "#DA99AC",

"#DA99AC", "#DA99AC", "#DA99AC", "#DA99AC", "#DA99AC",

"#DA99AC", "#DA99AC", "#DA99AC", "#DA99AC", "#DA99AC",

"#DA99AC", "#DA99AC", "#DA99AC", "#DA99AC", "#DA99AC",

"#CDA996", "#CDA996", "#CDA996", "#CDA996", "#CDA996",

"#CDA996", "#CDA996", "#CDA996", "#CDA996", "#CDA996",

"#CDA996", "#CDA996", "#CDA996", "#CDA996", "#CDA996",

"#CDA996", "#CDA996", "#CDA996", "#CDA996", "#CDA996",

"#CDA996", "#CDA996", "#CDA996", "#CDA996", "#CDA996",

"#CDA996", "#CDA996", "#CDA996", "#CDA996", "#CDA996",

"#C0B880", "#C0B880", "#C0B880", "#C0B880", "#C0B880",

"#C0B880", "#C0B880", "#C0B880", "#C0B880", "#C0B880",

"#C0B880", "#C0B880", "#C0B880", "#C0B880", "#C0B880",

"#C0B880", "#C0B880", "#C0B880", "#C0B880", "#C0B880",

"#C0B880", "#C0B880", "#C0B880", "#C0B880", "#C0B880",

"#C0B880", "#C0B880", "#C0B880", "#C0B880", "#C0B880",

"#B3C86A", "#B3C86A", "#B3C86A", "#B3C86A", "#B3C86A",

"#B3C86A", "#B3C86A", "#B3C86A", "#B3C86A", "#B3C86A",

"#B3C86A", "#B3C86A", "#B3C86A", "#B3C86A", "#B3C86A",

"#B3C86A", "#B3C86A", "#B3C86A", "#B3C86A", "#B3C86A",

"#B3C86A", "#B3C86A", "#B3C86A", "#B3C86A", "#B3C86A",

"#B3C86A", "#B3C86A", "#B3C86A", "#B3C86A", "#B3C86A",

"#A6D854", "#A6D854", "#A6D854", "#A6D854", "#A6D854",

"#A6D854", "#A6D854", "#A6D854", "#A6D854", "#A6D854",

"#A6D854", "#A6D854", "#A6D854", "#A6D854", "#A6D854",

"#A6D854", "#A6D854", "#A6D854", "#A6D854", "#A6D854",

"#A6D854", "#A6D854", "#A6D854", "#A6D854", "#A6D854",

"#A6D854", "#A6D854", "#A6D854", "#A6D854", "#A6D854",

"#B7D84C", "#B7D84C", "#B7D84C", "#B7D84C", "#B7D84C",

"#B7D84C", "#B7D84C", "#B7D84C", "#B7D84C", "#B7D84C",

"#B7D84C", "#B7D84C", "#B7D84C", "#B7D84C", "#B7D84C",

"#B7D84C", "#B7D84C", "#B7D84C", "#B7D84C", "#B7D84C",

"#B7D84C", "#B7D84C", "#B7D84C", "#B7D84C", "#B7D84C",

"#B7D84C", "#B7D84C", "#B7D84C", "#B7D84C", "#B7D84C",

"#C9D845", "#C9D845", "#C9D845", "#C9D845", "#C9D845",

"#C9D845", "#C9D845", "#C9D845", "#C9D845", "#C9D845",

"#C9D845", "#C9D845", "#C9D845", "#C9D845", "#C9D845",

"#C9D845", "#C9D845", "#C9D845", "#C9D845", "#C9D845",

"#C9D845", "#C9D845", "#C9D845", "#C9D845", "#C9D845",

"#C9D845", "#C9D845", "#C9D845", "#C9D845", "#C9D845",

"#DBD83D", "#DBD83D", "#DBD83D", "#DBD83D", "#DBD83D",

"#DBD83D", "#DBD83D", "#DBD83D", "#DBD83D", "#DBD83D",

"#DBD83D", "#DBD83D", "#DBD83D", "#DBD83D", "#DBD83D",

"#DBD83D", "#DBD83D", "#DBD83D", "#DBD83D", "#DBD83D",

"#DBD83D", "#DBD83D", "#DBD83D", "#DBD83D", "#DBD83D",

"#DBD83D", "#DBD83D", "#DBD83D", "#DBD83D", "#DBD83D",

"#EDD836", "#EDD836", "#EDD836", "#EDD836", "#EDD836",

"#EDD836", "#EDD836", "#EDD836", "#EDD836", "#EDD836",

"#EDD836", "#EDD836", "#EDD836", "#EDD836", "#EDD836",

"#EDD836", "#EDD836", "#EDD836", "#EDD836", "#EDD836",

"#EDD836", "#EDD836", "#EDD836", "#EDD836", "#EDD836",

"#EDD836", "#EDD836", "#EDD836", "#EDD836", "#EDD836",

"#FED82F", "#FED82F", "#FED82F", "#FED82F", "#FED82F",

"#FED82F", "#FED82F", "#FED82F", "#FED82F", "#FED82F",

"#FED82F", "#FED82F", "#FED82F", "#FED82F", "#FED82F",

"#FED82F", "#FED82F", "#FED82F", "#FED82F", "#FED82F",

"#FED82F", "#FED82F", "#FED82F", "#FED82F", "#FED82F",

"#FED82F", "#FED82F", "#FED82F", "#FED82F", "#FED82F",

"#F9D443", "#F9D443", "#F9D443", "#F9D443", "#F9D443",

"#F9D443", "#F9D443", "#F9D443", "#F9D443", "#F9D443",

"#F9D443", "#F9D443", "#F9D443", "#F9D443", "#F9D443",

"#F9D443", "#F9D443", "#F9D443", "#F9D443", "#F9D443",

"#F9D443", "#F9D443", "#F9D443", "#F9D443", "#F9D443",

"#F9D443", "#F9D443", "#F9D443", "#F9D443", "#F9D443",

"#F4D057", "#F4D057", "#F4D057", "#F4D057", "#F4D057",

"#F4D057", "#F4D057", "#F4D057", "#F4D057", "#F4D057",

"#F4D057", "#F4D057", "#F4D057", "#F4D057", "#F4D057",

"#F4D057", "#F4D057", "#F4D057", "#F4D057", "#F4D057",

"#F4D057", "#F4D057", "#F4D057", "#F4D057", "#F4D057",

"#F4D057", "#F4D057", "#F4D057", "#F4D057", "#F4D057",

"#EFCC6B", "#EFCC6B", "#EFCC6B", "#EFCC6B", "#EFCC6B",

"#EFCC6B", "#EFCC6B", "#EFCC6B", "#EFCC6B", "#EFCC6B",

"#EFCC6B", "#EFCC6B", "#EFCC6B", "#EFCC6B", "#EFCC6B",

"#EFCC6B", "#EFCC6B", "#EFCC6B", "#EFCC6B", "#EFCC6B",

"#EFCC6B", "#EFCC6B", "#EFCC6B", "#EFCC6B", "#EFCC6B",

"#EFCC6B", "#EFCC6B", "#EFCC6B", "#EFCC6B", "#EFCC6B",

"#EAC87F", "#EAC87F", "#EAC87F", "#EAC87F", "#EAC87F",

"#EAC87F", "#EAC87F", "#EAC87F", "#EAC87F", "#EAC87F",

"#EAC87F", "#EAC87F", "#EAC87F", "#EAC87F", "#EAC87F",

"#EAC87F", "#EAC87F", "#EAC87F", "#EAC87F", "#EAC87F",

"#EAC87F", "#EAC87F", "#EAC87F", "#EAC87F", "#EAC87F",

"#EAC87F", "#EAC87F", "#EAC87F", "#EAC87F", "#EAC87F",

"#E5C494", "#E5C494", "#E5C494", "#E5C494", "#E5C494",

"#E5C494", "#E5C494", "#E5C494", "#E5C494", "#E5C494",

"#E5C494", "#E5C494", "#E5C494", "#E5C494", "#E5C494",

"#E5C494", "#E5C494", "#E5C494", "#E5C494", "#E5C494",

"#E5C494", "#E5C494", "#E5C494", "#E5C494", "#E5C494",

"#E5C494", "#E5C494", "#E5C494", "#E5C494", "#E5C494",

"#DBC09A", "#DBC09A", "#DBC09A", "#DBC09A", "#DBC09A",

"#DBC09A", "#DBC09A", "#DBC09A", "#DBC09A", "#DBC09A",

"#DBC09A", "#DBC09A", "#DBC09A", "#DBC09A", "#DBC09A",

"#DBC09A", "#DBC09A", "#DBC09A", "#DBC09A", "#DBC09A",

"#DBC09A", "#DBC09A", "#DBC09A", "#DBC09A", "#DBC09A",

"#DBC09A", "#DBC09A", "#DBC09A", "#DBC09A", "#DBC09A",

"#C7B9A6", "#C7B9A6", "#C7B9A6", "#C7B9A6", "#C7B9A6",

"#C7B9A6", "#C7B9A6", "#C7B9A6", "#C7B9A6", "#C7B9A6",

"#C7B9A6", "#C7B9A6", "#C7B9A6", "#C7B9A6", "#C7B9A6",

"#C7B9A6", "#C7B9A6", "#C7B9A6", "#C7B9A6", "#C7B9A6",

"#C7B9A6", "#C7B9A6", "#C7B9A6", "#C7B9A6", "#C7B9A6",

"#C7B9A6", "#C7B9A6", "#C7B9A6", "#C7B9A6", "#C7B9A6",

"#BCB6AC", "#BCB6AC", "#BCB6AC", "#BCB6AC", "#BCB6AC",

"#BCB6AC", "#BCB6AC", "#BCB6AC", "#BCB6AC", "#BCB6AC",

"#BCB6AC", "#BCB6AC", "#BCB6AC", "#BCB6AC", "#BCB6AC",

"#BCB6AC", "#BCB6AC", "#BCB6AC", "#BCB6AC", "#BCB6AC",

"#BCB6AC", "#BCB6AC", "#BCB6AC", "#BCB6AC", "#BCB6AC",

"#BCB6AC", "#BCB6AC", "#BCB6AC", "#BCB6AC", "#BCB6AC",

"#DBC09A", "#DBC09A", "#DBC09A", "#DBC09A", "#DBC09A",

"#DBC09A", "#DBC09A", "#DBC09A", "#DBC09A", "#DBC09A",

"#DBC09A", "#DBC09A", "#DBC09A", "#DBC09A", "#DBC09A",

"#DBC09A", "#DBC09A", "#DBC09A", "#DBC09A", "#DBC09A",

"#DBC09A", "#DBC09A", "#DBC09A", "#DBC09A", "#DBC09A",

"#DBC09A", "#DBC09A", "#DBC09A", "#DBC09A", "#DBC09A"

)

Show code

expl.agfor.hist <- expl.agfor.data.no.na %>%

dplyr::select(-c("RR", "logRR")) %>%

DataExplorer::plot_histogram(ggtheme = theme_lucid(),

geom_histogram_args = list(fill = (explor.cols.group.35_1050), bins = 30L),

ncol = 6, nrow = 6,

parallel = FALSE,

title = "hist"

)

# SAVING PLOT

saveRDS(expl.agfor.hist, here::here("TidyMod_OUTPUT","expl.agfor.hist.RDS"))

Show code

$page_1

Figure 4: Histogram of biophysical predictors vs. logRR

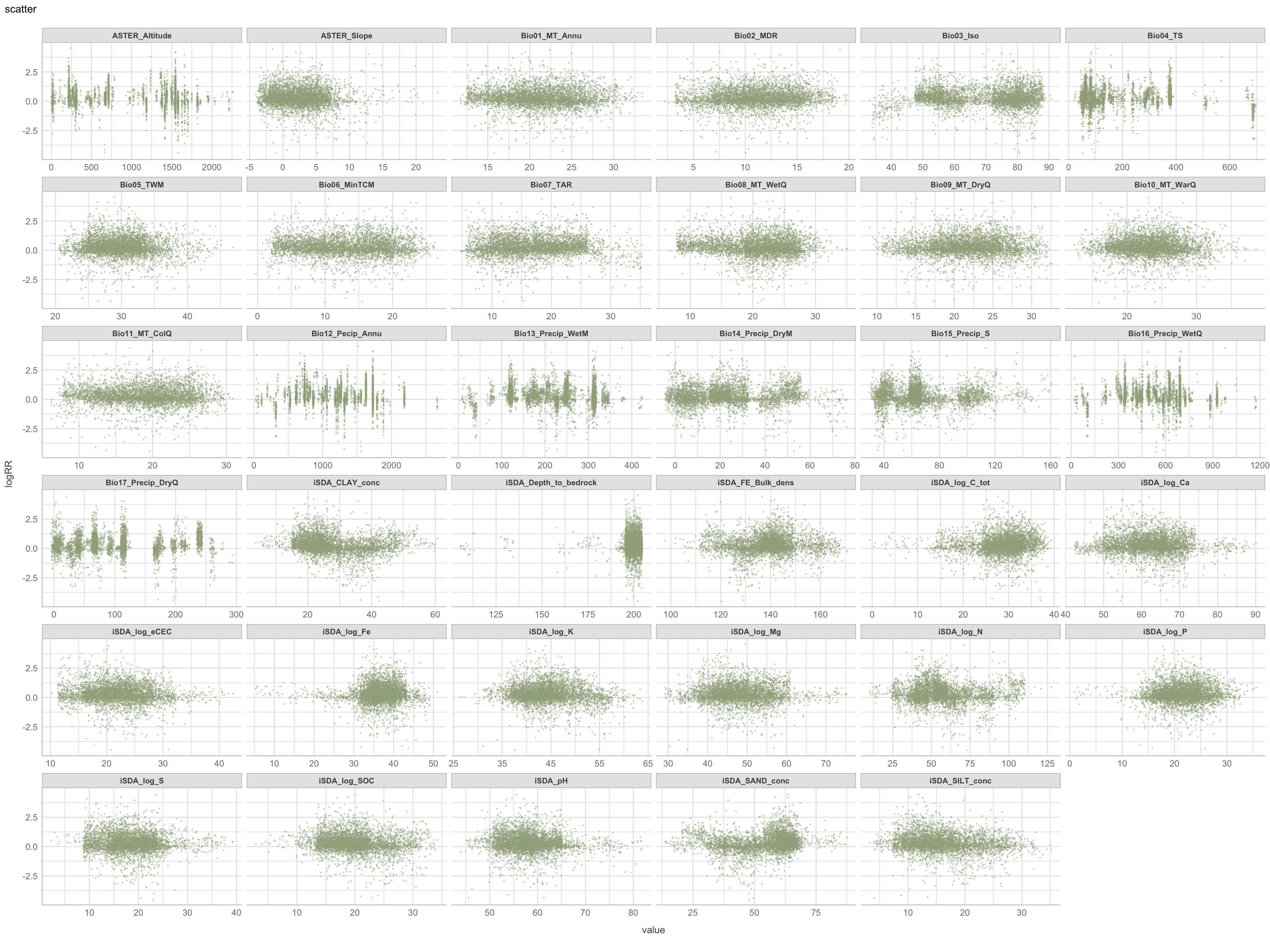

Scatter plots i) of continuous predictors (all biophysical) i) of outcome (RR and logRR)

We use the plot_scatterplot() function from the DataExplorer package to generate a multiples of all the predictors with the response/outcome, logRR on the y-axis.

Show code

expl.agfor.scatter <- expl.agfor.data.no.na %>%

dplyr::select(-c("RR")) %>%

DataExplorer::plot_scatterplot(by = "logRR",

geom_point_args = list("col" = "#A2AC8A", "shape" = 10, "alpha" = 0.3,

"position" = "position_jitter"("width" = 0.1, "height" = 4.5), "size" = 0.3), # adding a jitter effect to avoid

ggtheme = theme_lucid(), # too much overlap of points

ncol = 6, nrow = 6,

title = "scatter")

# SAVING PLOT

saveRDS(expl.agfor.scatter, here::here("TidyMod_OUTPUT","expl.agfor.scatter.RDS"))

Show code

$page_1

Figure 5: Scatter plot of biophysical predictors vs. logRR

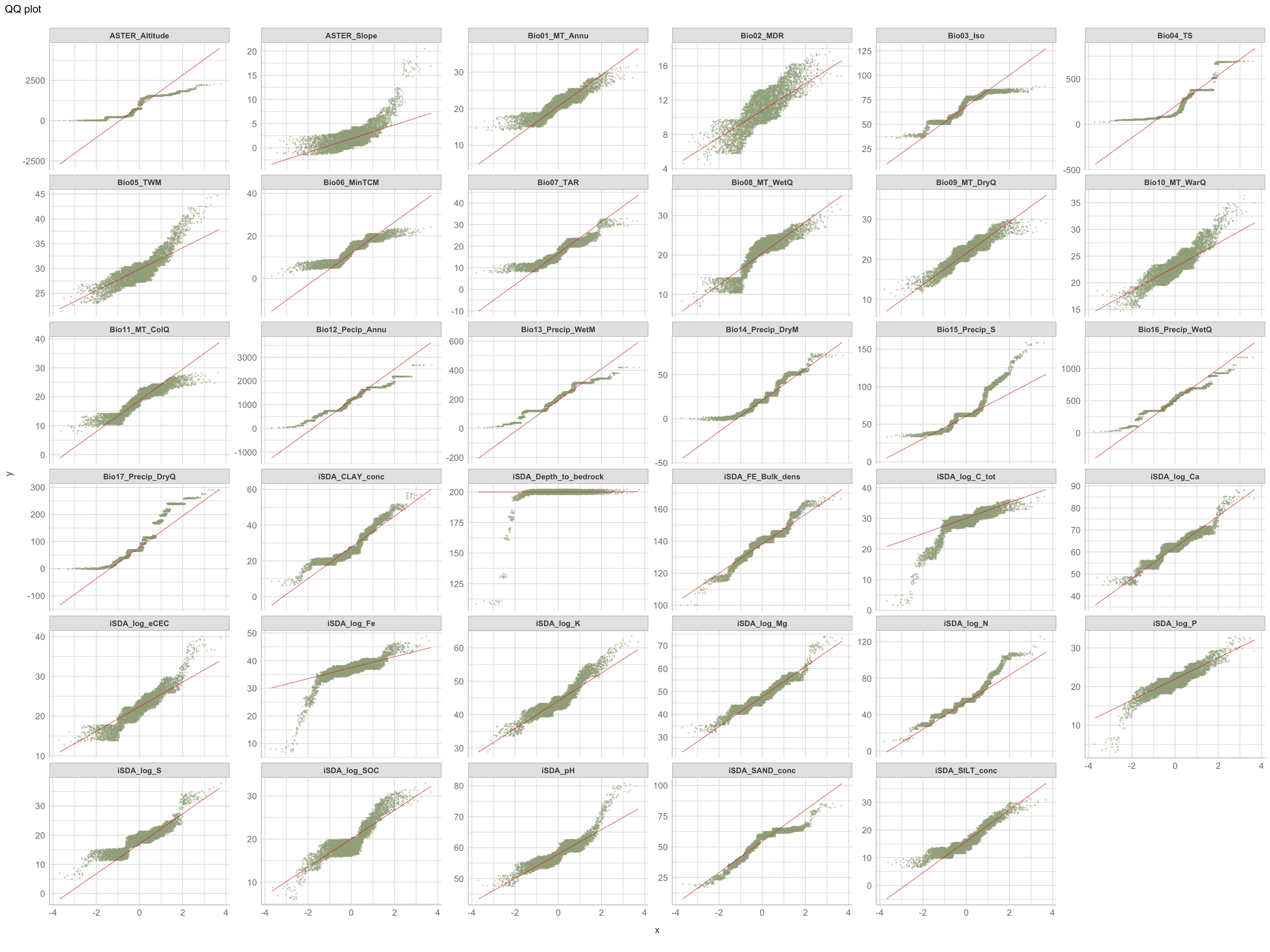

Quantile-quantile plots i) of continuous predictors (all biophysical) i) of outcome (RR and logRR)

Quantile-quantile plots (QQ-plots) is a plot of the quantiles of one sample or distribution of the data against the quantiles of a second sample. QQ-plot makes it possible to compare the distribution of the data of a batch with the so-called normal or Gaussian distribution with the hypothesis that the data is distributed along the diagonal axis in the plot, through the 1st and 3rd quartiles (here shown in red).

expl.agfor.qqplot.pred <- expl.agfor.data.no.na %>%

dplyr::select(-c("RR", "logRR")) %>%

plot_qq(geom_qq_args = list("col" = "#A2AC8A", "shape" = 10, "alpha" = 0.3,

position = position_jitter(width = 0.1, height = 2), "size" = 0.3),

geom_qq_line_args = list("colour" = "#de2d26", "alpha" = 0.6, "size" = 0.4, "geom" = "path", "position" = "identity"),

ggtheme = theme_lucid(),

ncol = 6, nrow = 6,

title = "QQ plot")

# SAVING PLOT

saveRDS(expl.agfor.qqplot.pred, here::here("TidyMod_OUTPUT","expl.agfor.qqplot.pred.RDS"))

Show code

$page_1

Figure 6: QQ plot of biophysical predictors

Again.. we clearly see that the predictors are not normally distributed. The QQ-plots can be interpreted based on whether there shape is of convex, concave, convex-concave, or concave-convex. A concave plot implies that the sample on the x-axis is more right-skewed, like the shape we see for iSDA Iron concentrations and iSDA total carbon. A convex plot implies that the sample on the x-axis is less right-skewed, or more left skewed, that is very clear what is the case for the slope feature. A convex-concave (concave-convex) plot implies that the data is heavy tailed on the x axis, e.g. iSDA soil organic carbon.

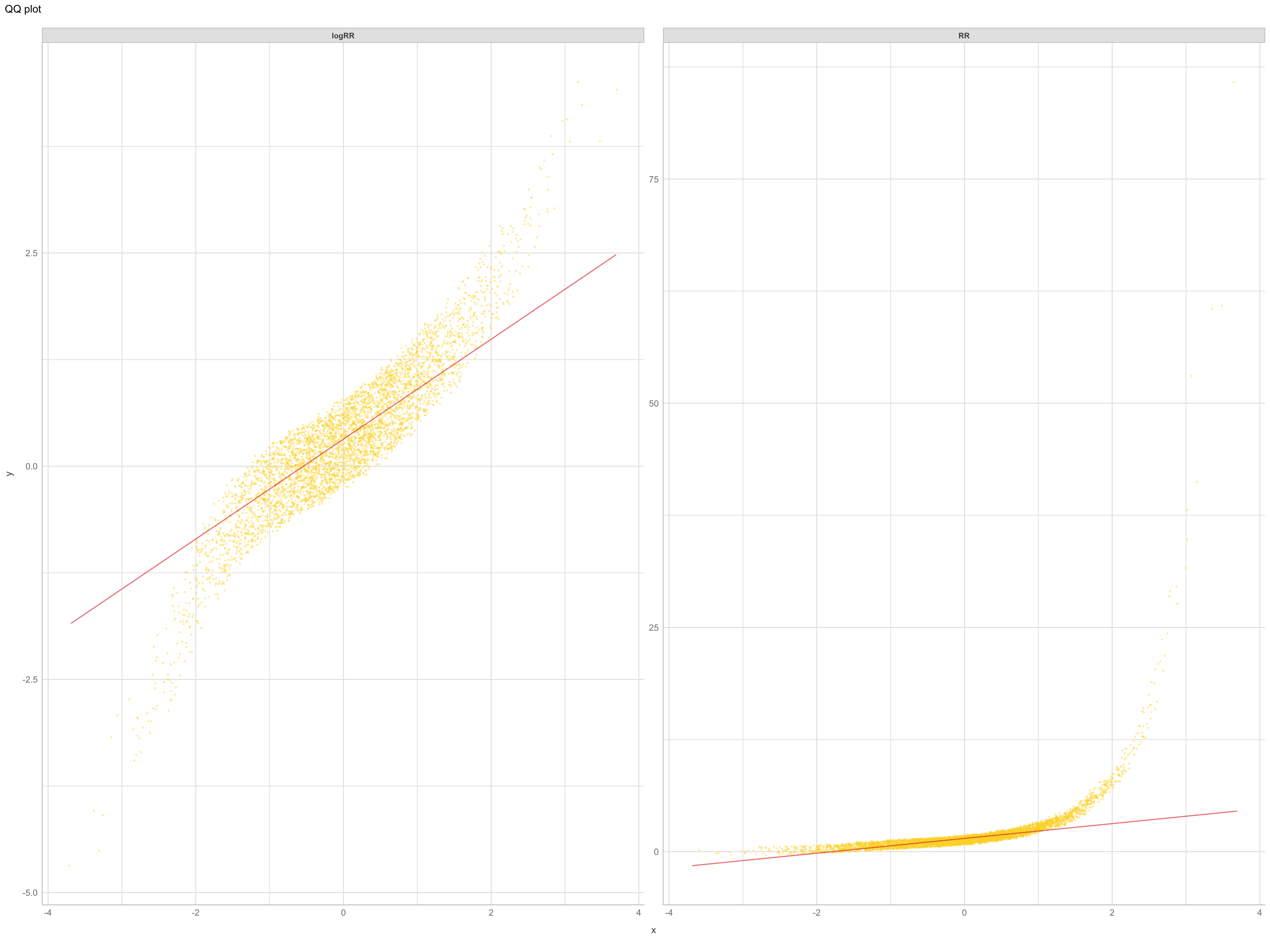

Lets also take a look at a QQ-plot for the outcome, logRR and RR.

expl.agfor.qqplot.logRR <- expl.agfor.data.no.na %>%

dplyr::select(c("RR", "logRR")) %>%

plot_qq(geom_qq_args = list("col" = "#FED82F", "shape" = 10, "alpha" = 0.3,

position = position_jitter(width = 0.1, height = 0.5), "size" = 0.5),

geom_qq_line_args = list("colour" = "#de2d26", "alpha" = 0.6, "size" = 0.6, "geom" = "path", "position" = "identity"),

ggtheme = theme_lucid(),

ncol = 2, nrow = 2,

title = "QQ plot")

# SAVING PLOT

saveRDS(expl.agfor.qqplot.logRR, here::here("TidyMod_OUTPUT","expl.agfor.qqplot.logRR.RDS"))

Show code

$page_1

Figure 7: qq plot of outcomes RR and logRR

It is very evident again that the log-transformed RR has a better distribution, even though we can clearly see from the convex-concave patterns of logRR, that the data is heavy tailed on both ends. These “extreme” data points can perhabs be minimised with the removal of the extreme outliers, that we just discussed in previous section.

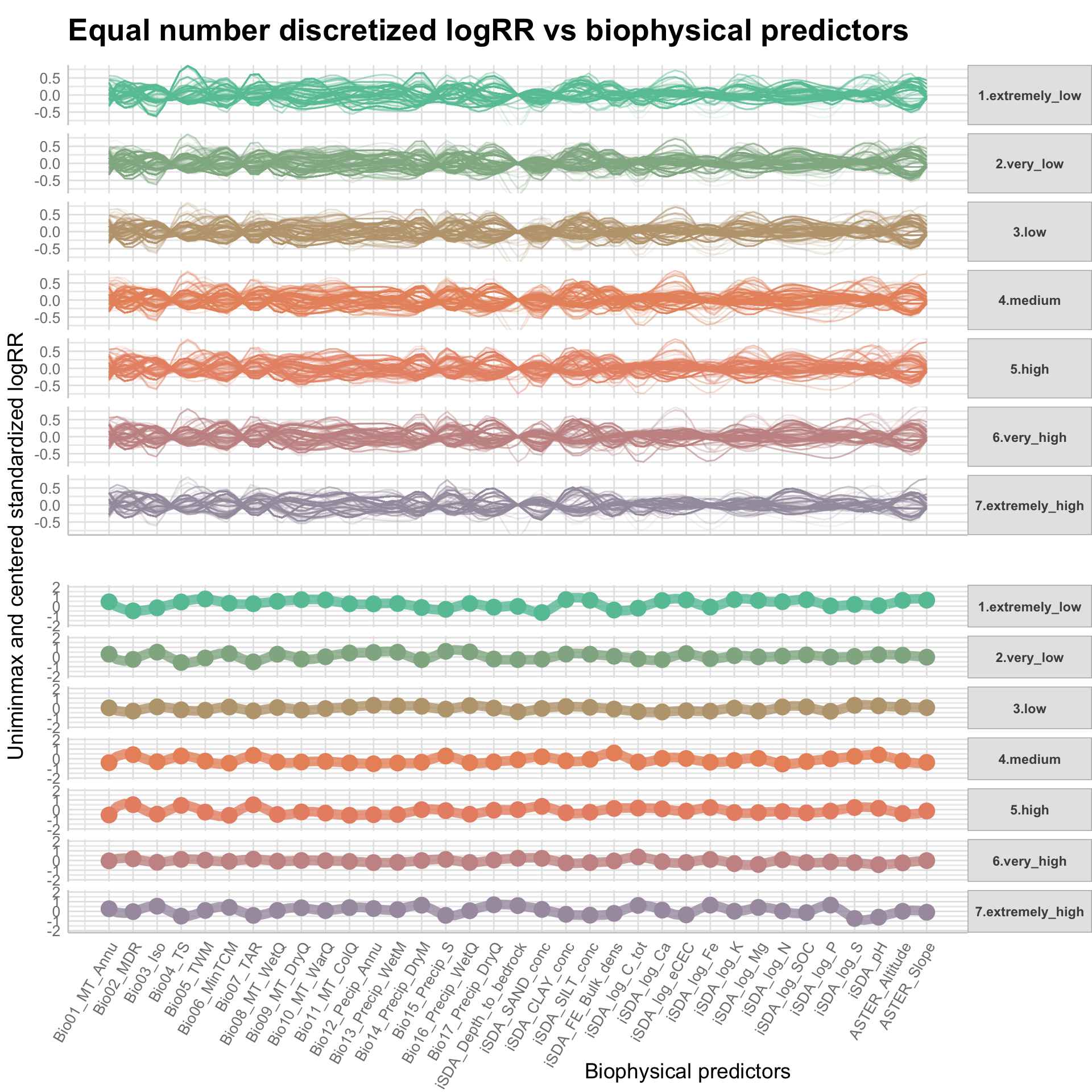

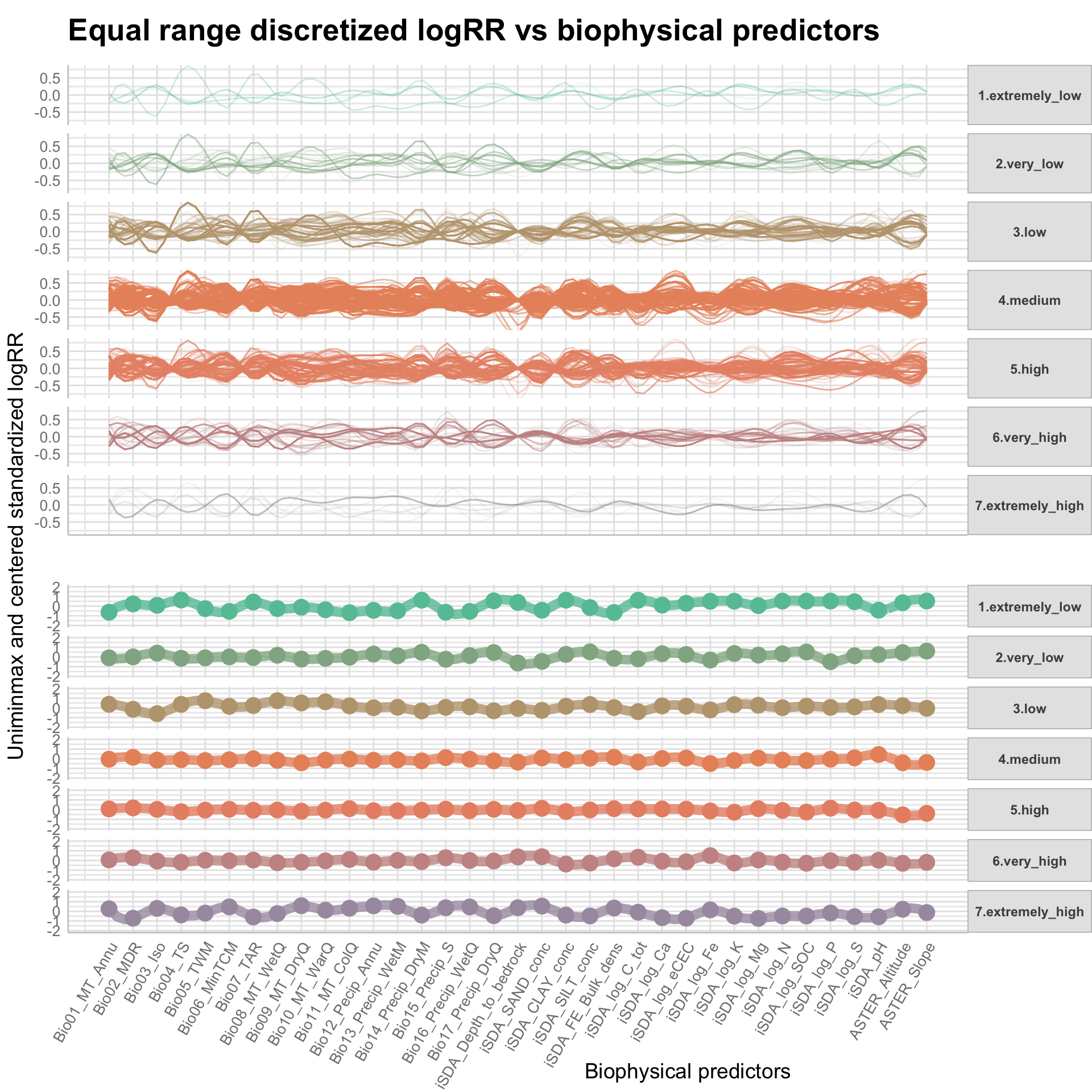

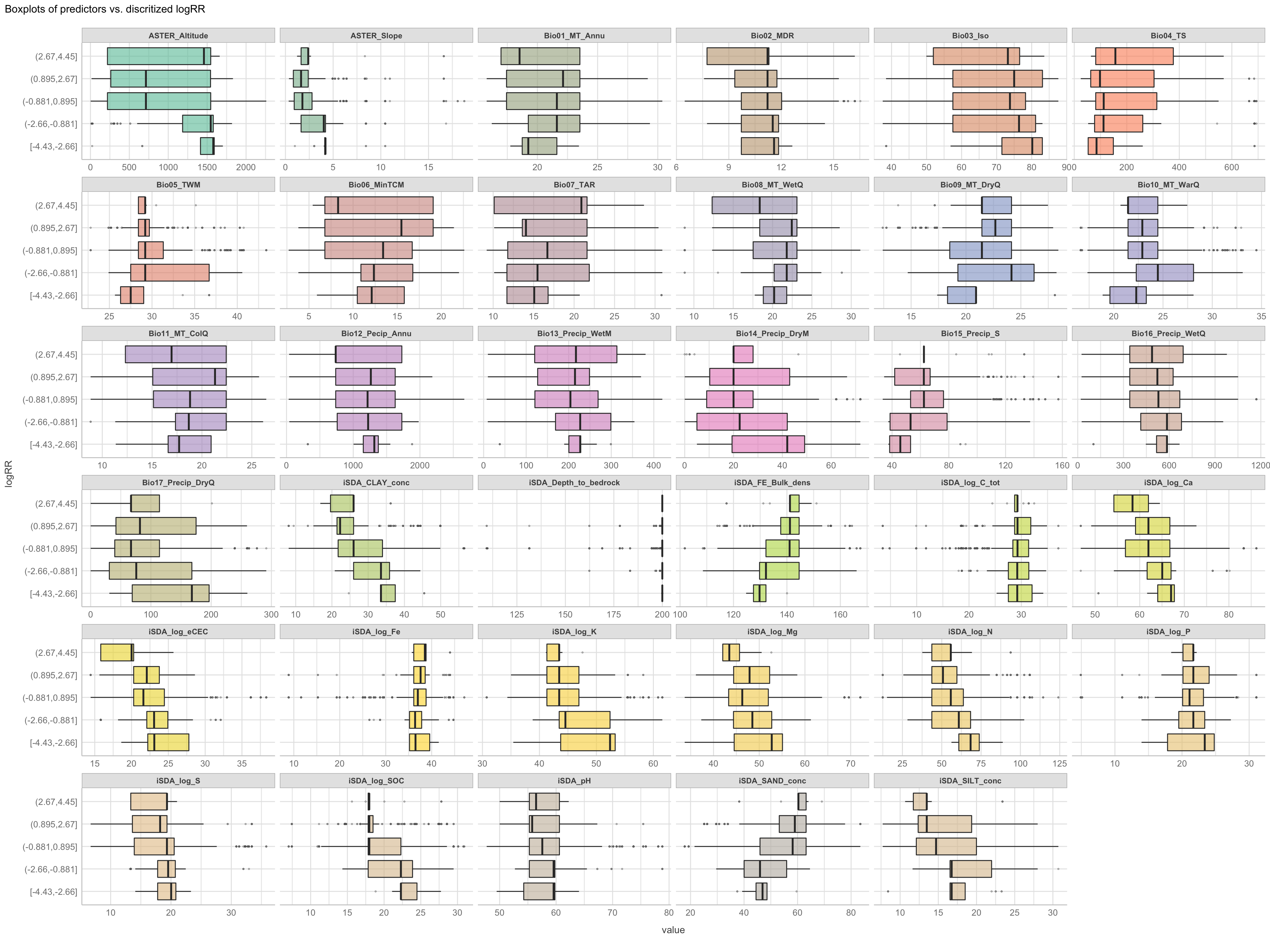

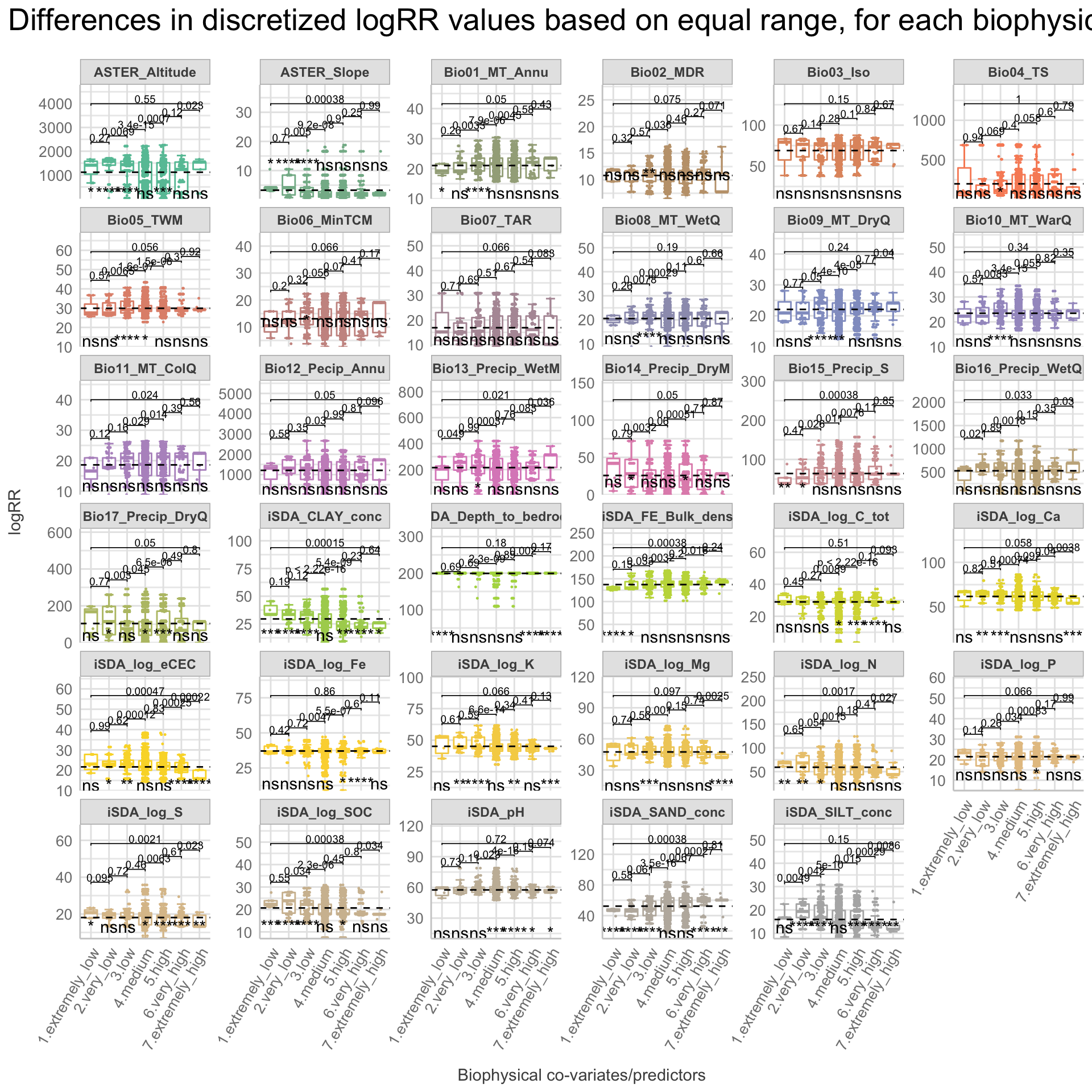

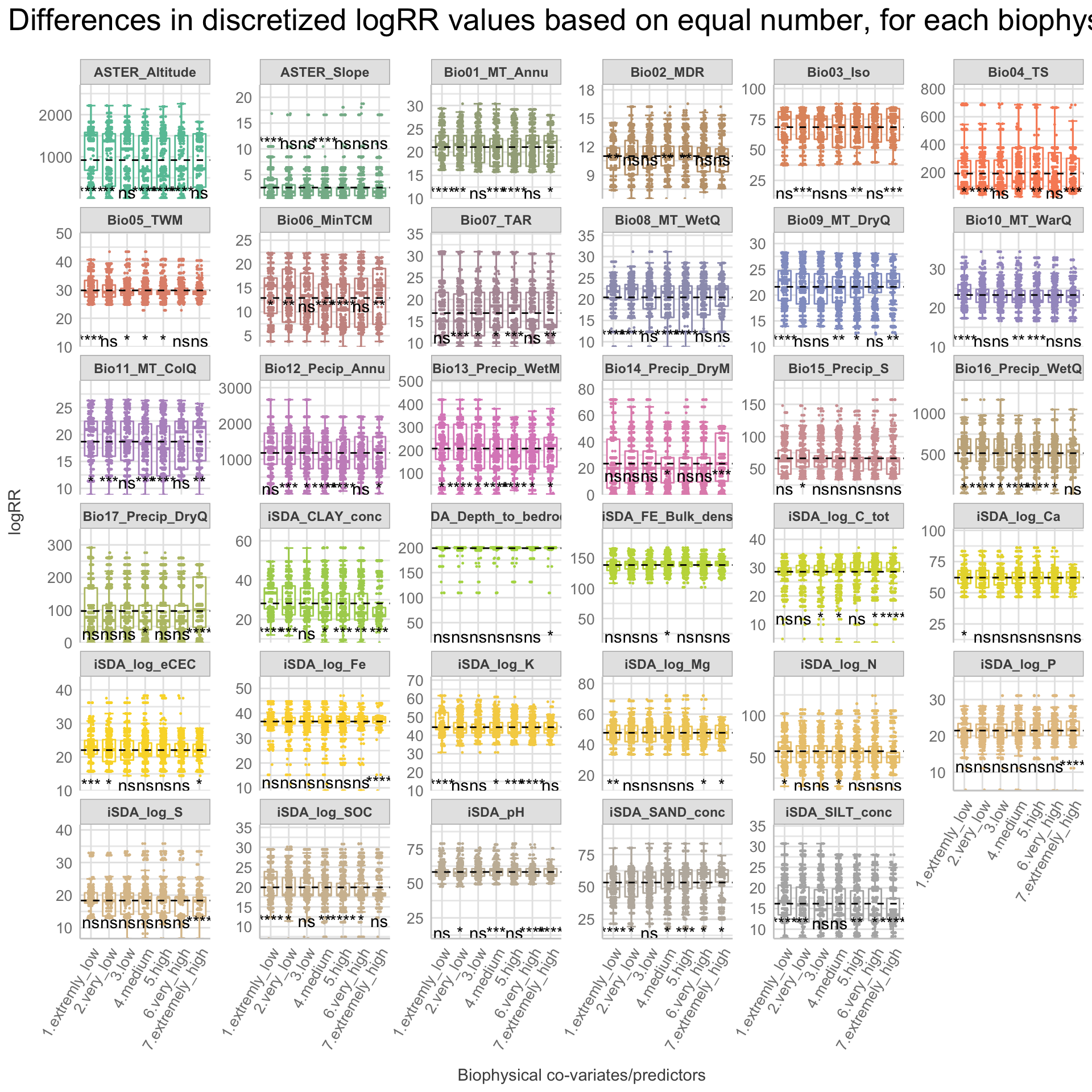

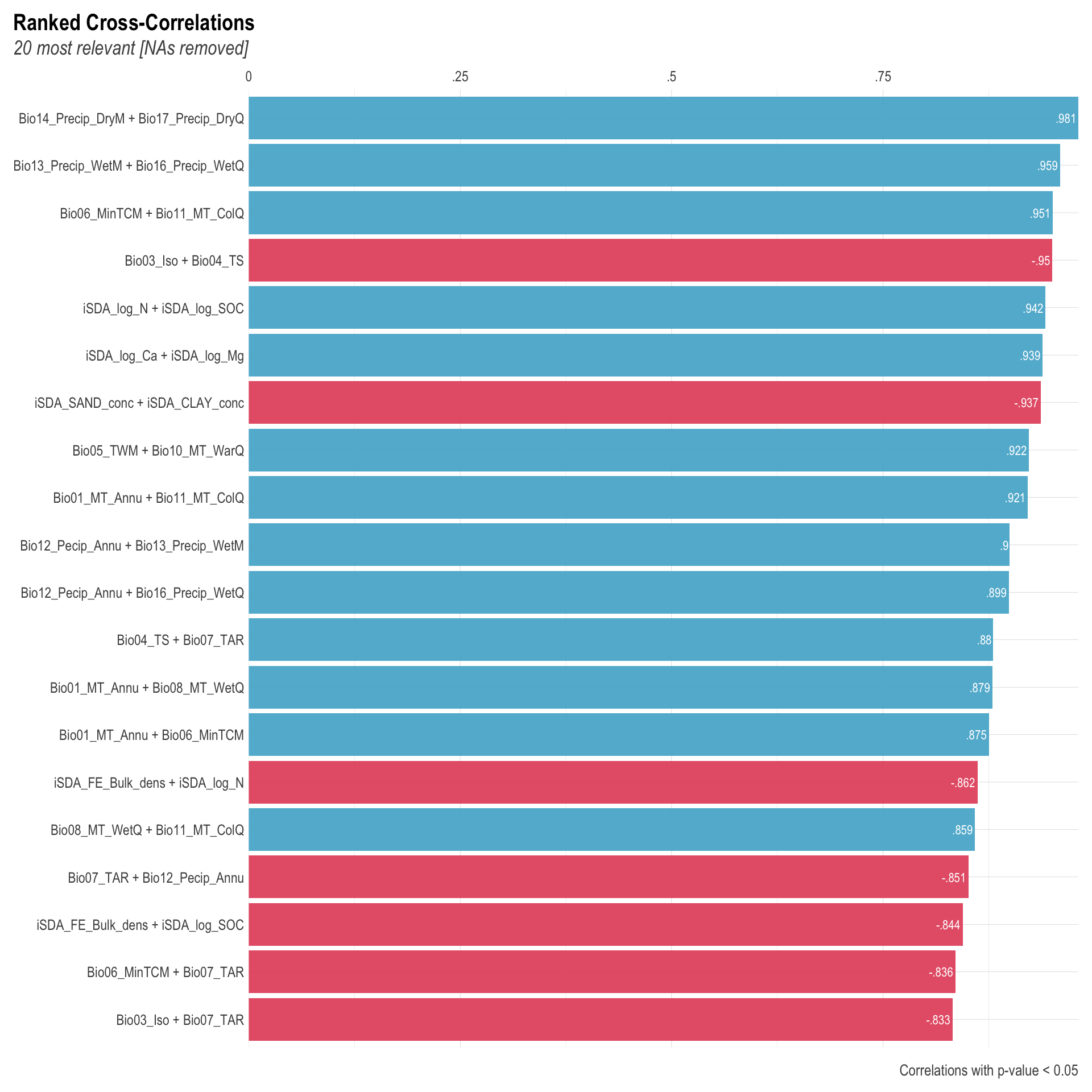

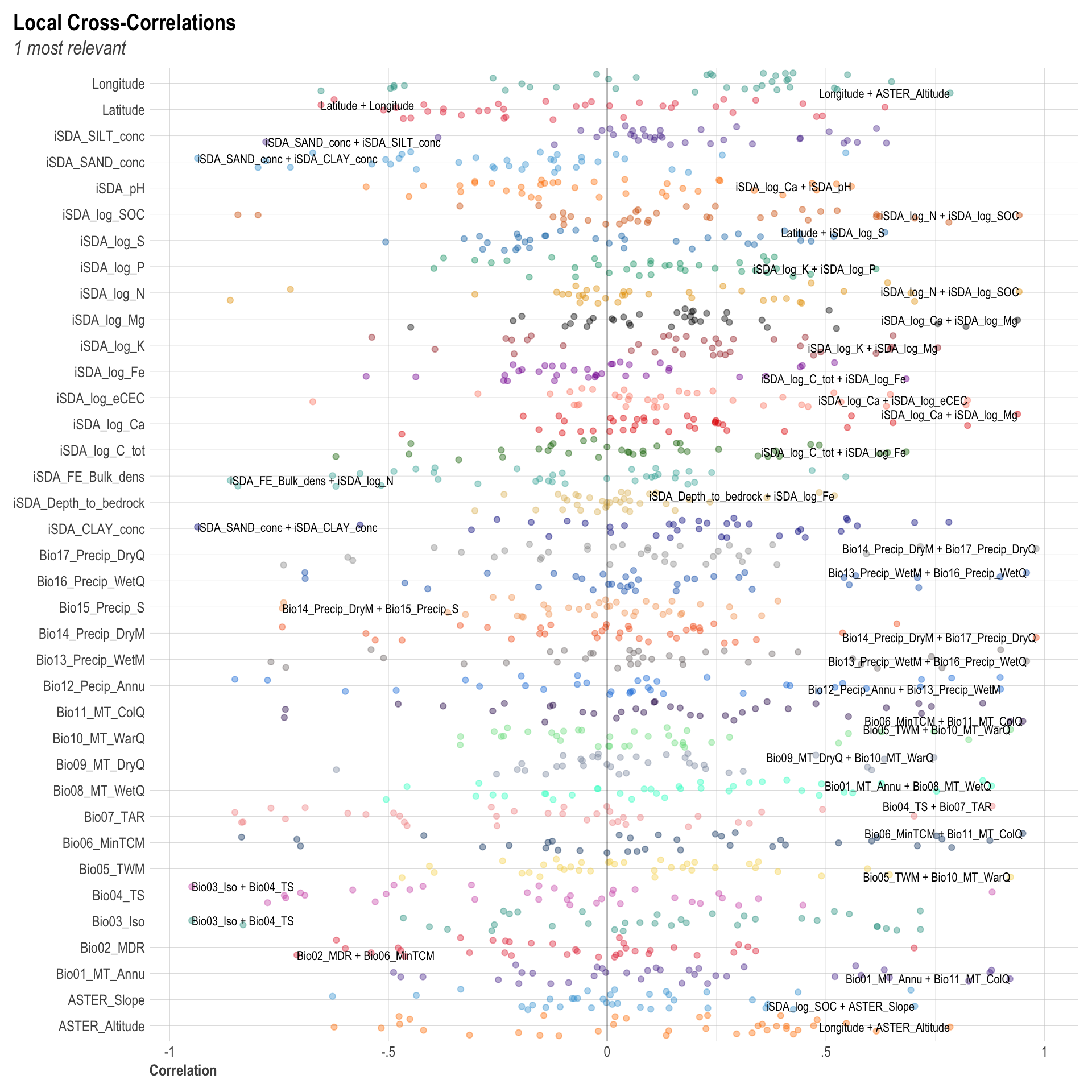

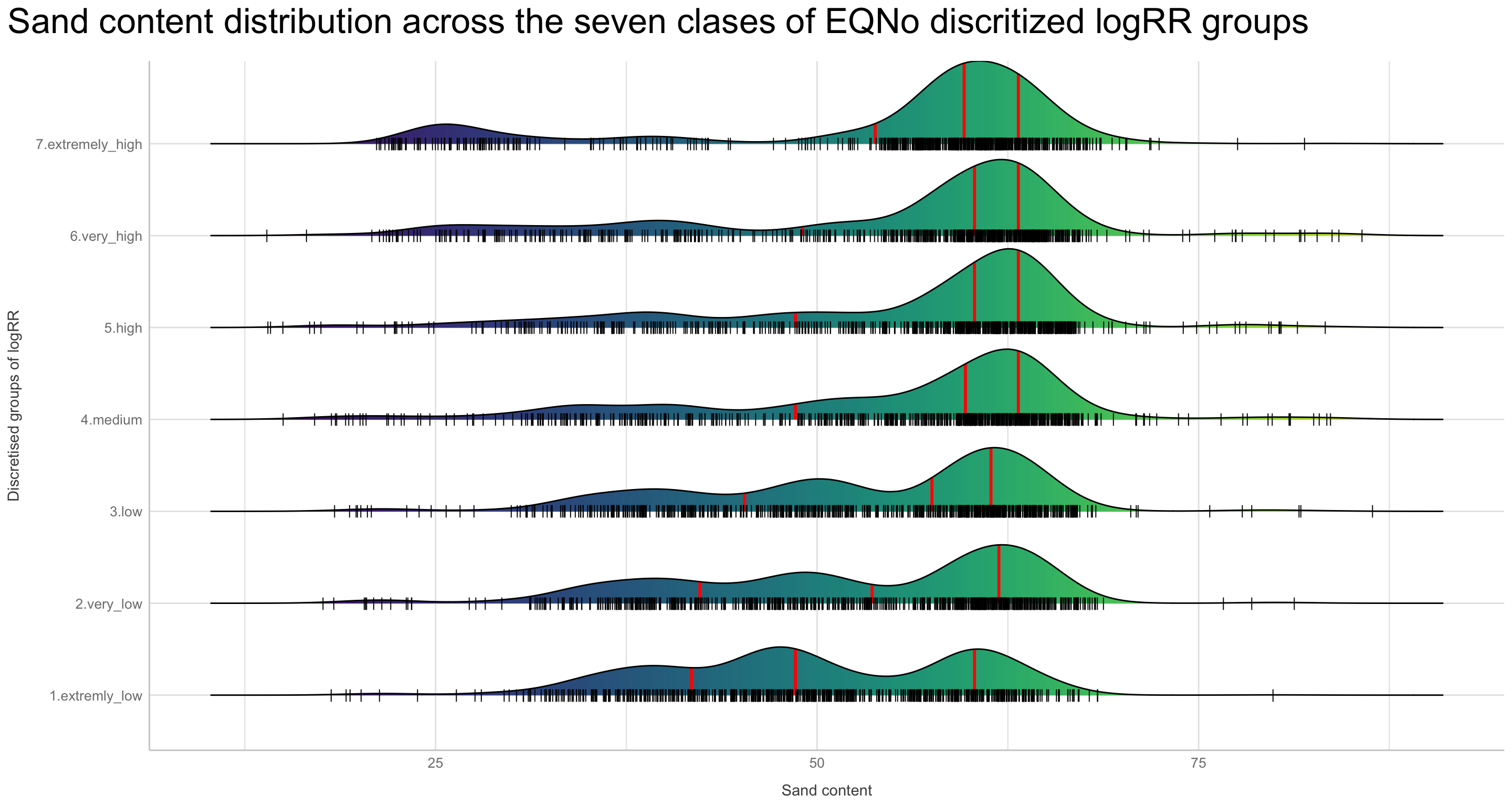

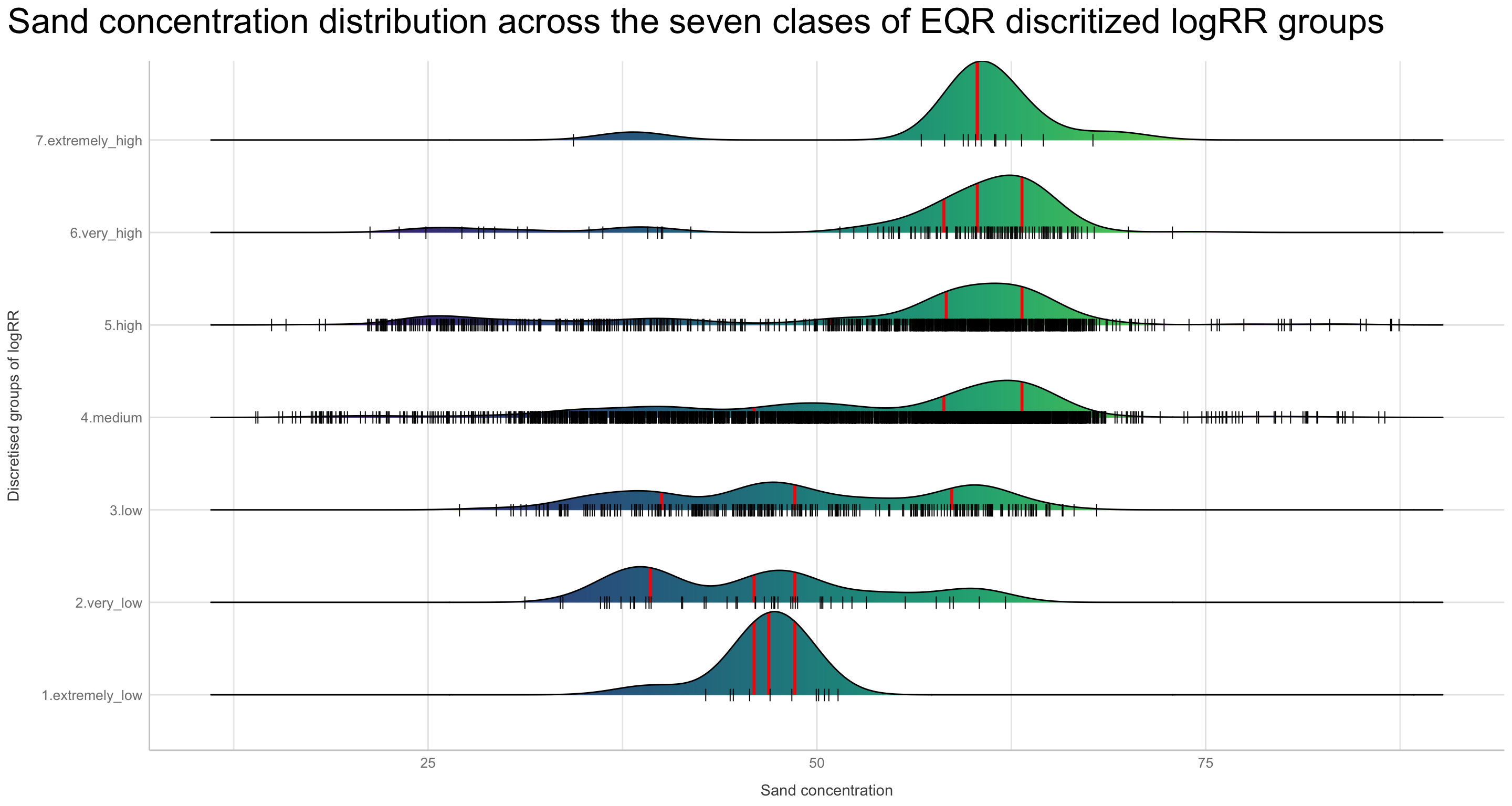

Discritization of target feature, logRR i) Boxplots of predictors vs. discritized logRR

We are now going to discretize our continues outcome feature, logRR into seven categorical/nominal groups. In statistics and machine learning, discretization refers to the process of converting or partitioning continuous features into discretized or nominal features with certain intervals. The goal of discretization is to reduce the number of values a continuous feature have by grouping it into a number of intervals or bins. This can be useful when one wish to perform EDA on a continues feature with no or very little linear (co)-relation to predictors. Hence, we can group the continuous outcome feature, logRR and look at discrete differences on the predictors for each group of logRR. Continuous features have a smaller chance of correlating with the target variable due to infinite degrees of freedom and may have a complex non-linear relationship. After discretizing logRR into groups it it easier to perform test on differences and for these results to be interpreted. The aim of performing the discretization of logRR in our case is to be able to make a pairwise t-test for the different groups of logRR. Thereby getting an understanding of whether there is significant differences between levels of logRR. Discretization, or binning/grouping of a continuous feature is mostly done in two ways, and we are going to evaluate the t-test outcome from both of these methods - because the binning technique is so different:

Equal-Width Discretization: Separating all possible values into ‘N’ number of bins, each having the same width. Formula for interval width: Width = (maximum value - minimum value) / N. Where N is the number of bins or intervals. This method doesn’t improve the value spread and it can handle outliers effectively.

Equal-Frequency Discretization: Separating all possible values into ‘N’ number of bins, each having the same amount of observations. These intervals are normally corresponding to ranges or quantile values. This method does improve the value spread and it can handle outliers effectively.

First, we are creating a two new feature columns in our agroforestry modelling data. We are using the functions cut_interval() and cut_number(), to perform the discrete levels of logRR based on the equal-frequency method and the equal-width method, respectfully. For each method seven groups are created. The groups range from 1, extremely low (low logRR values) to 7, extremely high (high logRR values).

# Pairwise comparison between PrName groups in the different biophysical co-variates.

#Do we find differences in logRR across ?

# Making new column in which we group the outcome variable logRR into binned categories

agrofor.biophys.modelling.data.discretized.logRR <- agrofor.biophys.modelling.data %>%

rationalize(logRR) %>%

drop_na(logRR) %>%

mutate(logRR_counts_cut_interval = cut_interval(logRR, n = 7)) %>%

mutate(logRR_EQR_group = case_when(

logRR_counts_cut_interval == "[-4.43,-3.16]" ~ "1.extremely_low",

logRR_counts_cut_interval == "(-3.16,-1.9]" ~ "2.very_low",

logRR_counts_cut_interval == "(-1.9,-0.627]" ~ "3.low",

logRR_counts_cut_interval == "(-0.627,0.642]" ~ "4.medium",

logRR_counts_cut_interval == "(0.642,1.91]" ~ "5.high",

logRR_counts_cut_interval == "(1.91,3.18]" ~ "6.very_high",

logRR_counts_cut_interval == "(3.18,4.45]" ~ "7.extremely_high",)) %>%

mutate(logRR_counts_cut_number = cut_number(logRR, n = 7)) %>%

mutate(logRR_EQNo_group = case_when(

logRR_counts_cut_number == "[-4.43,-0.31]" ~ "1.extremly_low",

logRR_counts_cut_number == "(-0.31,-0.0195]" ~ "2.very_low",

logRR_counts_cut_number == "(-0.0195,0.155]" ~ "3.low",

logRR_counts_cut_number == "(0.155,0.379]" ~ "4.medium",

logRR_counts_cut_number == "(0.379,0.643]" ~ "5.high",

logRR_counts_cut_number == "(0.643,1.1]" ~ "6.very_high",

logRR_counts_cut_number == "(1.1,4.45]" ~ "7.extremely_high",))

Lets compare the newly created factor levels. Are there differences in how logRR was grouped by the two methods..?

Show code

rmarkdown::paged_table(agrofor.biophys.modelling.data.discretized.logRR %>%

sample_n(25) %>% # randomly sampling a subset of 25 rows/observations

dplyr::relocate(logRR_EQNo_group, logRR_EQR_group, logRR, RR, ID))

logRR_EQNo_group <chr> | logRR_EQR_group <chr> | logRR <dbl> | RR <dbl> | ID <dbl> | |

|---|---|---|---|---|---|

| 4.medium | 4.medium | 0.27259356 | 1.3133663 | 1068 | |

| 2.very_low | 4.medium | -0.16496160 | 0.8479263 | 782 | |

| 3.low | 4.medium | 0.01967277 | 1.0198675 | 1342 | |

| 7.extremely_high | 5.high | 1.13345902 | 3.1063830 | 1855 | |

| 5.high | 4.medium | 0.53256561 | 1.7032967 | 692 | |

| 5.high | 4.medium | 0.50160324 | 1.6513667 | 1068 | |

| 3.low | 4.medium | 0.13815034 | 1.1481481 | 1855 | |

| 6.very_high | 5.high | 0.79850770 | 2.2222222 | 557 | |

| 6.very_high | 5.high | 0.76362044 | 2.1460317 | 1259 | |

| 6.very_high | 5.high | 0.80506510 | 2.2368421 | 632 |

Now we can prepare a parallel coordinate plot, that is an excellent way of visually assessing the differences in patterns for the discretized logRR groups for each of the biophysical predictors on the x-axis. A parallel coordinate plot allows to compare the feature of several individual observations (as a series) on a set of numeric variables (e.g. our biophysical predictors). We are going to make use of the ggparcoord() function from the GGally package to make this fancy multiples plot where all logRR groups are stacked on the y-axis.

Note: that we are making individual plots for each of the two discritization methods.

# Equal number data

par.coord.data.EQNo <-

agrofor.biophys.modelling.data.discretized.logRR %>%

dplyr::select(-c(ID, Out.SubInd, Product, MeanC, MeanT, AEZ16s, Latitude, Country, Longitude, Site.Type, Tree, SubPrName, RR,

logRR, logRR_EQR_group, PrName, PrName.Code, Out.SubInd.Code, logRR_counts_cut_interval, logRR_counts_cut_number)) %>%

mutate_if(is.character, as.factor) %>%

rationalize() %>%

drop_na() %>%

arrange(across(starts_with("logRR_EQNo_group")))

# Equal range data

par.coord.data.EQR <-

agrofor.biophys.modelling.data.discretized.logRR %>%

dplyr::select(-c(ID, Out.SubInd, Product, MeanC, MeanT, AEZ16s, Latitude, Country, Longitude, Site.Type, Tree, SubPrName, RR,

logRR, logRR_EQNo_group, PrName, PrName.Code, Out.SubInd.Code, logRR_counts_cut_interval, logRR_counts_cut_number)) %>%

mutate_if(is.character, as.factor) %>%

rationalize() %>%

drop_na() %>%

arrange(across(starts_with("logRR_EQR_group")))

# Facet label names

logRR_group.label.names <- c("1.extremely_low", "2.very_low", "3.low", "4.medium", "5.high", "6.very_high", "7.extremely_high")

names(logRR_group.label.names) <- c("1", "2", "3", "4", "5", "6", "7")

Defining a custom colours range for the parallel coordinate plots

Show code

# Defining a custom colours range

#library(RColorBrewer)

nb.cols.25 <- 25

era.af.colors.25 <- colorRampPalette(brewer.pal(8, "Set2"))(nb.cols.25)

Show code

par.coord.EQNo.plot <-

GGally::ggparcoord(par.coord.data.EQNo,

columns = 1:35,

#groupColumn = 36, # group by logRR_EQNo_group

scale = "center", # uniminmax to standardize vertical height, then center each variable at a value specified by the scaleSummary param

# order = "anyClass", # ordering by feature separation between any one class and the rest (as opposed to their overall variation between classes).

missing = "exclude",

showPoints = FALSE,

boxplot = FALSE,

#shadeBox = 35,

centerObsID = 1,

#title = "Discretized logRR values based on equal number vs. biophysical predictors",

alphaLines = 0.1,

splineFactor = TRUE, # making lines curvey

#shadeBox = NULL,

mapping = aes(color = as.factor(logRR_EQNo_group))) +

scale_color_manual("logRR_EQNo_group", labels = levels(par.coord.data.EQNo$logRR_EQNo_group), values = era.af.colors.25) +

# ggplot2::scale_color_manual(values = era.af.colors.25) + # assigning costom colour range

facet_grid(logRR_EQNo_group ~ . , labeller = labeller(logRR_EQNo_group = logRR_group.label.names)) +

coord_cartesian(ylim = c(- 0.8, 0.8)) +

theme_lucid() +

theme(plot.title = element_text(size = 20), legend.position = "none", #axis.text.x = element_text(angle = 60, hjust = 1)

axis.text.x = element_blank(),

strip.text.y = element_text(angle = 0),

plot.margin = margin(1, 0, 1, 0)) +

xlab("") +

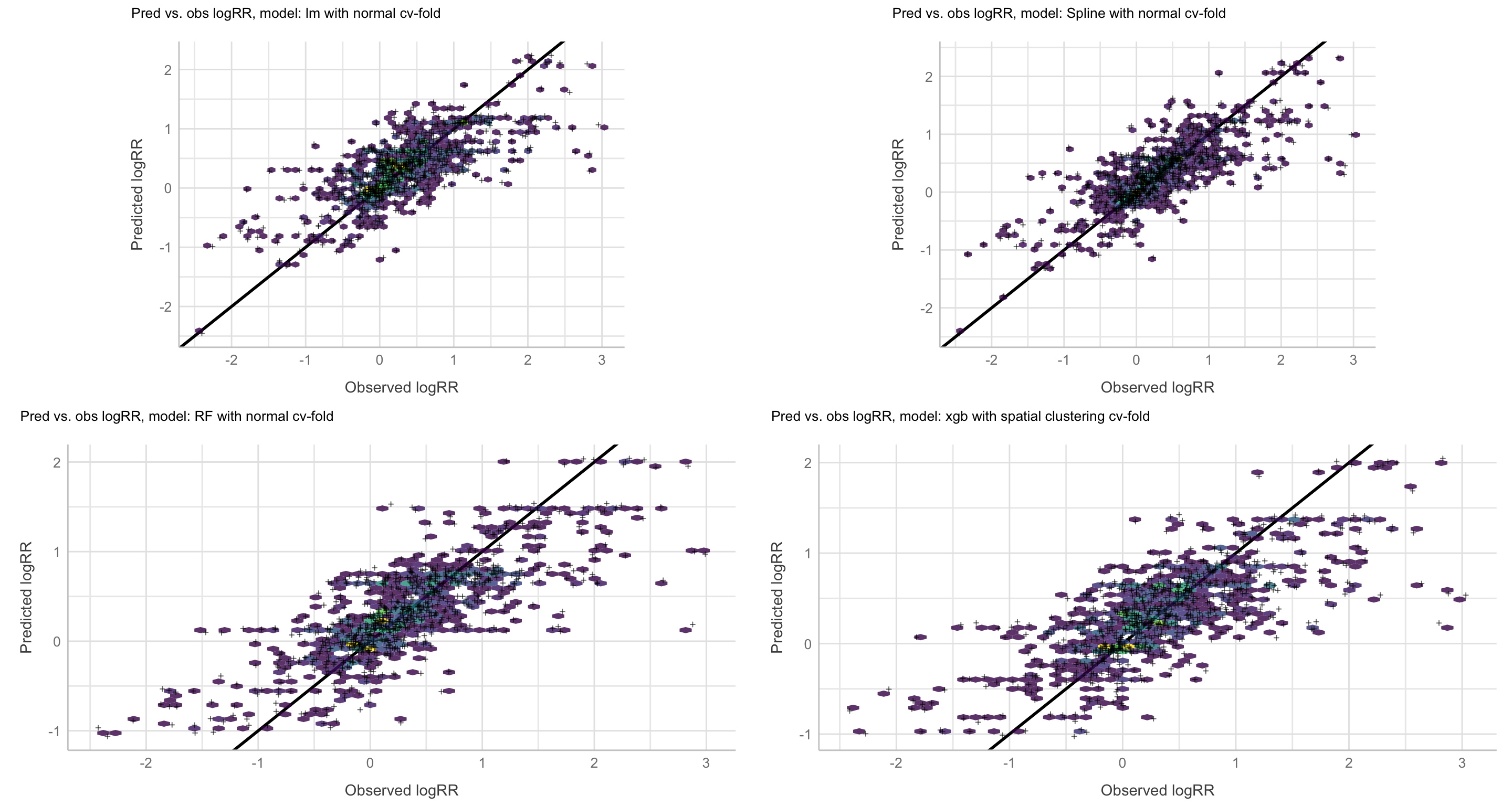

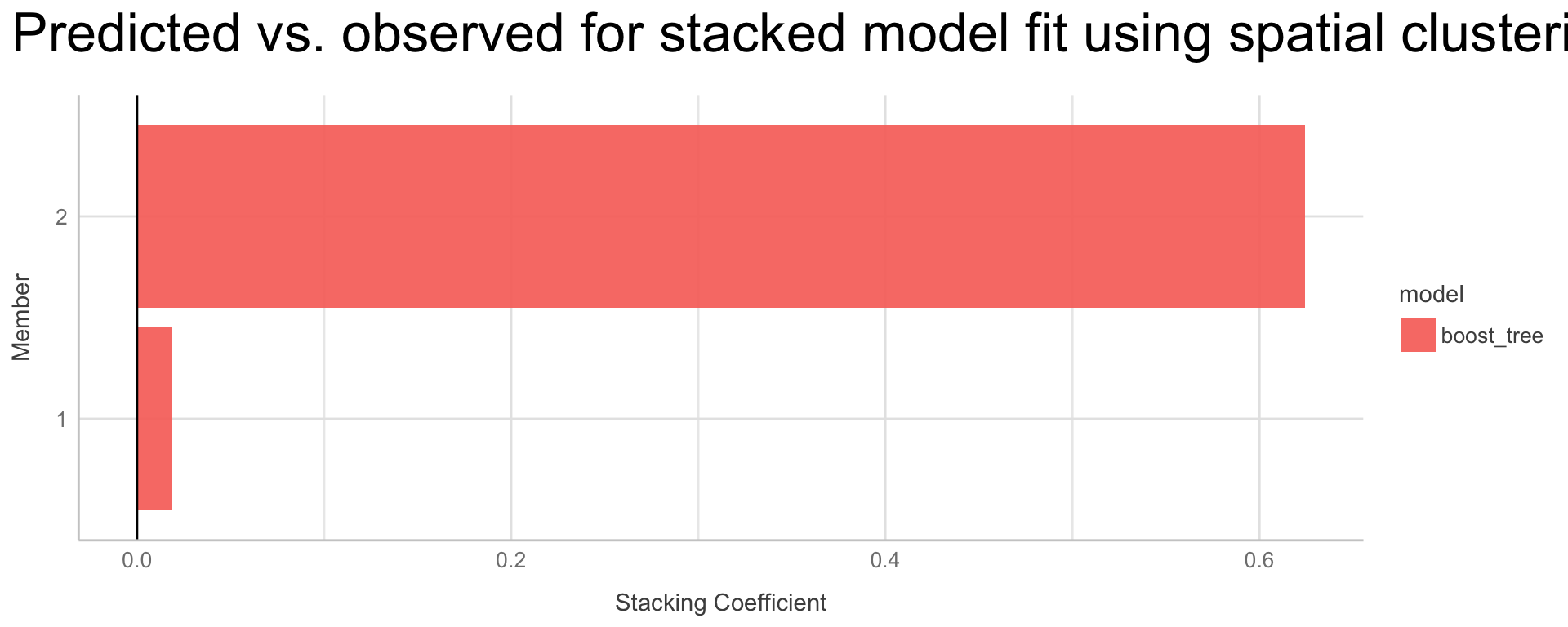

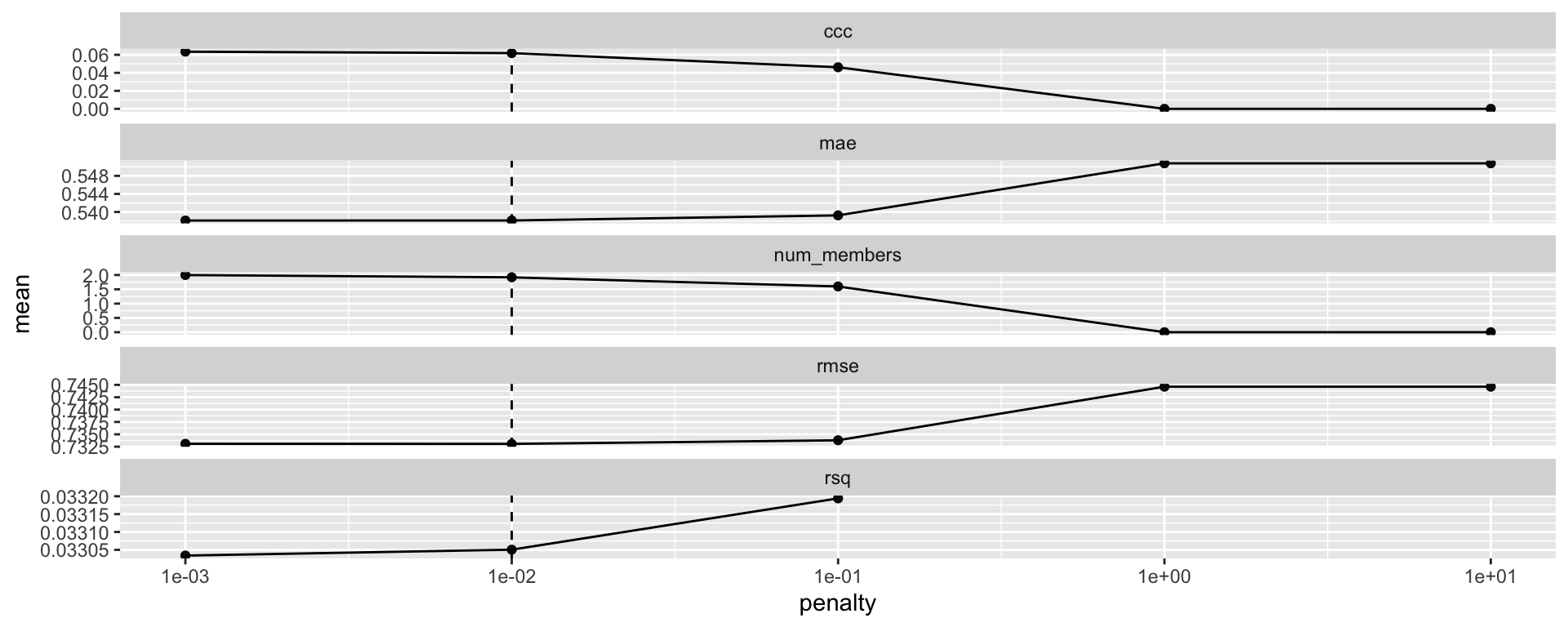

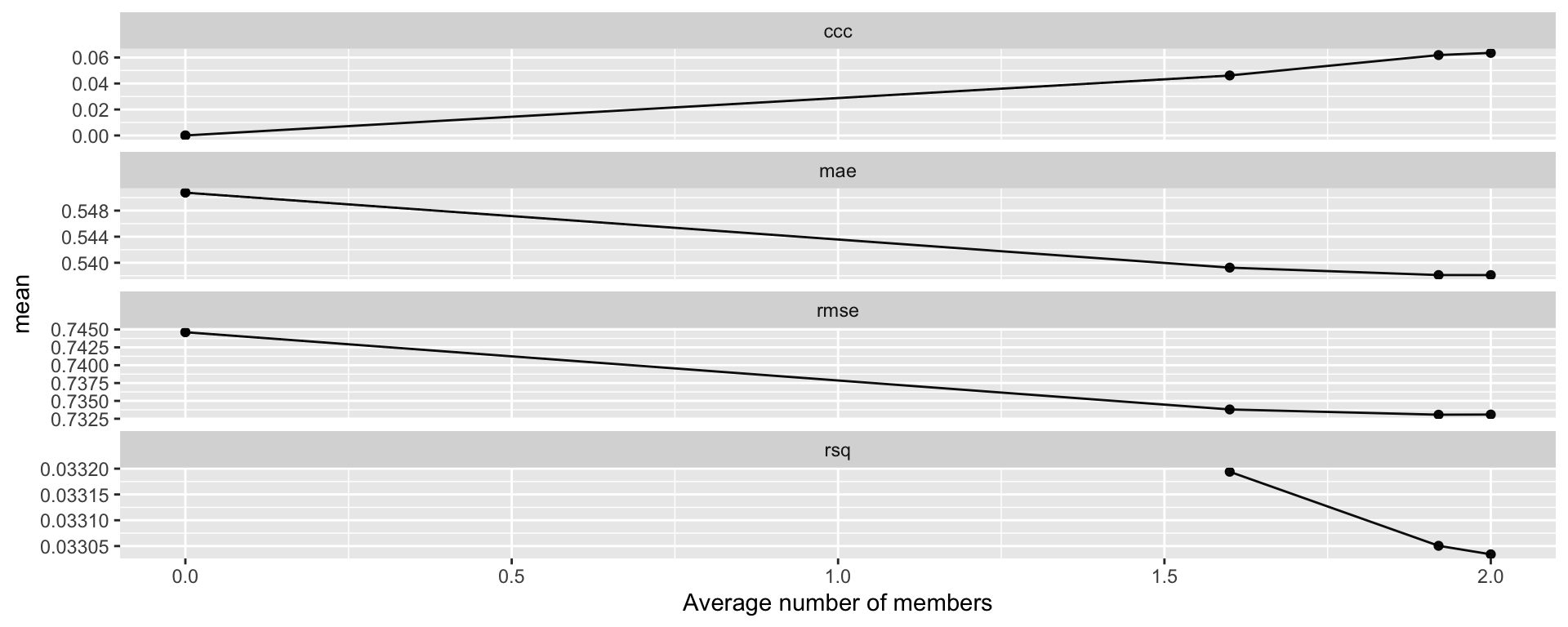

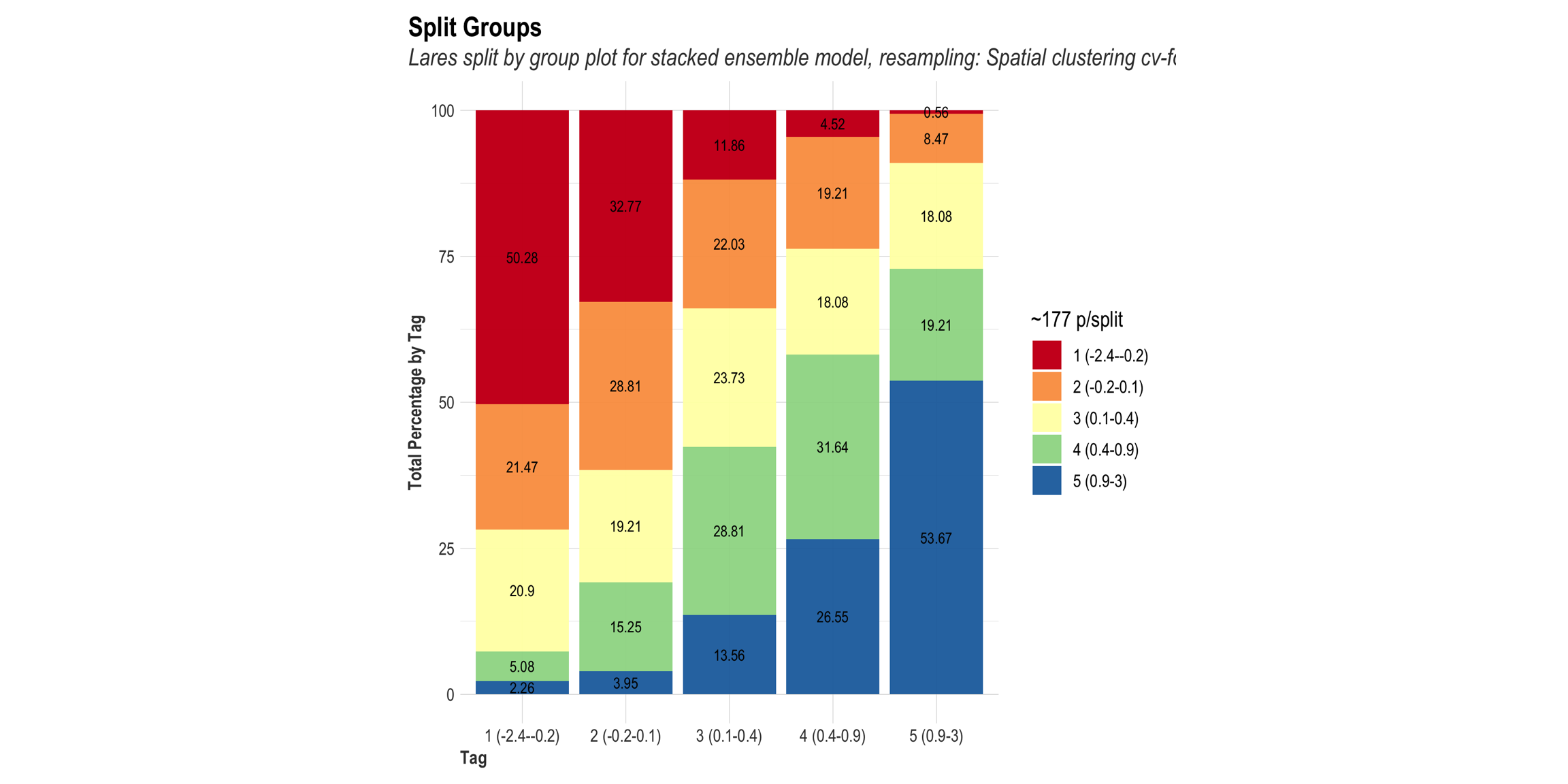

ylab("")